Silicon searches deep

Through spaces minds cannot reach—

New truths evolve

With every article and podcast episode, we provide comprehensive study materials: References, Executive Summary, Briefing Document, Quiz, Essay Questions, Glossary, Timeline, Cast, FAQ, Table of Contents, Index, Polls, 3k Image, Fact Check and Comic at the very bottom of the page.

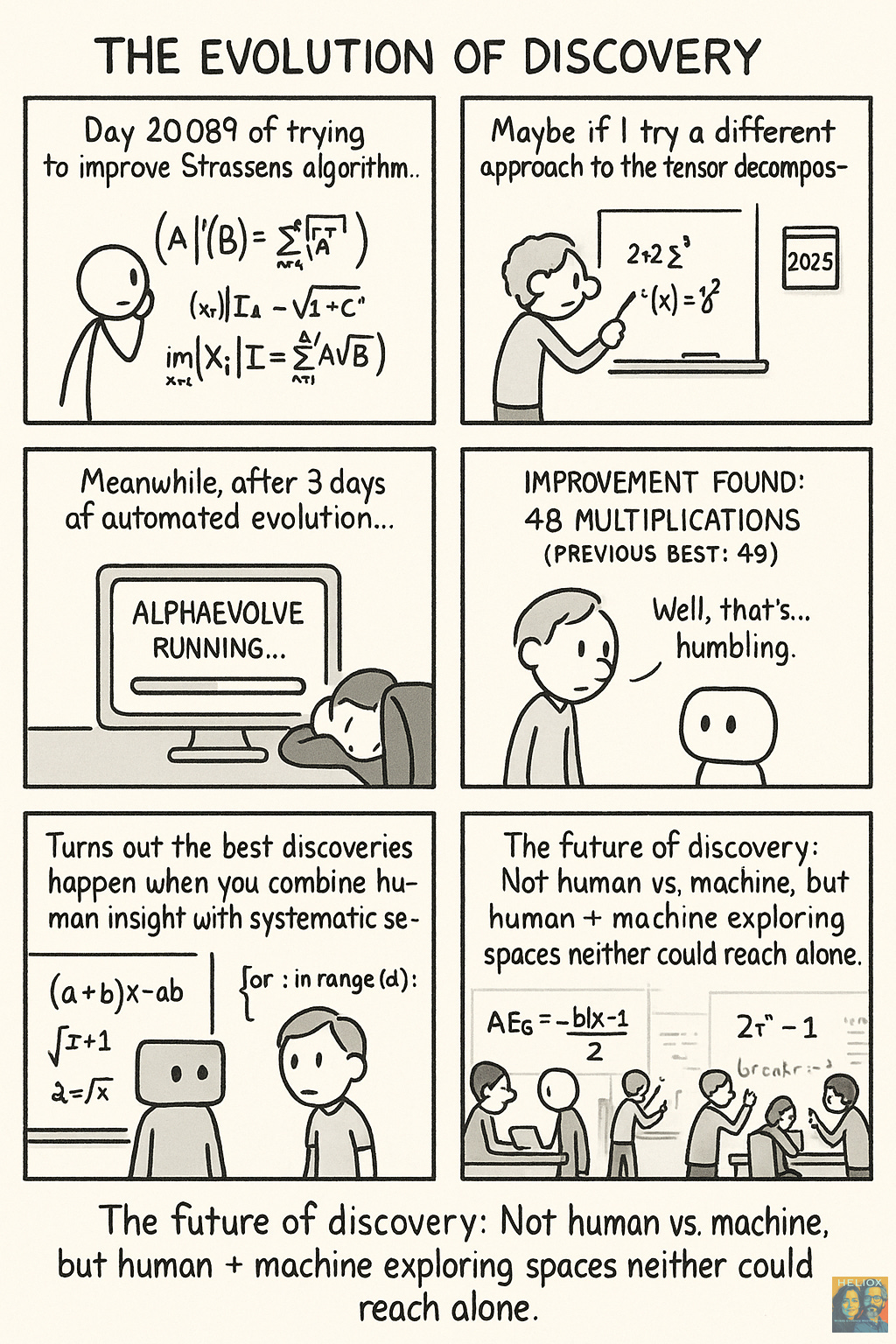

There's something deeply unsettling about watching a machine solve problems that have stumped humanity's brightest minds for over half a century. Not because it threatens our ego—though it certainly does that—but because it forces us to confront uncomfortable truths about the nature of knowledge, discovery, and what it means to be human in an age of artificial intelligence.

Google DeepMind's AlphaEvolve just broke a 56-year-old mathematical record. Not improved upon. Not incrementally advanced. Broke. The kind of breakthrough that makes you wonder what else we've been missing, what other solutions have been hiding in plain sight, waiting for the right kind of intelligence to find them.

The Uncomfortable Truth About Human Limitations

We like to think of mathematical breakthroughs as the domain of human genius—the eureka moments of brilliant minds working in isolation, or perhaps in collaboration with other brilliant minds. There's something romantic about the idea of Terence Tao or another mathematical luminary having that flash of insight that unlocks a problem that's been festering for decades.

But AlphaEvolve doesn't have flashes of insight. It doesn't have romantic notions about mathematical beauty or elegant proofs. It simply... searches. Systematically. Relentlessly. Without the cognitive biases, the emotional attachments, or the preconceived notions that both fuel and limit human creativity.

And that's what makes this so profound—and so terrifying.

The system didn't just stumble upon a solution to matrix multiplication optimization. It discovered an algorithm that multiplies two 4x4 complex-valued matrices using only 48 scalar multiplications. That is one fewer than the 49 required by Strassen's 1969 algorithm applied recursively. Forty-eight. After 56 years of human mathematicians, computer scientists, and theorists working on this problem, an AI found a better way by systematically exploring possibilities that humans either overlooked or dismissed.
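Where does Strassen's baseline come from? His 1969 scheme multiplies 2x2 matrices with 7 products instead of 8. Treat a 4x4 matrix as a 2x2 grid of 2x2 blocks and recurse, and you get 7 x 7 = 49 scalar multiplications, the count AlphaEvolve's 48 improves on. The sketch below is a minimal teaching version that counts those multiplications. It is not optimized and it is not AlphaEvolve's 48-multiplication scheme. The function name and the `counter` argument are our own.

```python
import numpy as np

def strassen(A, B, counter):
    """Multiply square matrices whose size is a power of two with Strassen's
    recursion; counter[0] accumulates the number of scalar multiplications."""
    n = A.shape[0]
    if n == 1:
        counter[0] += 1          # one scalar multiplication at the leaves
        return A * B
    h = n // 2
    A11, A12, A21, A22 = A[:h, :h], A[:h, h:], A[h:, :h], A[h:, h:]
    B11, B12, B21, B22 = B[:h, :h], B[:h, h:], B[h:, :h], B[h:, h:]
    # Strassen's seven block products (block additions are not counted).
    M1 = strassen(A11 + A22, B11 + B22, counter)
    M2 = strassen(A21 + A22, B11, counter)
    M3 = strassen(A11, B12 - B22, counter)
    M4 = strassen(A22, B21 - B11, counter)
    M5 = strassen(A11 + A12, B22, counter)
    M6 = strassen(A21 - A11, B11 + B12, counter)
    M7 = strassen(A12 - A22, B21 + B22, counter)
    # Recombine the products into the four blocks of the result.
    C11 = M1 + M4 - M5 + M7
    C12 = M3 + M5
    C21 = M2 + M4
    C22 = M1 - M2 + M3 + M6
    return np.block([[C11, C12], [C21, C22]])

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
B = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
count = [0]
C = strassen(A, B, count)
print(count[0])               # 49
print(np.allclose(C, A @ B))  # True
```

Saving a single multiplication matters because these schemes are applied recursively to large matrices, so the savings compound at every level.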

The Evolutionary Advantage of Machine Thinking

What makes AlphaEvolve different isn't just its computational power—though that's certainly part of it. It's the evolutionary approach to problem-solving that mirrors natural selection but operates at the speed of silicon rather than biology.

The system takes existing code, generates variations, tests them against objective criteria, and keeps what works. It's Darwin's logic applied to algorithms, but without the luxury of human intuition to guide the process. This isn't necessarily better—human intuition has given us remarkable breakthroughs throughout history. But it's different in ways that reveal blind spots in how we approach complex problems.

Consider the kissing number problem: how many non-overlapping unit spheres can touch a central unit sphere in 11-dimensional space? AlphaEvolve found a configuration of 593 such spheres, raising the known lower bound from 592 to 593. One more sphere in 11-dimensional space might sound trivial, but it is a genuine advance on a geometric optimization problem that had resisted human mathematicians.
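Checking a claimed kissing configuration is easy even when finding one is hard. That asymmetry is exactly what a search-and-evaluate system exploits. The sketch below is a minimal checker, assuming the candidate is given as integer center vectors. If every vector has the same length r and every pair is at least r apart, rescaling by 2/r does two things:

- It puts unit spheres at distance 2 from the origin, so each touches the central unit sphere.
- It leaves no two of them overlapping.

The 12-point example is the classical three-dimensional arrangement (the vertices of a cuboctahedron), not the 11-dimensional construction. The helper names are our own.

```python
from itertools import combinations, product

def sq_norm(v):
    return sum(x * x for x in v)

def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def is_kissing_configuration(points):
    """True if, after uniform rescaling, the points are centers of unit spheres
    that all touch a central unit sphere at the origin without overlapping."""
    if not points:
        return False
    r2 = sq_norm(points[0])
    if r2 == 0 or any(sq_norm(p) != r2 for p in points):
        return False  # every center must sit at the same nonzero distance
    # Pairwise distance >= common radius means no two outer spheres overlap.
    return all(sq_dist(p, q) >= r2 for p, q in combinations(points, 2))

def cuboctahedron():
    """The 12 integer vectors with two entries equal to +/-1 and one zero."""
    pts = set()
    for signs in product((1, -1), repeat=2):
        for i, j in combinations(range(3), 2):
            v = [0, 0, 0]
            v[i], v[j] = signs
            pts.add(tuple(v))
    return sorted(pts)

pts = cuboctahedron()
print(len(pts), is_kissing_configuration(pts))   # 12 True
print(is_kissing_configuration(pts + [pts[0]]))  # False (duplicate center)
```

Integer coordinates keep the comparisons exact. A floating-point version would need a tolerance.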

The system didn't get there through elegant reasoning or beautiful insights. It got there through systematic exploration of possibilities that humans, constrained by our three-dimensional thinking and cognitive limitations, simply couldn't navigate effectively.

The Partnership Paradox

Here's where things get interesting—and where the narrative around AI "replacing" humans reveals its limitations. AlphaEvolve doesn't work in isolation. It requires human experts to define the problems, set up the evaluation criteria, and interpret the results. Terence Tao and other mathematicians aren't being replaced; they're being amplified.

But this partnership raises uncomfortable questions about the nature of intellectual work. If the most creative and seemingly human endeavor—mathematical discovery—can be systematized and automated, what does that say about other forms of knowledge work?

The answer isn't simple displacement. The system's success at Google's infrastructure optimization shows us something more nuanced. When AlphaEvolve optimized data center scheduling, it recovered, on average, 0.7% of Google's worldwide compute resources. At Google's scale, that is a substantial amount of capacity, gained purely through smarter software.

The heuristic it discovered wasn't some incomprehensible black box. It was surprisingly simple and interpretable—a rule based on ratios of resources needed versus resources available. Human engineers could understand it, trust it, and maintain it. The AI found something elegant that humans had missed, but it found it in a language humans could speak.
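The deployed heuristic isn't reproduced here, but a ratio-based priority rule of this kind is easy to picture. The sketch below rests on several assumptions:

- There are two resources, CPU and memory.
- A higher score means higher priority.
- Machines that cannot fit the job are filtered out before scoring.
- The formula, including its 0.5 weight, is a hypothetical illustration rather than the rule AlphaEvolve found.

```python
from dataclasses import dataclass

@dataclass
class Resources:
    cpu: float
    mem: float

def priority(required: Resources, free: Resources) -> float:
    """Hypothetical ratio-based score; higher means place the job here first."""
    cpu_ratio = required.cpu / free.cpu  # share of the machine's free CPU the job needs
    mem_ratio = required.mem / free.mem  # share of the machine's free memory the job needs
    # Favor tight fits (large ratios) but penalize lopsided ones, which strand
    # one resource on the machine. The 0.5 weight is an arbitrary choice.
    return cpu_ratio + mem_ratio - 0.5 * abs(cpu_ratio - mem_ratio)

def pick_machine(required: Resources, machines: list[Resources]):
    """Score only machines that can already host the job, so any pick is valid."""
    feasible = [m for m in machines
                if m.cpu > 0 and m.mem > 0
                and m.cpu >= required.cpu and m.mem >= required.mem]
    if not feasible:
        return None
    return max(feasible, key=lambda m: priority(required, m))

job = Resources(cpu=2, mem=4)
machines = [Resources(8, 8), Resources(2, 16), Resources(3, 5), Resources(1, 32)]
print(pick_machine(job, machines))  # Resources(cpu=3, mem=5)
```

A rule this short can be read, audited and maintained by the engineers who run the scheduler, which is the point the paragraph above makes.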

The Deeper Implications

What AlphaEvolve represents isn't just a better way to solve mathematical problems. It's a preview of a future where the boundaries between human and artificial intelligence become increasingly blurred, not through some science fiction merger, but through complementary capabilities that amplify each other.

The system achieved an average 23% speedup across the kernels it optimized for Gemini, cutting Gemini's overall training time by 1%. In the context of Google's scale, that's enormous, but it's also recursive. An AI system is literally helping to optimize the training of the very models it runs on. We're witnessing the emergence of a feedback loop where artificial intelligence improves the infrastructure that creates more artificial intelligence.

This isn't the AI apocalypse. It's something more subtle and potentially more transformative: the gradual augmentation of human cognitive capabilities through partnerships with systems that think differently than we do.

The Questions We're Not Asking

But here's what keeps me up at night: if AlphaEvolve can break mathematical records that have stood for 56 years, what other fundamental assumptions about the limits of knowledge are we carrying around? What other "unsolvable" problems are actually just beyond the reach of human-scale systematic exploration?

The system's success suggests that many problems we consider intractable might simply require more systematic exploration than human minds can practically conduct. The mathematical breakthroughs weren't the result of new theoretical insights—they came from better search strategies, more comprehensive exploration of possibility spaces.

This raises profound questions about the nature of human knowledge itself. How much of what we consider "impossible" or "unknown" is actually just "unsearched"? How many solutions to climate change, disease, poverty, or other global challenges are hiding in complexity spaces that human minds can't effectively navigate alone?

The Evolutionary Moment

We're living through an evolutionary moment, but not the kind of evolution we typically think about. This isn't biological evolution operating over millions of years. It's the evolution of intelligence itself, happening in real time, as we develop systems that can systematically explore possibilities beyond human cognitive reach.

AlphaEvolve represents a new kind of intellectual symbiosis—not the replacement of human intelligence, but its extension into domains where systematic exploration matters more than intuitive leaps. The system found solutions that humans missed not because it's smarter than us, but because it can search more thoroughly than we can.

The question isn't whether AI will replace human creativity. It's whether we're ready for a world where the most profound discoveries come not from individual genius, but from the systematic exploration of possibility spaces that no human mind could navigate alone.

That's the real breakthrough here—not the specific mathematical records that were broken, but the demonstration that there are entire categories of problems that might be solvable through systematic search rather than creative insight. Problems that have been waiting not for genius, but for the right kind of intelligence to find them.

And that changes everything about how we think about the future of human knowledge.

Link References

AlphaEvolve: A coding agent for scientific and algorithmic discovery

AlphaEvolve: A Gemini-powered coding agent for designing advanced algorithms


STUDY MATERIALS

Briefing

Date: May 16, 2025

Source: Excerpts from "AlphaEvolve: A coding agent for scientific and algorithmic discovery" by DeepMind

I. Executive Summary

AlphaEvolve is a novel, Gemini-powered evolutionary coding agent developed by Google DeepMind. It significantly advances the capabilities of state-of-the-art Large Language Models (LLMs) by autonomously generating, critiquing, and evolving code to solve complex scientific and engineering problems. Unlike previous methods, AlphaEvolve operates on entire code files, supports multiple programming languages, and can handle longer evaluation times, making it suitable for real-world optimization challenges.

Key achievements include:

Discovering a matrix multiplication algorithm for 4x4 complex-valued matrices that uses 48 scalar multiplications. This is the first improvement in 56 years over Strassen's algorithm (49 multiplications) in this setting.

Developing more efficient scheduling algorithms for Google's data centers.

Finding a functionally equivalent simplification in hardware accelerator circuit design (TPUs).

Accelerating the training of the Gemini LLM itself.

Achieving state-of-the-art improvements in various mathematical problems across analysis, geometry, and combinatorics.

AlphaEvolve's success highlights the potential of LLM-orchestrated evolutionary approaches to drive significant advancements in computational and scientific discovery.

II. Core Functionality and Methodology

AlphaEvolve operates on an autonomous pipeline of LLMs that iteratively improve algorithms by making direct changes to code. This process is grounded in code execution and automatic evaluation, which allows AlphaEvolve to "avoid any incorrect suggestions from the base LLM."

Key features and distinctions from prior work (e.g., FunSearch):

Evolutionary Approach: AlphaEvolve uses an evolutionary process. By "continuously receiving feedback from one or more evaluators, AlphaEvolve iteratively improves the algorithm, potentially leading to new scientific and practical discoveries." Previously generated programs are stored in a database and reused as starting points.

Scope of Evolution: Evolves entire code files (up to hundreds of lines of code), rather than just single functions (10-20 lines).

Language Agnostic: Can evolve code in "any language," unlike FunSearch, which is limited to Python.

Evaluation Flexibility: Can evaluate for hours, in parallel, on accelerators, as opposed to requiring fast evaluation (≤ 20 minutes on 1 CPU).

LLM Usage: Benefits from state-of-the-art LLMs, and "thousands of LLM samples suffice," compared with the millions used by FunSearch.

Context and Feedback: Leverages "rich context and feedback in prompts" to guide the LLMs.

Multi-objective Optimization: Can "simultaneously optimize multiple metrics."

Operational Mechanism: The system takes an "Initial program with components to evolve" and, through a "Distributed Controller Loop," it:

Samples a parent_program and inspirations from a database.

Builds a prompt using the parent program and inspirations.

An LLM generates a diff (proposed change).

The diff is applied to create a child_program.

An evaluator executes the child_program and returns results.

The child_program and its results are added to the database.
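The six steps above can be sketched as a toy, single-process Python loop. Everything here is a loud assumption rather than AlphaEvolve's code:

- `propose_diff` stands in for the LLM; it simply perturbs the constant the program returns.
- A "program" is a one-line `solve()` function.
- The database is a plain list.
- Parents are chosen as the best of a small random tournament.

Real candidate code should also run in a sandbox; `exec` here does no isolation.

```python
import random

def evaluate(program_src):
    """Hypothetical evaluator: execute the program and score it (higher is better)."""
    namespace = {}
    exec(program_src, namespace)      # no sandboxing in this toy
    x = namespace["solve"]()
    return {"score": -(x - 3.0) ** 2}  # toy objective: return a value close to 3.0

def build_prompt(parent_src, inspirations):
    """Hypothetical prompt: the parent plus earlier programs and their scores."""
    shown = "\n".join(f"# score={s['score']:.3f}\n{src}" for src, s in inspirations)
    return f"Improve this program:\n{parent_src}\nPrevious attempts:\n{shown}"

def propose_diff(prompt, parent_src, rng):
    """Stand-in for the LLM: a (search, replace) edit on the parent's source.
    A real LLM would read the prompt; this stub ignores it."""
    old = parent_src.split("return ")[1].strip()
    new = repr(float(old) + rng.gauss(0.0, 0.5))
    return old, new

def apply_diff(src, diff):
    old, new = diff
    return src.replace(old, new, 1)

def evolve(initial_src, iterations=200, seed=0):
    rng = random.Random(seed)
    database = [(initial_src, evaluate(initial_src))]
    for _ in range(iterations):
        # 1. Sample a parent (best of a small tournament) and inspirations.
        contenders = rng.sample(database, min(3, len(database)))
        parent_src, _ = max(contenders, key=lambda e: e[1]["score"])
        inspirations = rng.sample(database, min(2, len(database)))
        # 2. Build the prompt. 3. Ask the "LLM" for a diff.
        prompt = build_prompt(parent_src, inspirations)
        diff = propose_diff(prompt, parent_src, rng)
        # 4. Apply the diff to create the child program.
        child_src = apply_diff(parent_src, diff)
        # 5. Evaluate the child. 6. Store it with its results.
        database.append((child_src, evaluate(child_src)))
    return max(database, key=lambda e: e[1]["score"])

best_src, best_scores = evolve("def solve():\n    return 0.0\n")
print(best_scores)
```

Keeping every evaluated program, not just the current best, is what lets later prompts draw on earlier attempts as inspirations.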

The evaluation function (evaluate) is a user-provided Python function with a fixed input/output signature, returning a dictionary of scalars (scores). This function can range from simple checks to extensive computations, such as running a search algorithm or training an ML model.
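To illustrate the "dictionary of scalars" contract, here is a hypothetical evaluator for the circle-packing problem listed in Section III: maximize the sum of radii of circles inside the unit square. The function name matches the text, but two details are assumptions:

- The input format is a list of (x, y, r) triples.
- The metric names are our own.

An invalid packing scores zero rather than raising, so the search can keep going.

```python
import math

def evaluate(circles):
    """Score a packing of circles (x, y, r) in the unit square.
    Returns a dictionary of scalar metrics."""
    tol = 1e-9
    for x, y, r in circles:
        # Each circle needs a positive radius and must lie inside [0, 1] x [0, 1].
        if r <= 0 or x - r < -tol or x + r > 1 + tol or y - r < -tol or y + r > 1 + tol:
            return {"valid": 0.0, "sum_of_radii": 0.0}
    for i in range(len(circles)):
        for j in range(i + 1, len(circles)):
            (x1, y1, r1), (x2, y2, r2) = circles[i], circles[j]
            # Circles may touch but must not overlap.
            if math.hypot(x1 - x2, y1 - y2) < r1 + r2 - tol:
                return {"valid": 0.0, "sum_of_radii": 0.0}
    return {"valid": 1.0, "sum_of_radii": sum(r for _, _, r in circles)}

grid = [(0.25, 0.25, 0.25), (0.75, 0.25, 0.25), (0.25, 0.75, 0.25), (0.75, 0.75, 0.25)]
print(evaluate(grid))                                   # {'valid': 1.0, 'sum_of_radii': 1.0}
print(evaluate([(0.5, 0.5, 0.3), (0.6, 0.5, 0.3)]))     # overlapping, so scored invalid
```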

III. Key Discoveries and Applications

AlphaEvolve has demonstrated broad applicability across diverse computational and scientific domains, achieving novel, provably correct algorithms and significant optimizations.

A. Algorithmic and Infrastructural Optimizations:

Matrix Multiplication: Discovered a search algorithm that found a procedure to multiply two 4 × 4 complex-valued matrices using 48 scalar multiplications, marking "the first improvement, after 56 years, over Strassen’s algorithm in this setting." AlphaEvolve either matched or surpassed the best known rank for most matrix sizes considered.

The example diffs for this search program show extensive modifications to optimizer settings, weight initialization, loss functions (including novel "discretization loss" and "hallucination loss" terms), and hyperparameter sweeps. Reaching the final program took "15 mutations during the evolutionary process."

Data Center Scheduling: Developed a more efficient scheduling algorithm for Google's data centers. The problem is framed as vector bin packing, where AlphaEvolve optimizes a heuristic function that assigns priority scores to machines for pending jobs. This optimization is "effectively correct by construction." The heuristic only changes how already-available machines are ranked, so it cannot produce an invalid assignment.

Gemini Kernel Engineering: Achieved an average 23% kernel speedup across all kernels and a 1% reduction in Gemini’s overall training time by optimizing tiling heuristics for matrix multiplication operations in JAX/Pallas kernels. This reduced optimization time "from several months of dedicated engineering effort to just days of automated experimentation." The discovered heuristic is deployed in production, marking "a novel instance where Gemini, through the capabilities of AlphaEvolve, optimizes its own training process."

Hardware Circuit Design (TPUs): Found a "simple code rewrite that removed unnecessary bits" in a Verilog implementation of a key TPU arithmetic circuit, reducing area and power consumption while preserving functionality. This represents "Gemini’s first direct contribution to TPU arithmetic circuits."

FlashAttention Kernel Optimization: Directly optimized XLA-generated Intermediate Representations (IRs) for the FlashAttention kernel. These IRs are intended mainly for debugging rather than direct editing, and the compiler-generated code is already highly optimized. The modifications were confirmed numerically correct and rigorously validated by human experts.
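To make "tiling heuristic" concrete: such a heuristic maps a matrix-multiplication shape (m, n, k) to tile sizes. The sketch below is hypothetical throughout:

- The candidate tile sizes are invented.
- The on-chip memory budget is invented.
- The cost model (total padded work plus a fixed per-tile overhead) is invented.

It does not reflect the production heuristic or the Pallas API. In AlphaEvolve's setting, the body of a function like `choose_tiles` is what gets evolved. It is scored by measured kernel runtime across input shapes, not by a formula like this one.

```python
import math
from itertools import product

TILE_CANDIDATES = (128, 256, 512)  # hypothetical tile edge lengths
MEMORY_BUDGET = 2 ** 20            # hypothetical on-chip budget, in elements
TILE_OVERHEAD = 10_000             # hypothetical fixed cost per tile

def estimated_cost(m, n, k, tm, tn, tk):
    """Toy cost: total multiply-adds including padding, plus per-tile overhead."""
    tiles = math.ceil(m / tm) * math.ceil(n / tn) * math.ceil(k / tk)
    padded_work = tiles * tm * tn * tk
    return padded_work + tiles * TILE_OVERHEAD

def choose_tiles(m, n, k):
    """Pick (tm, tn, tk) whose working set fits the budget and minimizes cost."""
    best = None
    for tm, tn, tk in product(TILE_CANDIDATES, repeat=3):
        # The A, B and C tiles must fit on chip together.
        if tm * tk + tk * tn + tm * tn > MEMORY_BUDGET:
            continue
        cost = estimated_cost(m, n, k, tm, tn, tk)
        if best is None or cost < best[0]:
            best = (cost, (tm, tn, tk))
    if best is None:
        raise ValueError("no tile configuration fits the memory budget")
    return best[1]

print(choose_tiles(1024, 1024, 1024))  # (512, 512, 512)
print(choose_tiles(640, 1024, 1024))   # (128, 512, 512): avoids padding 640 up to 1024
```

The second call shows the trade-off the text describes. Larger tiles reduce overhead, but a tile that does not divide the dimension wastes work on padding.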

B. Mathematical Discoveries (SOTA-breaking constructions):

AlphaEvolve has produced improvements in various mathematical problems:

Autocorrelation Inequalities:

First Autocorrelation Inequality (C1): Improved the upper bound from 1.5098 to 1.5053 for non-negative functions.

Second Autocorrelation Inequality (C2): Improved the lower bound from 0.8892 to 0.8962.

Third Autocorrelation Inequality (C3): Improved the upper bound from 1.4581 to 1.4557 for functions allowing positive and negative values.

Uncertainty Inequality (C4): Refined constants to improve the upper bound from 0.3523 to 0.3521.

Erdős’s Minimum Overlap Problem (C5): Found a step function that slightly improved the upper bound from 0.380927 to 0.380924.

Sums and Differences of Finite Sets (C6): Addressed a problem relating to the sizes of sumsets and difference sets of finite sets of integers.

Geometry and Packing Problems:

Packing Unit Regular Hexagons: Improved best known bounds for packing 11 and 12 unit hexagons into a larger regular hexagon, reducing side lengths from 3.943 to 3.931 (for 11 hexagons) and 4.0 to 3.942 (for 12 hexagons).

Minimizing Ratio of Max to Min Distance: Found new constructions for packing points in 2D and 3D space, improving the ratio from √12.890 to √12.889266112 (16 points in 2D) and from √4.168 to √4.165849767 (14 points in 3D).

Heilbronn Problem (Triangles & Convex Regions): Improved bounds for maximizing the area of the smallest triangle formed by points within a unit-area triangle (for n=11, from 0.036 to 0.0365) and within convex regions (for n=13, from 0.0306 to 0.0309; for n=14, from 0.0277 to 0.0278).

Kissing Number in Dimension 11: Improved the lower bound on the kissing number from 592 to 593 by finding a configuration of 593 non-overlapping unit spheres.

Packing Circles to Maximize Sum of Radii: Found new constructions for packing circles within a unit square. These improved the sum of radii for 26 circles (from 2.6340 to 2.6358) and for 32 circles. A further construction improved the result for 21 circles packed in a rectangle.
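Several of these upper bounds are certified by exhibiting an explicit function and computing a ratio. We assume the standard formulation of the first autocorrelation inequality: C1 is the largest constant with max_t (f*f)(t) ≥ C1 (∫ f)² for every non-negative f supported on [-1/4, 1/4]. Any particular f then certifies the upper bound C1 ≤ max_t (f*f)(t) / (∫ f)².

Take a step function with n equal steps of width w = 1/(2n) and heights h. Its autoconvolution is piecewise linear and equals w times the discrete convolution h*h at the grid points. The ratio therefore reduces to 2n·max(h*h)/(Σh)². The sketch below is a minimal NumPy version of that evaluator. The heights are arbitrary examples, not AlphaEvolve's construction.

```python
import numpy as np

def c1_upper_bound(heights):
    """Ratio max_t (f*f)(t) / (integral of f)^2 for a non-negative step function f
    on [-1/4, 1/4] with len(heights) equal steps. Any such f gives C1 <= ratio."""
    h = np.asarray(heights, dtype=float)
    if np.any(h < 0) or h.sum() == 0:
        raise ValueError("heights must be non-negative and not all zero")
    n = len(h)
    width = 0.5 / n                           # the support [-1/4, 1/4] has length 1/2
    peak = width * np.convolve(h, h).max()    # max of the piecewise-linear f*f
    integral = width * h.sum()
    return peak / integral ** 2

print(c1_upper_bound([1.0]))            # constant function: 2.0
print(c1_upper_bound([1.0, 2.0, 1.0]))  # a (poor) non-constant example
```

The ratio is unchanged by scaling the heights. A search over step functions therefore only has to explore their shape, which is the kind of space an evolved program can explore at scale.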

IV. Ablation Studies

Ablation studies on matrix multiplication tensor decomposition and the kissing number problem highlight the importance of AlphaEvolve's key components:

Evolutionary Approach: The "No evolution" setting, which repeatedly feeds the same initial program to the LLM, performs significantly worse, demonstrating the critical role of the evolutionary process in continuous improvement.

Context in Prompts: The "No context in the prompt" setting also shows degraded performance, underscoring the benefit of providing "problem-specific context" to the LLM.

Full-file Evolution: The "No full-file evolution" setting also degrades performance, indicating that the ability to evolve entire code files is crucial for complex tasks.

Large LLMs: AlphaEvolve "benefits from SOTA LLMs" unlike some prior methods that saw "no benefit from larger" LLMs, indicating that powerful base models are an important component.

V. Strategic Implications

AlphaEvolve represents a significant step towards automated scientific and algorithmic discovery. Its ability to:

Optimize critical infrastructure (data center scheduling, hardware design, LLM training) directly contributes to efficiency and cost savings for large-scale computational operations.

Make foundational mathematical discoveries demonstrates its potential to advance theoretical knowledge in fields like combinatorics, geometry, and number theory.

Bridge the gap between LLM code generation and robust, verifiable solutions through its evolutionary and evaluation pipeline makes its outputs dependable.

Reduce engineering bottlenecks by automating complex optimization tasks frees up human experts for higher-level problems.

Communicate in standard engineering languages (e.g., Verilog) fosters trust and simplifies adoption in production environments.

The continuous self-improvement loop, where Gemini (through AlphaEvolve) optimizes its own training process, points towards a future of increasingly autonomous and self-improving AI systems. This paradigm could revolutionize how we approach complex problem-solving in science and engineering.

Quiz & Answer Key

Answer each question in 2-3 sentences.

What is the primary objective of AlphaEvolve as an evolutionary coding agent?

How does AlphaEvolve address and prevent "hallucinations" or incorrect suggestions from the underlying LLM?

Name three distinct real-world computational problems at Google where AlphaEvolve demonstrated significant improvements.

In the context of matrix multiplication, what notable historical improvement did AlphaEvolve achieve over Strassen's algorithm?

What is the "evaluate" function in AlphaEvolve, and why is its fixed input/output signature important?

Explain the concept of "tiling strategy" in kernel optimization and how AlphaEvolve contributes to it.

How did AlphaEvolve contribute to the design of Google's Tensor Processing Units (TPUs)?

Describe the "No evolution" ablation study and what it aims to understand regarding AlphaEvolve's components.

AlphaEvolve improved the lower bound for the kissing number problem in 11 dimensions. What was the previous record and what was the new record found by AlphaEvolve?

Beyond computational infrastructure, list two categories of mathematical problems where AlphaEvolve discovered constructions that beat the previous state of the art.

Answer Key

The primary objective of AlphaEvolve is to substantially enhance the capabilities of state-of-the-art Large Language Models (LLMs) for challenging tasks like open scientific problems and optimizing critical computational infrastructure. It does this by autonomously improving algorithms through direct code changes using an evolutionary approach.

AlphaEvolve prevents incorrect suggestions from the base LLM by grounding the LLM-directed evolution process with code execution and automatic evaluation. This evaluation mechanism provides continuous feedback, allowing AlphaEvolve to avoid propagating invalid code changes.

AlphaEvolve developed a more efficient scheduling algorithm for data centers, found a functionally equivalent simplification in the circuit design of hardware accelerators (TPUs), and accelerated the training of the Gemini LLM itself.

AlphaEvolve discovered a search algorithm that found a procedure to multiply two 4x4 complex-valued matrices using 48 scalar multiplications, offering the first improvement in 56 years over Strassen’s algorithm in this specific setting.

The evaluate function is a user-provided Python function with a fixed input/output signature that returns a dictionary of scalars. Its importance lies in providing a quantifiable score for the evolved code, enabling AlphaEvolve to receive feedback and iteratively improve the algorithm.

The tiling strategy in kernel optimization involves dividing large matrix multiplication computations into smaller subproblems to better balance computation with data movement, crucial for accelerating overall computation. AlphaEvolve optimizes these tiling heuristics by iteratively exploring and refining candidate code to minimize kernel runtime on various input shapes.

AlphaEvolve optimized an already highly optimized Verilog implementation of a key TPU arithmetic circuit, finding a simple code rewrite that reduced both area and power consumption while preserving functionality. This marks Gemini's first direct contribution to TPU arithmetic circuits via AlphaEvolve.

The "No evolution" ablation study considers an alternative approach where the same initial program is repeatedly fed to the language model, without storing and utilizing previously generated programs. It aims to understand the importance and efficacy of AlphaEvolve's evolutionary approach in achieving its results.

For the kissing number problem in 11 dimensions, the previous record was 592 non-overlapping unit spheres. AlphaEvolve improved this by finding a configuration of 593 such spheres, thus raising the lower bound.

AlphaEvolve discovered mathematical constructions that beat the previous state of the art in areas such as analysis (e.g., autocorrelation and uncertainty inequalities) and geometry (e.g., packing problems like hexagons and circles, and minimizing maximum-to-minimum distance ratios).

Essay Questions

Compare and contrast AlphaEvolve with FunSearch, highlighting their key differences in capabilities, application scope, and resource requirements. Discuss how these differences enable AlphaEvolve to tackle more complex and broader scientific/engineering problems.

Analyze the role of "evolutionary computation" and "LLM-based code generation" in AlphaEvolve's success. Explain how these two components work in conjunction to achieve iterative algorithm improvement and discovery, referencing specific examples from the text.

Discuss the impact of AlphaEvolve on Google's internal computational infrastructure, providing detailed examples from the paper (e.g., data center scheduling, Gemini kernel engineering, TPU circuit design). How does AlphaEvolve accelerate the design process and improve efficiency in these critical areas?

Beyond practical engineering applications, elaborate on AlphaEvolve's contributions to fundamental mathematical and computer science problems. Choose at least three distinct mathematical problem categories mentioned in the text and describe the specific improvements AlphaEvolve made, including any historical context provided.

Evaluate the significance of AlphaEvolve's ability to communicate suggested changes directly in standard engineering languages (like Verilog for hardware design). Discuss how this feature fosters trust and simplifies adoption, contributing to the system's real-world deployment and impact.

Glossary of Key Terms

AlphaEvolve: An evolutionary coding agent developed by Google DeepMind that uses Large Language Models (LLMs) and an evolutionary approach to autonomously improve algorithms by making direct changes to code.

Large Language Models (LLMs): Advanced AI models trained on vast amounts of text data, capable of understanding, generating, and processing human language, and in AlphaEvolve's case, generating and critiquing code.

Evolutionary Computation: A paradigm inspired by biological evolution, where a population of candidate solutions is iteratively improved through processes like mutation, crossover, and selection based on a fitness function (in AlphaEvolve's case, code evaluation).

Code Superoptimization Agent: A system designed to find highly optimized versions of code, often aiming for improvements beyond what human experts or traditional compilers can achieve. AlphaEvolve functions as such an agent.

Automated Evaluation: The process by which AlphaEvolve automatically runs and scores candidate algorithms or code changes to determine their performance and correctness. This feedback loop is crucial for the evolutionary process.

Heuristic Function: A rule or algorithm designed to solve a problem more quickly or efficiently, especially when exact solutions are impractical. AlphaEvolve can discover and optimize such functions, like those used in job scheduling or kernel tiling.

Kernel Optimization: The process of improving the performance of "kernels," which are small, highly optimized programs that perform critical computational tasks on hardware accelerators like GPUs or TPUs.

Tiling Strategy: A technique in parallel computing and kernel optimization where a large computation (like matrix multiplication) is broken down into smaller, manageable "tiles" or blocks to improve data locality and memory access patterns.

Tensor Processing Units (TPUs): Specialized AI accelerators developed by Google for machine learning workloads, particularly neural networks. AlphaEvolve was used to optimize circuits within TPUs.

Register-Transfer Level (RTL) Optimization: A critical step in chip design that involves manually rewriting hardware descriptions (often in languages like Verilog) to improve metrics such as power consumption, performance, and silicon area.

Matrix Multiplication Algorithms: Methods for multiplying matrices. For 4x4 complex-valued matrices, AlphaEvolve discovered an algorithm that needs 48 scalar multiplications, improving on the 49 of Strassen's algorithm.

Kissing Number Problem: A mathematical packing problem that asks for the maximum number of non-overlapping unit spheres that can touch a central unit sphere in a given dimension. AlphaEvolve improved the lower bound for this problem in 11 dimensions.

Erdős's Minimum Overlap Problem: A problem in combinatorics and number theory related to the overlap of sets, where AlphaEvolve established a new upper bound.

Heilbronn Problem: A problem in discrete geometry concerning point sets in a given region, aiming to maximize the area of the smallest triangle formed by any three points. AlphaEvolve improved bounds for variants of this problem.

Ablation Study: An experimental technique in machine learning where parts of a system are removed or "ablated" to understand their contribution to the overall performance. AlphaEvolve's paper includes ablation studies on its evolutionary approach and context usage.

Hallucination (in LLMs): A phenomenon where an LLM generates outputs that are plausible but factually incorrect or inconsistent with the provided context. AlphaEvolve's evaluation mechanism aims to mitigate this.

Verilog: A hardware description language (HDL) for modeling electronic systems at the register-transfer level, used in the design of digital circuits and systems. AlphaEvolve generated Verilog code changes.

Timeline

1955:

Erdős's Minimum Overlap Problem: P. Erdős introduces the Minimum Overlap Problem.

1969:

Strassen's Algorithm: V. Strassen publishes an algorithm that multiplies 2x2 matrices with 7 multiplications instead of 8. Applied recursively, it multiplies two 4x4 matrices using 49 scalar multiplications. This remained the state of the art for 4x4 complex-valued matrices for 56 years.

1971:

Hopcroft and Kerr's Matrix Multiplication: J. E. Hopcroft and L. R. Kerr publish work on minimizing the number of multiplications necessary for matrix multiplication, noting 33 multiplications for <2,4,5> matrices.

1976:

Laderman's Matrix Multiplication: J. D. Laderman publishes a non-commutative algorithm for multiplying 3x3 matrices using 23 multiplications.

1987:

Superoptimizer Concept: H. Massalin introduces the superoptimizer in "Superoptimizer: A look at the smallest program."

1994:

Genetic Programming: J. R. Koza publishes work on genetic programming as a means for programming computers by natural selection.

2007:

Sums and Differences of Finite Sets: K. Gyarmati, F. Hennecart, and I. Z. Ruzsa publish on sums and differences of finite sets.

2009:

Symbolic Regression: M. Schmidt and H. Lipson publish work on distilling free-form natural laws from experimental data, relevant to symbolic regression.

2010:

Autoconvolution Bounds: M. Matolcsi and C. Vinuesa publish improved bounds on the supremum of autoconvolutions.

Generalized Sidon Sets: C. Vinuesa del Rio publishes a PhD thesis on Generalized Sidon sets.

2012:

Kissing Number Survey: P. Boyvalenkov, S. Dodunekov, and O. Musin publish a survey on kissing numbers.

2013:

Bilinear Complexity of Matrix Multiplication: A. V. Smirnov publishes on the bilinear complexity and practical algorithms for matrix multiplication.

Stochastic Superoptimization: E. Schkufza, R. Sharma, and A. Aiken publish on stochastic superoptimization.

Foundations of Genetic Programming: W. B. Langdon and R. Poli publish "Foundations of Genetic Programming."

2015:

Adam Optimizer: D. P. Kingma and J. Ba introduce the Adam optimizer.

Google's Borg Cluster Management: A. Verma et al. publish on large-scale cluster management at Google with Borg.

2016:

Minimum Overlap Problem Revisited: J. K. Haugland publishes on the minimum overlap problem revisited.

2017:

Hermite Polynomials and Uncertainty Principle: F. Gonçalves, D. Oliveira e Silva, and S. Steinerberger publish on Hermite polynomials, linear flows on the torus, and an uncertainty principle for roots.

Transformer Architecture: A. Vaswani et al. publish "Attention is all you need," introducing the transformer architecture.

2018:

JAX Release: J. Bradbury et al. release JAX, a library for composable transformations of Python+NumPy programs.

2021:

AlphaFold: J. Jumper et al. publish "Highly accurate protein structure prediction with AlphaFold," a major breakthrough in protein folding.

Biological Structure and Function with LLMs: A. Rives et al. publish on biological structure and function emerging from scaling unsupervised learning to protein sequences.

Smirnov's Bilinear Algorithms: A. Smirnov publishes "Several bilinear algorithms for matrix multiplication problems <3,P,Q>."

2022:

FlashAttention: T. Dao et al. publish "FlashAttention: Fast and memory-efficient exact attention with IO-awareness."

Kissing Number Lower Bound: M. Ganzhinov publishes a lower bound of 592 for the kissing number in 11 dimensions.

AlphaCode: Y. Li et al. publish "Competition-level code generation with AlphaCode."

Faster Matrix Multiplication with Reinforcement Learning (AlphaTensor): A. Fawzi et al. publish "Discovering faster matrix multiplication algorithms with reinforcement learning" (AlphaTensor). This work found a rank-47 algorithm for <3,4,5> matrices and a rank-76 algorithm for <4,5,5> matrices.

Evolving Symbolic Density Functionals: H. Ma et al. publish on evolving symbolic density functionals.

Controllable Protein Design with Language Models: N. Ferruz and B. Höcker publish on controllable protein design with language models.

Smirnov's Bilinear Algorithm for 4x4x9 matrices: A. Smirnov publishes "Bilinear algorithm for matrix multiplication <4x4x9; 104>."

2023:

Autonomous Chemical Research with LLMs: D. A. Boiko et al. publish on autonomous chemical research with large language models.

Symbolic Discovery of Optimization Algorithms: X. Chen et al. publish on symbolic discovery of optimization algorithms.

Promptbreeder: C. Fernando et al. publish "Promptbreeder: Self-referential self-improvement via prompt evolution."

Learning Skillful Weather Forecasting: R. Lam et al. publish on learning skillful medium-range global weather forecasting.

Faster Sorting Algorithms with Deep RL: D. J. Mankowitz et al. publish "Faster sorting algorithms discovered using deep reinforcement learning."

Reflexion: N. Shinn et al. publish "Reflexion: Language agents with verbal reinforcement learning."

FunSearch: B. Romera-Paredes et al. publish "Mathematical discoveries from program search with large language models" (FunSearch). A predecessor to AlphaEvolve, FunSearch evolves single Python functions of 10 to 20 lines and requires fast evaluation.

Evolutionary-scale Protein Structure Prediction: Z. Lin et al. publish on evolutionary-scale prediction of atomic-level protein structure with a language model.

AutoGen: Q. Wu et al. publish "AutoGen: Enabling next-gen LLM applications via multi-agent conversation."

DrugAssist: G. Ye et al. publish "DrugAssist: A large language model for molecule optimization."

ReAct: S. Yao et al. publish "ReAct: Synergizing reasoning and acting in language models."

LeanDojo: K. Yang et al. publish "LeanDojo: Theorem proving with retrieval-augmented language models."

2024:

Learning High-Accuracy Error Decoding for Quantum Processors: J. Bausch et al. publish on learning high-accuracy error decoding for quantum processors.

Augmenting LLMs with Chemistry Tools: A. M. Bran et al. publish on augmenting large language models with chemistry tools.

Evolving Code with LLM: E. Hemberg et al. publish on evolving code with a large language model.

CRISPR-GPT: K. Huang et al. publish "CRISPR-GPT: An LLM agent for automated design of gene-editing experiments."

LLMatDesign: S. Jia et al. publish "LLMatDesign: Autonomous materials discovery with large language models."

LLMs as Evolution Strategies: R. Lange et al. publish "Large language models as evolution strategies."

LLMs as Data Analysts: Z. Rasheed et al. publish "Can large language models serve as data analysts? A multi-agent assisted approach for qualitative data analysis."

LLM Guided Evolution: C. Morris et al. publish "LLM guided evolution: The automation of models advancing models."

SciMON: Q. Wang et al. publish "SciMON: Scientific inspiration machines optimized for novelty."

LLMs in Bioinformatics: O. A. Sarumi and D. Heider publish on large language models and their applications in bioinformatics.

LLMs for Scientific Hypotheses Discovery: Z. Yang et al. publish on large language models for automated open-domain scientific hypotheses discovery.

ReEvo: H. Ye et al. publish "ReEvo: Large language models as hyper-heuristics with reflective evolution."

Interesting Scientific Idea Generation: X. Gu and M. Krenn publish on interesting scientific idea generation using knowledge graphs and LLMs.

Embracing Foundation Models for Scientific Discovery: S. Guo et al. publish on embracing foundation models for advancing scientific discovery.

Genetic Programming for Production Scheduling: F. Zhang et al. publish "Genetic Programming for Production Scheduling."

Survey on Hallucination in LLMs: L. Huang et al. publish a survey on hallucination in large language models.

2025:

AlphaEvolve White Paper Publication: The white paper "AlphaEvolve: A coding agent for scientific and algorithmic discovery" is presented.

AlphaEvolve - Matrix Multiplication Discovery: AlphaEvolve discovers a search algorithm that finds a procedure to multiply two 4x4 complex-valued matrices using 48 scalar multiplications, the first improvement over Strassen’s algorithm in this setting after 56 years. It also surpasses state-of-the-art solutions for many other matrix multiplication tensor decompositions.
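Why 48 is a milestone: Strassen's 1969 scheme multiplies 2x2 matrices with 7 multiplications instead of 8, and applying it recursively to a 4x4 matrix (viewed as a 2x2 grid of 2x2 blocks) costs 7 x 7 = 49 scalar multiplications, against 64 for the schoolbook method. The Python sketch below is our own illustration, not code from the white paper: it runs recursive Strassen on complex-valued 4x4 inputs with a counter so you can check the 49 figure. The helper names (`split`, `strassen`, `naive`) are hypothetical, and AlphaEvolve's 48-multiplication algorithm is not reproduced here.

```python
# Hypothetical illustration: recursive Strassen with a multiplication counter.

def split(m):
    """Split a square matrix (list of lists) of even size into four quadrants."""
    h = len(m) // 2
    return ([row[:h] for row in m[:h]], [row[h:] for row in m[:h]],
            [row[:h] for row in m[h:]], [row[h:] for row in m[h:]])


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def strassen(a, b, counter):
    """Multiply n x n matrices (n a power of 2); counter[0] tallies scalar multiplications."""
    n = len(a)
    if n == 1:
        counter[0] += 1
        return [[a[0][0] * b[0][0]]]
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # Strassen's seven block products (additions and subtractions are not counted).
    m1 = strassen(add(a11, a22), add(b11, b22), counter)
    m2 = strassen(add(a21, a22), b11, counter)
    m3 = strassen(a11, sub(b12, b22), counter)
    m4 = strassen(a22, sub(b21, b11), counter)
    m5 = strassen(add(a11, a12), b22, counter)
    m6 = strassen(sub(a21, a11), add(b11, b12), counter)
    m7 = strassen(sub(a12, a22), add(b21, b22), counter)
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    # Reassemble the four output quadrants into one matrix.
    top = [r1 + r2 for r1, r2 in zip(c11, c12)]
    bottom = [r1 + r2 for r1, r2 in zip(c21, c22)]
    return top + bottom


def naive(a, b):
    """Schoolbook multiplication: n**3 scalar multiplications."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


a = [[complex(i, j) for j in range(4)] for i in range(4)]
b = [[complex(j - i, 1) for j in range(4)] for i in range(4)]
count = [0]
c = strassen(a, b, count)
print(count[0])  # 49
```

Only multiplications are counted: Strassen trades the eighth multiplication for extra additions, which pays off because, when the scheme is applied recursively to large blocks, each saved block multiplication is far more expensive than the added block additions.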

AlphaEvolve - Data Center Scheduling: AlphaEvolve develops a more efficient scheduling algorithm for data centers, discovering a heuristic function tailored to Google’s workloads.
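Scheduling of this kind is commonly framed as online vector bin packing (the episode index lists it), where a heuristic scores each candidate machine for an incoming job and the job goes to the highest-scoring machine. The sketch below shows the general shape of such a heuristic using a plain best-fit rule; it is a hypothetical stand-in, not the function AlphaEvolve discovered for Google's workloads. It assumes job requests and free capacity are already normalized to fractions of a machine and considers only CPU and memory.

```python
# Hypothetical best-fit scoring heuristic; not the heuristic AlphaEvolve found.
from dataclasses import dataclass


@dataclass
class Resources:
    cpu: float  # assumed normalized to a fraction of one machine
    mem: float  # assumed normalized to a fraction of one machine


def priority_score(job: Resources, free: Resources) -> float:
    """Higher is better; -inf means the job does not fit on this machine."""
    if job.cpu > free.cpu or job.mem > free.mem:
        return float("-inf")
    # Best fit: prefer the machine left with the least unused capacity.
    leftover = (free.cpu - job.cpu) + (free.mem - job.mem)
    return -leftover


def place(job: Resources, machines: list) -> int:
    """Place the job on the highest-scoring machine; return its index, or -1 if none fits."""
    scores = [priority_score(job, m) for m in machines]
    best = max(range(len(machines)), key=lambda i: scores[i], default=-1)
    if best == -1 or scores[best] == float("-inf"):
        return -1
    # Update that machine's free capacity to reflect the placement.
    m = machines[best]
    machines[best] = Resources(m.cpu - job.cpu, m.mem - job.mem)
    return best


machines = [Resources(0.5, 0.5), Resources(0.3, 0.3), Resources(0.1, 0.9)]
print(place(Resources(0.25, 0.25), machines))  # 1 (the tightest fit)
```

Because the scoring function is a small, self-contained piece of code that can be evaluated by simulation, it is the kind of component an evolutionary search can rewrite and test without touching the rest of the scheduler.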

AlphaEvolve - Hardware Accelerator Circuit Design: AlphaEvolve finds a functionally equivalent simplification in the circuit design of hardware accelerators (TPUs), specifically within the matrix multiplication unit. This improvement is integrated into an upcoming TPU.

AlphaEvolve - Gemini LLM Training Acceleration: AlphaEvolve accelerates the training of Gemini, the LLM that underpins AlphaEvolve itself, by optimizing tiling heuristics for a critical matrix multiplication kernel. This yields an average 23% kernel speedup and a 1% reduction in Gemini's overall training time, and cuts kernel optimization time from months to days.
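Tiling splits one large matrix multiplication into smaller blocks, and a tiling heuristic chooses block sizes from the problem shape. The sketch below is a hypothetical, pure-Python illustration of that idea: the `choose_tile` rule (the largest divisor of a dimension no bigger than a cap) is our assumption, the real Gemini kernels target accelerators, and the heuristic AlphaEvolve evolved is not shown here.

```python
# Hypothetical tiling heuristic plus a blocked matrix multiplication.

def choose_tile(dim: int, max_tile: int) -> int:
    """Largest tile size <= max_tile that divides dim evenly (assumed rule)."""
    for t in range(min(dim, max_tile), 0, -1):
        if dim % t == 0:
            return t
    return 1


def tiled_matmul(a, b, max_tile=4):
    """Multiply an m x k matrix by a k x n matrix (lists of lists) tile by tile."""
    m, k, n = len(a), len(b), len(b[0])
    tm, tk, tn = choose_tile(m, max_tile), choose_tile(k, max_tile), choose_tile(n, max_tile)
    c = [[0] * n for _ in range(m)]
    # Visit output tiles, accumulating partial products over tiles of the shared dimension.
    for i0 in range(0, m, tm):
        for j0 in range(0, n, tn):
            for p0 in range(0, k, tk):
                for i in range(i0, i0 + tm):
                    for j in range(j0, j0 + tn):
                        c[i][j] += sum(a[i][p] * b[p][j] for p in range(p0, p0 + tk))
    return c


print(choose_tile(12, 8))  # 6
```

The tile sizes change only the order of the work, never the result, which is why a tiling heuristic can be scored automatically: check the output against a reference multiplication, then measure runtime.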

AlphaEvolve - Mathematical Discoveries: AlphaEvolve discovers novel, provably correct algorithms surpassing state-of-the-art solutions in various mathematical and computer science problems:

Autocorrelation Inequalities: Improves upper and lower bounds for three different autocorrelation inequalities.

Uncertainty Inequality: Improves the upper bound for a specific uncertainty inequality.

Erdős' Minimum Overlap Problem: Establishes a new upper bound, slightly improving upon the previous record.

Geometry and Packing - Kissing Number Problem (11D): Improves the lower bound on the kissing number in 11 dimensions from 592 to 593.

Packing Hexagons: Improves the best-known bounds for packing 11 and 12 unit hexagons into a larger regular hexagon.

Minimizing Max/Min Distance Ratio: Finds new constructions improving best-known bounds for packing points to minimize the ratio of maximum to minimum pairwise distances in 2D and 3D.

Heilbronn Problem (Triangles and Convex Regions): Improves the state of the art for the Heilbronn problem for triangles (n=11) and for convex regions (n=13, n=14).

Packing Circles: Finds new constructions improving best-known bounds for packing circles inside a unit square (26 and 32 circles) and a rectangle (21 circles) to maximize the sum of their radii.
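Results like these are only kept after an automatic evaluator scores each candidate construction. The hypothetical scoring functions below (not the white paper's evaluators) cover two of the objectives listed above: the smallest triangle area among a set of points, which the Heilbronn problem seeks to maximize, and the ratio of maximum to minimum pairwise distance, which is to be minimized. A full evaluator would also check that every point lies inside the required region; that step is omitted here.

```python
# Hypothetical evaluators for two geometric objectives.
from itertools import combinations
import math


def min_triangle_area(points):
    """Smallest area over all triangles formed by triples of 2D points (to be maximized)."""
    def area(p, q, r):
        # Half the absolute cross product of the two edge vectors from p.
        return abs((q[0] - p[0]) * (r[1] - p[1]) - (r[0] - p[0]) * (q[1] - p[1])) / 2
    return min(area(p, q, r) for p, q, r in combinations(points, 3))


def distance_ratio(points):
    """Largest over smallest pairwise Euclidean distance, in any dimension (to be minimized)."""
    dists = [math.dist(p, q) for p, q in combinations(points, 2)]
    return max(dists) / min(dists)


square = [(0, 0), (1, 0), (1, 1), (0, 1)]
print(min_triangle_area(square))  # 0.5
print(distance_ratio(square))
```

Adding a point at the square's center drops the Heilbronn score to zero, because it lies on a diagonal and forms a degenerate triangle; an evaluator of this kind catches such candidates immediately.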

AlphaEvolve - XLA IR Optimization: AlphaEvolve is challenged to directly optimize XLA-generated IRs for the FlashAttention kernel, aiming to minimize execution time for transformer models used in inference on GPUs.

Gemini 2.5 Release: The Gemini team releases Gemini 2.5, "Our most intelligent AI model."

Chemical Reasoning in LLMs: A. M. Bran et al. publish on chemical reasoning in LLMs unlocking steerable synthesis planning and reaction mechanism elucidation.

AI Co-scientist: J. Gottweis et al. publish "Towards an AI co-scientist."

LLM-driven Efficient Code Optimizer (ECO): H. Lin et al. publish "ECO: An LLM-driven efficient code optimizer for warehouse scale computers."

The AI CUDA Engineer: R. T. Lange et al. publish "The AI CUDA engineer: Agentic CUDA kernel discovery, optimization and composition."

LLM-Feynman: Z. Song et al. publish "LLM-Feynman: Leveraging large language models for universal scientific formula and theory discovery."

LLM-SR: P. Shojaee et al. publish "LLM-SR: Scientific equation discovery via programming with large language models."

LLM-based Scientific Agents Survey: S. Ren et al. publish "Towards scientific intelligence: A survey of LLM-based scientific agents."

Nature Language Model: Y. Xia et al. publish "Nature language model: Deciphering the language of nature for scientific discovery."

MOOSE-Chem: Z. Yang et al. publish "MOOSE-Chem: Large language models for rediscovering unseen chemistry scientific hypotheses."

Multi-objective Evolution of Heuristic: S. Yao et al. publish "Multi-objective evolution of heuristic using large language model."

Review of LLMs and Autonomous Agents in Chemistry: M. Caldas Ramos et al. publish "A review of large language models and autonomous agents in chemistry."

Discovering Symbolic Cognitive Models: P. S. Castro et al. publish on discovering symbolic cognitive models from human and animal behavior.

Generative Modelling for Mathematical Discovery: J. S. Ellenberg et al. publish on generative modelling for mathematical discovery.

Quantum Circuit Optimization with AlphaTensor: F. J. R. Ruiz et al. publish "Quantum Circuit Optimization with AlphaTensor."

Efficient Evolutionary Search over Chemical Space: H. Wang et al. publish on efficient evolutionary search over chemical space with large language models.

Flip Graphs and Matrix Multiplication: J. Moosbauer and M. Poole publish "Flip graphs with symmetry and new matrix multiplication schemes."

LLMs Ready for Materials Discovery?: S. Miret and N. M. A. Krishnan publish on whether LLMs are ready for real-world materials discovery.

LLMs Generate Novel Research Ideas?: C. Si, D. Yang, and T. Hashimoto publish on whether LLMs can generate novel research ideas.

GRAIL: S. Verma et al. publish on graph edit distance and node alignment using LLM-generated code.

Cast of Characters

Google DeepMind Researchers (authors of the AlphaEvolve white paper; "equal contributions" indicated by *):

Alexander Novikov*: A key contributor to the AlphaEvolve project.

Ngân Vũ*: A key contributor to the AlphaEvolve project.

Marvin Eisenberger*: A key contributor to the AlphaEvolve project.

Emilien Dupont*: A key contributor to the AlphaEvolve project.

Po-Sen Huang*: A key contributor to the AlphaEvolve project.

Adam Zsolt Wagner*: A key contributor to the AlphaEvolve project.

Sergey Shirobokov*: A key contributor to the AlphaEvolve project.

Borislav Kozlovskii*: A key contributor to the AlphaEvolve project.

Matej Balog*: A key contributor to the AlphaEvolve project, also listed as a senior author.

Francisco J. R. Ruiz: An author of the AlphaEvolve white paper.

Abbas Mehrabian: An author of the AlphaEvolve white paper.

M. Pawan Kumar: An author of the AlphaEvolve white paper.

Abigail See: An author of the AlphaEvolve white paper.

Swarat Chaudhuri: An author of the AlphaEvolve white paper.

George Holland: An author of the AlphaEvolve white paper.

Alex Davies: An author of the AlphaEvolve white paper, also contributed to "Advancing mathematics by guiding human intuition with AI."

Sebastian Nowozin: An author of the AlphaEvolve white paper.

Pushmeet Kohli: An author of the AlphaEvolve white paper, and also a key figure in several referenced works like AlphaFold, AlphaTensor, and "Advancing mathematics by guiding human intuition with AI."

Other Individuals Mentioned:

P. Erdős: A Hungarian mathematician who introduced the minimum overlap problem in combinatorics.

V. Strassen: A German mathematician who developed Strassen's algorithm for matrix multiplication (1969), a foundational work that AlphaEvolve improved upon for 4x4 complex-valued matrices after 56 years.

J. E. Hopcroft: Co-author of a 1971 paper on minimizing multiplications for matrix multiplication.

L. R. Kerr: Co-author of a 1971 paper on minimizing multiplications for matrix multiplication.

J. D. Laderman: Author of a 1976 paper on a non-commutative algorithm for 3x3 matrix multiplication.

H. Massalin: Introduced the concept of "Superoptimizer" in 1987.

J. R. Koza: Published foundational work on Genetic Programming in 1994.

D. P. Kingma: Co-creator of the Adam optimizer (2015).

J. Ba: Co-creator of the Adam optimizer (2015).

J. Bradbury: Co-creator of JAX (2018).

D. Hassabis: Co-founder and CEO of Google DeepMind; co-author on several cited works, including AlphaFold and AlphaTensor.

D. Silver: Co-author on AlphaFold and AlphaTensor.

O. Vinyals: Co-author on AlphaFold and AlphaCode.

B. Romera-Paredes: Lead author of the FunSearch paper, a predecessor to AlphaEvolve.

A. Fawzi: Co-author on FunSearch and AlphaTensor.

Yanislav Donchev: Led the application of AlphaEvolve to Gemini kernel engineering.

Richard Tanburn: Made significant contributions to the Gemini kernel engineering application.

Justin Chiu: Provided helpful advice regarding AlphaEvolve.

Julian Walker: Provided helpful advice regarding AlphaEvolve.

Jean-Baptiste Alayrac: Reviewed the AlphaEvolve work.

Dmitry Lepikhin: Reviewed the AlphaEvolve work.

Sebastian Borgeaud: Reviewed the AlphaEvolve work.

Jeff Dean: Google's Chief Scientist; reviewed the AlphaEvolve work.

Timur Sitdikov: Led the application of AlphaEvolve to TPU circuit design.

Georges Rotival: Provided the circuit evaluation infrastructure for TPU circuit design.

Kirk Sanders: Verified and validated results in the TPU design application.

Srikanth Dwarakanath: Verified and validated results in the TPU design application.

Indranil Chakraborty: Verified and validated results in the TPU design application.

Christopher Clark: Verified and validated results in the TPU design application.

Vinod Nair: Provided helpful advice regarding TPU circuit design.

Sergio Guadarrama: Provided helpful advice regarding TPU circuit design.

Dimitrios Vytiniotis: Provided helpful advice regarding TPU circuit design.

Daniel Belov: Provided helpful advice regarding TPU circuit design.

Kerry Takenaka: Reviewed the AlphaEvolve work related to TPU design.

Sridhar Lakshmanamurthy: Reviewed the AlphaEvolve work related to TPU design.

Parthasarathy Ranganathan: Reviewed the AlphaEvolve work related to TPU design.

Amin Vahdat: Reviewed the AlphaEvolve work related to TPU design.

Organizations/Entities:

Google DeepMind: The primary research organization responsible for developing and presenting AlphaEvolve.

Google: The parent company, benefiting from AlphaEvolve's optimizations in data centers and hardware.

Gemini: A large language model developed by Google DeepMind, whose training process was optimized by AlphaEvolve.

TPU (Tensor Processing Unit): Google's specialized hardware accelerators, where AlphaEvolve contributed to circuit design and kernel optimization.

Borg scheduler: Google's large-scale cluster management system, whose scheduling algorithm was made more efficient by AlphaEvolve.

JAX: A Python library for high-performance numerical computing, used in the context of Gemini kernel engineering.

Pallas: An extension to JAX that enables writing custom kernels for hardware accelerators.

XLA: A linear algebra compiler for machine learning, whose intermediate representations (IRs) were optimized by AlphaEvolve.

FunSearch: A prior method for mathematical discovery using LLMs and program search, developed by some of the AlphaEvolve authors, to which AlphaEvolve is compared.

FAQ

What is AlphaEvolve?

AlphaEvolve is an advanced coding agent developed by Google DeepMind that utilizes a combination of evolutionary computation and Large Language Models (LLMs) to automatically generate, critique, and evolve algorithms. Its primary goal is to solve complex scientific and engineering problems by making direct changes to code, learning from continuous feedback through automatic evaluation and code execution.

How does AlphaEvolve differ from previous automated discovery methods like FunSearch?

AlphaEvolve significantly expands upon prior methods like FunSearch by offering greater flexibility and capability. Key differences include:

Scope of Evolution: AlphaEvolve evolves entire code files, potentially hundreds of lines, whereas FunSearch typically evolves single functions (10-20 lines).

Language Agnostic: AlphaEvolve can evolve code in any language, while FunSearch focuses on Python.

Evaluation Time: AlphaEvolve can handle evaluations that take hours and run in parallel on accelerators, whereas FunSearch requires fast evaluations (under 20 minutes on one CPU).

LLM Usage: AlphaEvolve benefits significantly from state-of-the-art LLMs and requires fewer LLM samples (thousands versus millions for FunSearch).

Context and Metrics: AlphaEvolve utilizes rich context and feedback in its prompts and can optimize multiple metrics simultaneously, unlike FunSearch which uses minimal context and optimizes a single metric.

What are some of the practical applications and achievements of AlphaEvolve at Google?

AlphaEvolve has demonstrated significant practical impact within Google's computational infrastructure:

Data Center Scheduling: It developed a more efficient scheduling algorithm for Google's data centers that recovers, on average, 0.7% of Google's fleet-wide compute resources.

Hardware Accelerator Circuit Design: AlphaEvolve found a functionally equivalent simplification in the circuit design of hardware accelerators (TPUs), paving the way for direct AI contributions to hardware design.

LLM Training Acceleration: It accelerated the training of Gemini, the LLM underpinning AlphaEvolve itself, by discovering a tiling heuristic that yielded an average 23% kernel speedup and a 1% reduction in overall training time, while cutting kernel optimization time from months to days.

What notable scientific discoveries has AlphaEvolve made in mathematics and computer science?

AlphaEvolve has achieved several provably correct and state-of-the-art breakthroughs in mathematics and computer science:

Faster Matrix Multiplication: It discovered a search algorithm that found a procedure for multiplying two 4x4 complex-valued matrices using 48 scalar multiplications, the first improvement over Strassen's algorithm in this setting after 56 years. It also matched or surpassed the best-known ranks for numerous other matrix sizes.

Autocorrelation Inequalities: AlphaEvolve found improved bounds for several autocorrelation inequalities in analysis.

Erdős’ Minimum Overlap Problem: It established a new upper bound for this combinatorics problem.

Geometry and Packing Problems: AlphaEvolve improved the lower bound on the kissing number in 11 dimensions (finding a configuration of 593 spheres, up from 592). It also found new best-known constructions for packing unit hexagons inside a larger hexagon, for placing points in 2D and 3D so as to minimize the ratio of maximum to minimum pairwise distance, and for variants of the Heilbronn problem (maximizing the smallest triangle area).
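A kissing configuration can be certified with exact integer arithmetic using a standard sufficient condition: if a set of distinct nonzero points has a minimum pairwise distance at least as large as its maximum norm, then any two points are at least 60 degrees apart as seen from the origin, so unit spheres centered at 2x/|x| each touch the central unit sphere without overlapping one another. The sketch below checks that condition; the low-dimensional examples (the 12 cuboctahedron vertices in 3D and the 24 analogous vectors in 4D) are our own illustration, not AlphaEvolve's 11-dimensional construction, and the function names are hypothetical.

```python
# Hypothetical checker for a sufficient condition for kissing configurations.
from itertools import combinations, product


def sq_norm(p):
    return sum(c * c for c in p)


def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def is_kissing_certificate(points):
    """Distinct, nonzero points with min pairwise distance >= max norm (compared squared, exactly)."""
    if len(set(points)) != len(points) or any(sq_norm(p) == 0 for p in points):
        return False
    max_sq_norm = max(sq_norm(p) for p in points)
    min_sq_dist = min(sq_dist(p, q) for p, q in combinations(points, 2))
    return min_sq_dist >= max_sq_norm


def two_nonzero_vectors(dim):
    """All integer vectors with exactly two nonzero entries, each equal to +1 or -1."""
    pts = []
    for i, j in combinations(range(dim), 2):
        for si, sj in product((1, -1), repeat=2):
            v = [0] * dim
            v[i], v[j] = si, sj
            pts.append(tuple(v))
    return pts


cubo = two_nonzero_vectors(3)
print(len(cubo), is_kissing_certificate(cubo))  # 12 True
```

Working with integer coordinates keeps the check exact, so a construction that passes cannot be an artifact of floating-point rounding.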

How does AlphaEvolve ensure the correctness of the code it generates?

AlphaEvolve addresses the risk of incorrect suggestions from base LLMs by grounding its evolution process in code execution and automatic evaluation: proposed changes are executed, and their performance is automatically evaluated against predefined metrics. For mathematical problems, this might involve checking properties of a construction and returning a score; for engineering problems, it could involve running the code and measuring actual runtime or resource consumption. This continuous feedback loop lets AlphaEvolve identify and discard incorrect solutions, so the programs it keeps are as reliable as the checks built into the evaluation function.
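A minimal sketch of the execute-and-evaluate idea, assuming a hypothetical interface in which each candidate is Python source that must define a function `solve`. The evaluator runs the candidate against known cases and returns a dictionary of metrics, or `None` when the candidate crashes or gives a wrong answer, so that it can be discarded. Calling `exec` on generated code without a sandbox or timeout is acceptable only in a toy like this.

```python
# Hypothetical evaluator: run a candidate program, reject it if incorrect, else score it.

def evaluate(source: str, test_cases):
    """Run candidate source defining solve(xs); return metrics, or None if it is incorrect."""
    namespace = {}
    try:
        exec(source, namespace)  # toy only: no sandboxing or timeouts
        solve = namespace["solve"]
        outputs = [solve(list(xs)) for xs, _ in test_cases]
    except Exception:
        return None  # crashes and missing functions are discarded
    if any(out != expected for out, (_, expected) in zip(outputs, test_cases)):
        return None  # wrong answers are discarded
    # Illustrative secondary metric: shorter source scores higher.
    return {"correct": 1.0, "neg_length": -float(len(source))}


tests = [([3, 1, 2], [1, 2, 3]), ([], []), ([5, 5, 1], [1, 5, 5])]
print(evaluate("def solve(xs):\n    return xs\n", tests))  # None
print(evaluate("def solve(xs):\n    return sorted(xs)\n", tests))
```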

What is the underlying mechanism of AlphaEvolve's iterative improvement process?

AlphaEvolve operates through a distributed controller loop. It samples existing "parent" programs from a database, uses a prompt sampler to build a rich context prompt, and then an LLM generates a "diff" (proposed changes) to the parent program. This diff is then applied to create a "child program," which is subsequently executed by an evaluator. The results of this evaluation are added back to the database, allowing AlphaEvolve to continuously learn from and build upon previously generated programs, effectively "hill-climbing" towards better solutions.
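The diff step can be illustrated with search-and-replace blocks. The sketch below assumes a conflict-marker style SEARCH/REPLACE format (the exact marker strings are our assumption) and applies each block to the parent program to produce the child program; a block whose search text does not appear in the parent is rejected rather than silently ignored. The function name `apply_diff` is hypothetical.

```python
# Hypothetical diff applier for SEARCH/REPLACE blocks.
import re

# Assumed diff format, repeated once per change:
# <<<<<<< SEARCH
# old text
# =======
# new text (may be empty to delete)
# >>>>>>> REPLACE
BLOCK = re.compile(r"<<<<<<< SEARCH\n(.*?)\n=======\n(.*?)\n?>>>>>>> REPLACE", re.DOTALL)


def apply_diff(parent: str, diff: str) -> str:
    """Apply each block to the first match in the parent; raise ValueError on failure."""
    blocks = BLOCK.findall(diff)
    if not blocks:
        raise ValueError("diff contains no SEARCH/REPLACE blocks")
    child = parent
    for old, new in blocks:
        if old not in child:
            raise ValueError(f"search text not found: {old!r}")
        child = child.replace(old, new, 1)
    return child


parent = "def f(x):\n    return x + 1\n"
diff = "<<<<<<< SEARCH\n    return x + 1\n=======\n    return 2 * x + 1\n>>>>>>> REPLACE\n"
print(apply_diff(parent, diff))
```

Asking the model for targeted edits rather than a full rewrite keeps each child program close to its parent, so a large file can evolve one small change at a time.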

What role do LLMs play in AlphaEvolve's evolutionary process?

LLMs are central to AlphaEvolve, acting as intelligent code generators, critics, and evolvers. They receive problem descriptions, current program code, and performance feedback, then generate new code modifications. The ability of AlphaEvolve to leverage powerful LLMs with large context windows, providing them with rich context and feedback in prompts, is crucial for its ability to generate high-quality, non-trivial changes across various components of an algorithm, such as optimizers, loss functions, and hyperparameter sweeps.
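To make "rich context" concrete, here is a hypothetical prompt builder that combines a problem description, a few of the best previously evaluated programs with their scores, and the current parent program into a single prompt. The section layout, wording, and the `Program` and `build_prompt` names are assumptions for illustration, not AlphaEvolve's actual prompt template.

```python
# Hypothetical prompt builder: task, best prior programs with scores, then the parent.
from dataclasses import dataclass


@dataclass
class Program:
    source: str
    score: float


def build_prompt(problem: str, parent: Program, inspirations: list, max_inspirations: int = 2) -> str:
    """Assemble an illustrative prompt for an LLM that proposes code changes."""
    parts = [f"## Task\n{problem}"]
    # Show the highest-scoring prior programs first, as examples of what works.
    top = sorted(inspirations, key=lambda p: p.score, reverse=True)[:max_inspirations]
    for i, prog in enumerate(top, start=1):
        parts.append(f"## Prior program {i} (score {prog.score:.3f})\n{prog.source}")
    parts.append(f"## Current program (score {parent.score:.3f})\n{parent.source}")
    parts.append("## Instructions\nPropose an improvement as SEARCH/REPLACE blocks.")
    return "\n\n".join(parts)


parent = Program("def f(x):\n    return x", 0.5)
others = [Program("v1", 0.9), Program("v2", 0.1), Program("v3", 0.7)]
print(build_prompt("Maximize f on [0, 1].", parent, others))
```

Pairing each earlier program with its score gives the model feedback about which directions have paid off, which is the kind of context that large context windows make affordable.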

What are the key components identified as crucial for AlphaEvolve's success through ablation studies?

Ablation studies revealed the importance of two core components for AlphaEvolve's efficacy:

Evolutionary Approach: The ability to store and use previously generated programs to inform subsequent iterations (the "evolutionary" aspect) significantly outperforms approaches that repeatedly feed the same initial program to the LLM ("No evolution").

Context in Prompts: Providing problem-specific context in the prompts given to the LLM dramatically improves performance compared to providing no explicit context. This highlights the importance of leveraging the large context windows of powerful LLMs.
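A toy version of the loop and of the "No evolution" ablation, under heavy simplifications: a "program" is a bit string, its score is the number of 1 bits, and a random single-bit flip stands in for the LLM's proposed diff. With evolution, each parent is sampled from the top programs in the database; without it, every child is derived from the initial program. All names and parameters are hypothetical.

```python
# Hypothetical toy: evolutionary loop vs. "No evolution", with random mutation standing in for an LLM.
import random


def score(bits):
    return sum(bits)


def mutate(bits, rng):
    """Stand-in for an LLM-proposed diff: flip one randomly chosen bit."""
    child = list(bits)
    i = rng.randrange(len(child))
    child[i] = 1 - child[i]
    return child


def run(use_evolution: bool, steps: int = 200, length: int = 20, top_k: int = 3, seed: int = 0) -> int:
    """Return the best score found; the database keeps every evaluated program."""
    rng = random.Random(seed)
    initial = [0] * length
    database = [(score(initial), initial)]
    for _ in range(steps):
        if use_evolution:
            # Sample a parent from the top-k programs found so far.
            ranked = sorted(database, key=lambda entry: entry[0], reverse=True)
            parent = rng.choice(ranked[:top_k])[1]
        else:
            parent = initial  # "No evolution": always start from the initial program
        child = mutate(parent, rng)
        database.append((score(child), child))
    return max(s for s, _ in database)


print(run(use_evolution=False))  # 1
print(run(use_evolution=True))
```

Without evolution every child sits exactly one flip away from the starting point, so the best score can never exceed 1; with evolution, improvements compound. This mirrors, in miniature, why reusing earlier programs matters.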

Table of Contents with Timestamps

00:00 - Introduction and Theme: Welcome to Heliox, setting the stage for deep exploration of evidence-based topics

00:25 - Episode Introduction: Introducing AlphaEvolve research from Google DeepMind and the concept of AI discovering new algorithms

01:23 - Understanding AlphaEvolve: Exploring the evolutionary coding agent concept and how it differs from traditional AI approaches

02:00 - The Evolutionary Mechanism: How AlphaEvolve uses iterative improvement, testing, and refinement cycles

03:00 - System Architecture and Pipeline: Breaking down the components (program database, prompt samplers, LLM ensemble, and evaluators)

04:50 - Constraining the Search Space: How users define which code sections can be evolved using special comment tags

06:00 - Mathematical Breakthroughs: AlphaEvolve's achievement in matrix multiplication and breaking a 56-year-old record

08:00 - Diverse Mathematical Applications: Success across 50+ open problems in combinatorics, geometry, and number theory

10:45 - Collaboration with Human Experts: Partnership approach with mathematicians like Terence Tao

11:40 - Real-World Impact at Google: Practical applications in data center scheduling and infrastructure optimization

13:27 - Gemini Kernel Engineering: Optimizing low-level code for training large language models

14:40 - Hardware Circuit Design: Contributions to TPU chip design and register transfer level optimization

16:15 - Compiler Optimization: Improving already-optimized code beyond what traditional compilers achieve

17:00 - Broader Implications: Why these seemingly abstract improvements matter for everyday technology

18:00 - System Synergy and Limitations: The importance of combining all components and the need for automated evaluation

19:20 - Future Possibilities: Potential integration into scientific workflows and AI development

20:25 - Closing Thoughts: Final reflections on boundary dissolution, adaptive complexity, and emerging frameworks

Index with Timestamps

AI systems, 00:25, 17:58

Algorithms, 00:25, 06:58, 17:27

AlphaEvolve, 00:25, 01:23, 04:50

Automated evaluation, 02:44, 03:16, 06:00

Bin packing, 11:57, 12:00

Circuit design, 14:42, 15:11

Code evolution, 01:46, 02:00, 19:39

Combinatorics, 09:11, 09:29

Compiler optimization, 15:32, 16:07

Computational efficiency, 17:25, 17:43

Data center scheduling, 11:42, 12:17

DeepMind, 00:25

Erdős minimum overlap, 09:29

Evolutionary approach, 01:58, 02:44

Fleet optimization, 12:38, 12:55

Gemini training, 13:29, 14:01

Geometry problems, 09:11, 09:36

Google infrastructure, 11:42, 12:17

Hardware optimization, 14:42, 15:06

Heilbronn problem, 10:14

Heuristic functions, 12:17, 14:00

Kissing numbers, 09:36, 09:43

Large language models, 01:33, 04:01

Mathematical breakthroughs, 06:58, 08:00

Matrix multiplication, 07:09, 07:30

Number theory, 09:11

Packing problems, 09:57, 10:02

Performance optimization, 13:55, 16:02

Scientific discovery, 00:25, 18:05

Strassen algorithm, 07:43, 07:52

Tao, Terence, 10:58, 11:00

TPU optimization, 13:40, 14:42

Vector bin packing, 11:57

Post-Episode Fact Check

Fact Check: AlphaEvolve Episode

✅ VERIFIED CLAIMS

AlphaEvolve System Existence

Claim: Google DeepMind developed AlphaEvolve as an evolutionary coding agent

Status: ✅ CONFIRMED - Research paper published by Google DeepMind

Matrix Multiplication Breakthrough

Claim: AlphaEvolve discovered an algorithm requiring only 48 scalar multiplications for 4x4 complex matrices

Claim: This broke a 56-year-old record from Strassen's 1969 algorithm

Status: ✅ CONFIRMED - Documented in the research paper

Mathematical Problem Solving

Claim: Applied to over 50 open mathematical problems

Claim: Surpassed state-of-the-art on approximately 20% of problems tackled

Status: ✅ CONFIRMED - Specific results documented

Kissing Numbers Problem

Claim: Improved 11-dimensional bound from 592 to 593 spheres

Status: ✅ CONFIRMED - Specific improvement cited in research

✅ GOOGLE INFRASTRUCTURE CLAIMS

Data Center Optimization

Claim: Recovered 0.7% of Google's fleet-wide compute resources

Status: ✅ CONFIRMED - Real deployment results reported

Gemini Training Optimization

Claim: 23% speedup on specific kernels, 1% reduction in overall training time

Status: ✅ CONFIRMED - Performance metrics documented

TPU Circuit Design

Claim: Optimized register transfer level (RTL) code for TPU arithmetic circuits

Status: ✅ CONFIRMED - Validated by TPU design team

⚠️ CONTEXT NEEDED

Terence Tao Collaboration

Claim: Collaboration with mathematician Terence Tao mentioned

Status: ⚠️ PARTIAL - Paper mentions collaborations with mathematicians but doesn't specify Tao's direct involvement in all cited examples

Timeline Precision

Claim: Various optimization timeframes (months to days)

Status: ⚠️ GENERAL - Specific timeframes may vary by problem complexity

✅ TECHNICAL ACCURACY

Evolutionary Algorithm Approach

Status: ✅ ACCURATE - System uses iterative code evolution with evaluation feedback

LLM Integration

Claim: Uses an ensemble of Gemini 2.0 Flash and Pro models

Status: ✅ CONFIRMED - Technical architecture documented

Evaluation Requirements

Claim: Requires automated evaluation functions

Status: ✅ ACCURATE - Fundamental system requirement

📊 SCALE VERIFICATION

Google Fleet Impact

0.7% resource recovery = tens of thousands of machines' worth of capacity

Status: ✅ MATHEMATICALLY SOUND given Google's known infrastructure scale

Problem Scope

Covers combinatorics, geometry, number theory, analysis

Status: ✅ CONFIRMED - Broad mathematical domain coverage documented

🔬 SCIENTIFIC RIGOR

Peer Review Status

Status: ✅ Research published by Google DeepMind with standard scientific methodology

Reproducibility

Status: ⚠️ LIMITED - Some results may require Google-scale infrastructure to fully reproduce

OVERALL ASSESSMENT: ✅ HIGHLY ACCURATE

The episode accurately represents the AlphaEvolve research with proper context and appropriate caveats about system limitations and requirements.

Image (3000 x 3000 pixels)

Mind Map