🧠 The AI Limitation We're Not Talking About

Why Current Machine Learning Could Be Hitting a Wall

With every article and podcast episode, we provide comprehensive study materials: References, Executive Summary, Briefing Document, Quiz, Essay Questions, Glossary, Timeline, Cast, FAQ, Table of Contents, Index, Polls, 3k Image, and Fact Check.

Essay: The AI Limitation We're Not Talking About: Why Current Machine Learning Could Be Hitting a Wall

We're all swimming in AI hype. It's writing our emails, generating our images, and startling us with its ability to mimic human thought.

But behind every jaw-dropping demo lurks a fundamental limitation that the tech industry doesn't want to admit: today's AI isn't actually intelligent in the way that matters most—adapting to novel situations without massive pre-training.

A recent Heliox podcast episode highlighted this critical shortcoming through a fascinating convergence of industry innovation and academic theory. While most of us marvel at AI that can write poetry or create art, we're overlooking a far more important question: why can't these systems truly learn on the fly?

The Backpropagation Bottleneck

The current machine learning paradigm relies heavily on an algorithm called backpropagation. It's essentially the mechanism that allows neural networks to learn by adjusting connection weights based on errors. The problem? This method requires a global error signal, computed at the network's output and passed backward through every layer, to tell the entire system what to change.

This creates a fundamental limitation: individual parts of the system can't learn independently from their local interactions. It's like having a corporation where no employee can make decisions without the CEO's approval for every action.
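To make the dependence on a global error signal concrete, here is a minimal sketch of backpropagation in a toy two-layer network. It is an illustration only: the network shape, the names (forward, backprop_step, w1, w2) and the numbers are assumptions chosen for the example, not anything taken from the podcast or from IntuiCell.

```python
import math

def forward(x, w1, w2):
    """Two-layer toy network: one tanh hidden unit feeding one linear output unit."""
    h = math.tanh(w1 * x)   # hidden activation
    y = w2 * h              # network output
    return h, y

def backprop_step(x, target, w1, w2, lr=0.1):
    """One gradient-descent step on the squared error 0.5 * (y - target) ** 2."""
    h, y = forward(x, w1, w2)
    output_error = y - target          # global error, measured only at the output
    grad_w2 = output_error * h         # output weight uses the error directly
    # The hidden weight cannot be updated from local quantities alone:
    # its gradient needs output_error and w2 passed back from the layer above.
    grad_w1 = output_error * w2 * (1.0 - h * h) * x
    return w1 - lr * grad_w1, w2 - lr * grad_w2

# Hypothetical starting weights and target, chosen only for illustration.
w1, w2 = 0.5, 0.5
for _ in range(200):
    w1, w2 = backprop_step(x=1.0, target=0.8, w1=w1, w2=w2)

_, y = forward(1.0, w1, w2)
print(f"output after training: {y:.4f}")
```

The line that computes grad_w1 is the point of the example: the hidden unit's update is defined in terms of an error it never observes itself.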

The other critical flaw is the dependence on pre-trained information. Current AI models need to be fed massive datasets before they can do anything useful. They can't adapt in real-time to completely novel situations.

It's the difference between memorizing answers to potential test questions versus understanding the underlying principles that would allow you to solve any question in that domain.

A Different Approach Emerges

Enter IntuiCell, a company claiming to have developed a system that abandons traditional backpropagation in favor of decentralized sensory learning. Their demonstration robot, Luna, apparently learns to balance and navigate new terrains autonomously, without pre-programming specific movements or responses.

What makes this approach different is that Luna isn't following pre-programmed instructions or being "rewarded" through conventional reinforcement learning. Instead, she's finding stability by minimizing unexpected sensory input—a principle that might have far deeper evolutionary roots than we've recognized.

The Evolutionary Perspective

A recent academic paper titled "A Foundational Theory for Decentralized Sensory Learning" provides a theoretical framework that could explain why IntuiCell's approach works. The paper suggests that intelligence itself might emerge from a simple principle: minimizing unexpected sensory input through negative feedback control.
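A negative feedback loop of this kind can be sketched in a few lines. The example below is a generic proportional controller, not the paper's model: the "tilt" variable, the gain value and the function name run_feedback_loop are all assumptions made for illustration.

```python
def run_feedback_loop(tilt=1.0, gain=0.3, steps=50):
    """Drive a sensed deviation toward zero with proportional negative feedback."""
    history = []
    for _ in range(steps):
        sensed = tilt                  # sensory signal: how far from stable
        correction = -gain * sensed    # act against the signal (negative feedback)
        tilt = tilt + correction       # the action changes what is sensed next
        history.append(abs(tilt))
    return history

history = run_feedback_loop()
print(f"first step: {history[0]:.3f}, last step: {history[-1]:.2e}")
```

Nothing in the loop is told what the "right" posture is. The only objective is to make the sensory signal smaller, which is the sense in which minimizing sensory input can stand in for a reward.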

This principle may have begun with single-celled organisms maintaining their internal stability in response to environmental changes. As organisms became more complex, this same mechanism scaled up, leading to the division of labor between specialized cells and eventually to nervous systems that coordinate their actions.

In this view, your brain isn't a centralized command center barking orders at your body—it's a complex network of components each solving their own local problems while contributing to the collective goal of maintaining stability.

Why This Matters

If these converging ideas hold true, we're not just looking at a better AI algorithm—we're witnessing a fundamental paradigm shift in how we understand intelligence itself.

Current AI systems, for all their impressive capabilities, are fundamentally brittle. They excel at specific tasks within domains they've been trained on but collapse when faced with novel situations. That's why autonomous vehicles struggle with unusual scenarios and why chatbots can write a coherent essay but can't truly understand what they're saying.

The approach highlighted in the podcast suggests a different path: intelligence as an emergent property of decentralized systems seeking stability through sensory feedback. This mirrors how biological intelligence evolved—not through massive pre-training, but through adaptation to environmental challenges in real-time.

The Implications

The potential implications of this paradigm shift extend far beyond technology:

Truly adaptable AI: Systems that can learn and evolve on their own without constant human intervention or retraining.

More energy-efficient computing: Current deep learning is notoriously resource-intensive. Decentralized approaches could be far more efficient.

New understanding of consciousness: If intelligence emerges from decentralized processes rather than central control, it challenges our hierarchical models of mind and consciousness.

More resilient systems: Distributed learning creates redundancy and fault tolerance, making systems more robust in unpredictable environments.

Ethical considerations: Systems that truly learn and adapt autonomously raise new questions about AI agency and responsibility.

The Road Ahead

We should be skeptical of any revolutionary claims in AI. The field has a history of over-promising and under-delivering. But the convergence of practical demonstrations like Luna with theoretical frameworks grounded in evolutionary biology suggests something genuinely new might be emerging.

What's particularly compelling is how this approach aligns with what we observe in nature. Biological intelligence wasn't engineered—it emerged through billions of years of evolutionary pressure. Perhaps the path to truly adaptive AI isn't through more compute power or bigger datasets, but through embracing the decentralized, bottom-up principles that gave rise to intelligence in the first place.

Beyond the Hype Cycle

While the tech industry races to build bigger models trained on more data, this alternative approach suggests we might be looking in the wrong direction entirely. The future of AI might not be found in increasingly complex architectures requiring massive computational resources, but in simpler systems that can truly learn and adapt through their interactions with the environment.

If intelligence is fundamentally about adapting to novelty rather than pattern recognition in familiar contexts, then we may need to rethink our entire approach to AI development.

The most exciting aspect of this potential paradigm shift is that it brings us closer to understanding intelligence as it actually exists in nature—not as an engineered artifact but as an emergent property of simple principles operating across multiple scales.

As we stand at this potential inflection point, one thing becomes clear: the limitations of current AI systems aren't just technical problems to be solved—they're indicators that we might be missing something fundamental about the nature of intelligence itself.

And that realization might just be the key to unlocking the next generation of truly intelligent systems.

Link References

A Foundational Theory for Decentralized Sensory Learning

Revolution in Robotics-Robot Dog That Can Learn on Its Own Like a Human | Introducing IntuiCell-Luna

STUDY MATERIALS

Briefing Document

I. IntuiCell's Novel AI Paradigm ("Introducing IntuiCell")

Main Theme: IntuiCell claims to have developed a fundamentally new AI paradigm that overcomes the limitations of traditional machine learning by mimicking the self-driven, on-the-fly learning capabilities of biological systems. Their approach, based on 30 years of "contrarian Neuroscience research," aims to create "genuine intelligence" rather than incremental improvements to existing AI.

Key Ideas and Facts:

Critique of Traditional AI: IntuiCell argues that current AI is "Shackled to back propagation," "limited to pre-trained information," and "incapable of that self-driven on-the-fly learning of humans and animals." They state that traditional ML, relying on feeding "millions of data points into a large statistical model," lacks the capacity to reason or learn in real-world environments and will "fail on the training data."

Fundamentally Different Approach: IntuiCell is not focused on adding more data or compute but on a "fundamentally different approach to anything that's being seen in the current Paradigm." They believe that past AI has been inspired by the brain without a "proper understanding of how the brain actually works."

Neuroscience-Inspired Innovation: IntuiCell claims "30 years of contrarian Neuroscience research" has provided "novel and groundbreaking insights on the mechanisms by which the brain interacts with the world and learn from such interactions." This research allows them to "Envision intelligence from ground up" by considering "Senses actuators neurons and how the neurons are able to solve their local problems."

"Digital Nervous System": Their technology aims to create a "real digital nervous system that learns in real world real time." This is described as a "fundamentally new learning Paradigm that will [be] able to learn like we do."

Luna Demonstration: Luna, a legged robot resembling an off-the-shelf laboratory robot, serves as a demonstration of IntuiCell's capabilities. The key aspect is that the network architectures kept on Luna learn "autonomously by exploring" without being "pre-trained in simulation" or "dependent on data sets." According to the presenters, the control architectures are not fine-tuned either.

Autonomous Learning in Real-Time: Luna's demonstration involves learning her own mechanics, balancing, and adapting to new terrains (initially a flat surface, then ice) in real time. The leash is a "safety feature" to allow her to "fall and learn and recalibrate its networks." This mimics how mammals learn through "random limb movements."

Decentralized Problem Solving: IntuiCell designs "our senses to propagate the problem that they're designed to perceive," allowing the robot to handle these problems locally without a predefined mathematical model of the robotics configuration or a "global cost function telling precisely what to do."

Generalizability: IntuiCell emphasizes that their "networks are so generic" they can "learn any behavior on any agent physical or digital," including drones, mobile robots, and digital entities.

"First Generation of Genuine Intelligence": IntuiCell positions their technology not as "next Generation AI" but as the "first generation of genuine intelligence," marking a shift towards autonomous systems capable of real-time learning in chaotic real-world environments.

Future Stages of Development: Luna is currently in her "newborn era," learning basic motor control. The next stage is the "child era," where she will be taught "new skills from Instructions in all in real world real time just like humans and animals do."

Key Quotes:

"so udia inel says that there is an inherent problem with the current AI Paradigm in that it's Shackled to back propagation it's limited to pre-trained information and it's incapable of that self-driven on-the-fly learning of humans and animals..."

"...traditional ml isn't intelligent it's about feeding millions of data points into a large statistical model and leaving it without any capacity to reason or learn..."

"...we're taking a fundamentally different approach to anything that's being seen in the current Paradigm."

"...we have 30 years of contrarian Neuroscience research that gives us novel and groundbreaking insights on the mechanisms by which the brain interacts with the world and learn from such interactions."

"it unlocks fundamentally new learning Paradigm that will [be] able to learn like we do it's a real digital nervous system that learns in real world real time this has never been done before."

"exactly it's not pre-trained in simulation it's not dependent on data sets and we don't even [fine-tune] control architectures we keep our indel Network architectures on Luna and it learns autonomously by exploring."

"We want her to fall and learn and recalibrate its networks..."

"exactly we did not predefine any mathematical model explaining the robotics configuration or neither we have a global cost function telling precisely what to do."

"no this is not next Generation AI this is the first generation of genuine intelligence."

II. A Foundational Theory for Decentralized Sensory Learning ([2503.15130] A Foundational Theory for Decentralized Sensory Learning)

Main Theme: This paper proposes a foundational theory for learning in biological systems, from unicellular organisms to humans, based on the principle of decentralized sensory learning through negative feedback control of sensory signals. It argues against the necessity of extrinsic error measurements and global learning algorithms prevalent in both neuroscience and traditional AI.

Key Ideas and Facts:

Critique of Current Frameworks: The paper notes that popular frameworks in neuroscience and AI rely on "extrinsic error measurements and global learning algorithms."

Evolutionary Basis: The theory is built on "conjectures based on evolutionary insights on the origin of cellular adaptive mechanisms."

Reinterpreting Sensory Signals: The authors reinterpret the core meaning of sensory signals, allowing the brain to be seen as a "negative feedback control system."

Local Learning Algorithms: This reinterpretation leads to the possibility of "local learning algorithms without the need for global error correction metrics."

Minimizing Sensory Activity as Reward: The paper posits that "a sufficiently good minima in sensory activity can be the complete reward signal of the network." This minimum is considered "both necessary and sufficient for biological learning to arise."

Early Origins of Learning: The authors suggest that this learning method was likely present in the "earliest unicellular life forms on earth."

Scaling to Multicellular Organisms: The same principle is argued to hold and scale to multicellular organisms, where it can also lead to "division of labour between cells."

Evolution of the Nervous System: The paper proposes that the evolution of the nervous system was likely an adaptation to "more effectively communicate intercellular signals to support such division of labour" in the context of this learning principle.

Universal Learning Principle: The core argument is that the "same learning principle that evolved already in the earliest unicellular life forms, i.e. negative feedback control of externally and internally generated sensor signals, has simply been scaled up to become a fundament of the learning we see in biological brains today."

Diverse Biological Settings: The paper illustrates how this principle can be applied to interpret sensory signals in various biological settings, from unicellular organisms to humans, and its relation to current neuroscientific theories and findings.
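The idea of local learning without a global error metric, as summarized in the points above, can be illustrated with a small sketch. This is not the algorithm from the paper: the LocalUnit class, the update rule, and the hypothetical environment (true_couplings) are assumptions made for the example. Each unit adjusts its own weight using only its own input and its own residual sensory signal, and no quantity is shared between units or computed over the whole network.

```python
import random

class LocalUnit:
    """A unit that learns to cancel its own sensory signal from local information only."""

    def __init__(self, lr=0.1):
        self.weight = 0.0
        self.lr = lr

    def step(self, context, disturbance):
        action = self.weight * context      # the unit's own compensating output
        sensed = disturbance - action       # residual sensory activity at this unit
        # Local update: uses only this unit's input and its own residual signal.
        self.weight += self.lr * sensed * context
        return abs(sensed)

random.seed(0)
true_couplings = [0.5, -1.2, 2.0]           # hypothetical environment, unknown to the units
units = [LocalUnit() for _ in true_couplings]

activity = []
for _ in range(500):
    context = random.uniform(-1.0, 1.0)
    total = sum(u.step(context, k * context) for u, k in zip(units, true_couplings))
    activity.append(total)

early = sum(activity[:20]) / 20             # mean sensory activity at the start
late = sum(activity[-20:]) / 20             # mean sensory activity at the end
print(f"early activity: {early:.3f}, late activity: {late:.2e}")
```

In this toy setting, total sensory activity falls as each unit independently finds the weight that cancels its own disturbance. Whether the same holds for the far richer systems the paper discusses is exactly the kind of claim that needs the scrutiny the briefing calls for.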

Key Quotes:

"In both neuroscience and artificial intelligence, popular functional frameworks and neural network formulations operate by making use of extrinsic error measurements and global learning algorithms."

"Through a set of conjectures based on evolutionary insights on the origin of cellular adaptive mechanisms, we reinterpret the core meaning of sensory signals to allow the brain to be interpreted as a negative feedback control system, and show how this could lead to local learning algorithms without the need for global error correction metrics."

"Thereby, a sufficiently good minima in sensory activity can be the complete reward signal of the network, as well as being both necessary and sufficient for biological learning to arise."

"We therefore propose that the same learning principle that evolved already in the earliest unicellular life forms, i.e. negative feedback control of externally and internally generated sensor signals, has simply been scaled up to become a fundament of the learning we see in biological brains today."

III. Potential Connections and Implications

Both sources highlight a dissatisfaction with the current AI paradigm and draw inspiration from biological systems for developing more sophisticated learning mechanisms.

IntuiCell's approach aligns with the principles of decentralized learning: Their emphasis on local problem-solving by neurons and the absence of a global cost function or pre-defined models resonates with the theory of decentralized sensory learning. Luna's autonomous learning through interaction and sensory feedback could be seen as an instantiation of the negative feedback control principle at a macroscopic level.

The theoretical paper provides a potential foundation for IntuiCell's claims: The idea that minimizing sensory activity can act as a fundamental reward signal could explain how IntuiCell's network architectures learn without explicit programming or vast datasets. The robot's efforts to stand and balance could be interpreted as attempts to minimize sensory input related to instability.

Shift towards intrinsic learning: Both sources advocate for a shift away from purely data-driven, extrinsically rewarded learning towards more intrinsic, self-driven learning mechanisms, mirroring how biological organisms learn and adapt.

IV. Conclusion

IntuiCell presents a bold vision for the future of AI, claiming a breakthrough based on neuroscience that enables genuine, autonomous learning in real-world environments. The theoretical paper on decentralized sensory learning offers a potential biological foundation for such an approach, suggesting that learning through local sensory feedback and the minimization of sensory activity might be a fundamental principle underlying intelligence across diverse biological systems. While IntuiCell's claims require further scrutiny and validation, the convergence of their practical demonstrations with emerging theoretical frameworks in learning is noteworthy and suggests a potentially significant direction for future AI research and development.

Quiz:

What is the fundamental limitation of the current AI paradigm according to the representative from IntuiCell?

How does IntuiCell's approach to AI development differ from traditional machine learning methods?

What key insight from neuroscience has informed IntuiCell's development of their new AI technology?

Explain the significance of "Luna" in the context of IntuiCell's presentation.

What did Luna demonstrate in the initial part of her demonstration?

According to the IntuiCell representative, what will Luna be able to do after her "newborn era"?

What is the central argument presented in the arXiv paper "A Foundational Theory for Decentralized Sensory Learning" regarding current approaches in neuroscience and AI?

What is the proposed role of sensory signals in the brain according to the arXiv paper?

What type of learning algorithm does the arXiv paper suggest could arise from their reinterpretation of sensory signals?

How does the arXiv paper connect the proposed learning principle to the evolution of life forms?

Answer Key:

The representative from IntuiCell states that the current AI paradigm is inherently limited because it is shackled to back propagation, limited to pre-trained information, and incapable of the self-driven, on-the-fly learning seen in humans and animals.

IntuiCell is taking a fundamentally different approach by not relying on feeding millions of data points into large statistical models. Instead, they are envisioning intelligence from the ground up, focusing on how senses, actuators, and neurons solve local problems, leading to a new learning paradigm.

IntuiCell's development is informed by 30 years of contrarian neuroscience research, which has provided novel and groundbreaking insights into the mechanisms by which the brain interacts with the world and learns from these interactions.

Luna is presented as a vehicle to intuitively demonstrate IntuiCell's AI capabilities in a real-world environment. Unlike a pre-trained demo, Luna learns autonomously by exploring, showcasing continuous and autonomous learning.

Luna demonstrated the ability to learn her own mechanics and learn to stand in balance without prior programming, showcasing autonomous learning in real-time based on sensory input and interaction with her environment.

After her "newborn era," Luna will enter a "child era" where IntuiCell will be able to teach her new skills from instructions in the real world and in real time, similar to how humans and animals learn.

The arXiv paper argues that popular functional frameworks and neural network formulations in both neuroscience and AI rely on extrinsic error measurements and global learning algorithms.

The arXiv paper reinterprets the core meaning of sensory signals, proposing that the brain can be understood as a negative feedback control system where minimizing sensory activity can be the complete reward signal.

The arXiv paper suggests that their reinterpretation of sensory signals could lead to local learning algorithms, eliminating the need for global error correction metrics.

The arXiv paper argues that this method of learning, based on negative feedback control of sensory signals, was likely present in the earliest unicellular life forms and has simply been scaled up in multicellular organisms and eventually biological brains.

Essay Questions

Compare and contrast the limitations of traditional machine learning as described by IntuiCell with the critique of current AI approaches presented in the arXiv paper. How do both perspectives suggest a need for a fundamentally different approach to artificial intelligence?

Discuss the potential significance of IntuiCell's "digital nervous system" that learns in real-world, real-time. What are the potential advantages and challenges of an AI system that is not reliant on pre-trained data or simulations?

Analyze the foundational theory for decentralized sensory learning proposed in the arXiv paper. How does the concept of "negative feedback control of sensory signals" offer a new perspective on learning in biological systems and potentially in artificial intelligence?

Consider the implications of IntuiCell's demonstration with Luna learning to stand and adapt to new terrain. How does this demonstration support or challenge current understandings of intelligence and learning in machines?

Explore the potential convergence or divergence of the ideas presented by IntuiCell and the foundational theory in the arXiv paper. Could the principles of decentralized sensory learning inform the development of more genuinely intelligent AI systems like the one IntuiCell is pursuing?

Glossary of Key Terms

AI Paradigm: The prevailing framework, theories, and methods that define the field of Artificial Intelligence at a given time.

Back Propagation: An algorithm used to train artificial neural networks by calculating the gradient of the loss function with respect to the network's weights, working backward from the output layer, so that each weight can be adjusted in the direction opposite to its gradient.

Pre-trained Information: Data or knowledge that has been fed into an AI model before it is used for a specific task.

Self-driven Learning: The ability of a system to learn and improve autonomously without explicit external guidance or pre-defined datasets for every scenario.

Traditional ML (Machine Learning): AI approaches that typically involve training statistical models on large datasets to recognize patterns and make predictions, often lacking inherent reasoning or on-the-fly learning capabilities.

Neural Network: A computational model inspired by the structure and function of the human brain, consisting of interconnected nodes or "neurons" that process and transmit information.

Senses (as used by IntuiCell): The mechanisms through which an AI agent perceives information from its environment.

Actuators: The components of a robot or AI agent that allow it to interact with its environment (e.g., motors, limbs).

Local Problems (in the context of neurons): The specific computational tasks or adjustments that individual neurons or small groups of neurons perform based on their immediate inputs.

Learning Paradigm: A distinct approach or framework for how learning occurs in a system, whether biological or artificial.

Digital Nervous System: IntuiCell's concept for their AI, suggesting a system that learns and adapts in real-time like a biological nervous system.

Autonomous Learning: The ability of a system to learn and improve its performance independently, without continuous external programming or intervention.

Intrinsic Learning: Learning that is driven by internal motivations or the inherent structure of the system and its interactions with the environment, rather than solely by external rewards or pre-defined objectives.

Decentralized Sensory Learning: A theoretical framework where learning occurs through local interactions and adjustments based on sensory input, without the need for a central controller or global error signals.

Extrinsic Error Measurements: External metrics or signals used to evaluate the performance of a learning system and guide its adjustments.

Global Learning Algorithms: Learning methods that involve processing information across the entire system or network to optimize performance based on a global error signal.

Negative Feedback Control System: A system where the output is fed back to the input in a way that tends to reduce fluctuations and maintain stability.

Local Learning Algorithms: Learning methods where individual components of a system (e.g., neurons) adjust their behavior based only on locally available information.

Reward Signal: A signal that indicates the success or failure of an action, used to guide learning in reinforcement learning paradigms.

Division of Labour (between cells): The specialization of different cells within a multicellular organism to perform specific functions.

Intercellular Signals: Communication signals exchanged between cells.

Timeline of Main Events

Past (Mentioned):

Early 1950s: AI technology begins to be inspired by how the brain works, albeit without a complete understanding.

For decades: Neuroscience research operates with functional frameworks and neural network formulations using extrinsic error measurements and global learning algorithms.

30 years prior to the "Introducing IntuiCell" interview: Inel conducts contrarian neuroscience research leading to novel insights into brain mechanisms of interaction and learning.

Evolution of life: The paper "A Foundational Theory for Decentralized Sensory Learning" posits that the earliest unicellular life forms developed a learning principle based on negative feedback control of sensory signals. This principle scaled up with multicellular organisms and the evolution of the nervous system.

March 19, 2025: The paper "A Foundational Theory for Decentralized Sensory Learning" is submitted to arXiv.

Present (During the "Introducing IntuiCell" Interview):

Sudia Inel states that the current AI paradigm is limited by its reliance on backpropagation, pre-trained information, and a lack of self-driven, on-the-fly learning.

Inel presents "Luna," a quadrupedal robot, as a vehicle to demonstrate IntuiCell's new AI capabilities in a real-world environment.

Luna is described as using a generic neural network architecture developed by Inel that learns autonomously through exploration, without pre-training in simulation or reliance on large datasets.

Luna demonstrates the ability to learn her own mechanics and balance on a flat surface in real-time.

Luna is then placed on an icy terrain and demonstrates the ability to generalize and adapt her balance in the novel scenario through continuous learning.

Inel explains that this technology represents the "first generation of genuine intelligence," where intelligence is the starting point for autonomous systems capable of real-time learning in chaotic environments.

Luna is described as being in her "newborn era," focused on body control. The next phase, the "child era," will involve teaching her new skills through real-world, real-time instructions, similar to humans and animals.

Cast of Characters

Sudia Inel: A representative or key figure associated with the company "IntuiCell." They articulate the limitations of the current AI paradigm and present IntuiCell's fundamentally different approach based on 30 years of contrarian neuroscience research. They introduce Luna as a demonstration of their technology and explain its capabilities and future potential.

Luna: A quadrupedal robot, resembling an off-the-shelf laboratory robot, used by IntuiCell. It serves as a physical embodiment and demonstration platform for IntuiCell's new AI technology. Luna is not pre-trained and learns autonomously in real-time through interaction with its environment. It demonstrates the ability to learn balance on different terrains and is expected to progress through developmental "eras" of learning.

Linus Mårtensson: One of the authors of the paper "A Foundational Theory for Decentralized Sensory Learning." Their research focuses on reinterpreting sensory signals within a negative feedback control system framework, proposing a theory for local learning algorithms that doesn't require global error correction.

Jonas M.D. Enander: Another author of the paper "A Foundational Theory for Decentralized Sensory Learning." They contributed to the development of the foundational theory for decentralized sensory learning, exploring its evolutionary origins and implications for biological intelligence.

Udaya B. Rongala: The third author listed on the paper "A Foundational Theory for Decentralized Sensory Learning." They collaborated on the research exploring the role of negative feedback control in sensory learning across different levels of biological complexity.

Henrik Jörntell: The submitter of the paper "A Foundational Theory for Decentralized Sensory Learning" to arXiv. Likely a key contributor to the research presented in the paper.

FAQ

1. What is the fundamental problem with the current AI paradigm according to IntuiCell?

The current AI paradigm is fundamentally limited because it relies heavily on backpropagation, is restricted to pre-trained information, and lacks the self-driven, on-the-fly learning capabilities observed in humans and animals. It's characterized by feeding massive datasets into statistical models without enabling genuine reasoning or continuous learning in real-world environments.

2. How does IntuiCell's approach differ from traditional machine learning and current AI advancements?

IntuiCell is taking a fundamentally different approach rooted in 30 years of contrarian neuroscience research. Instead of incremental improvements by adding more data or compute to existing models, they are envisioning intelligence from the ground up, focusing on how basic elements like senses, actuators, and individual neurons solve local problems through continuous interaction with the world. This contrasts with traditional ML's dependence on large datasets and pre-defined models, as well as other current AI efforts.

3. What are the key principles behind IntuiCell's "genuine intelligence"?

IntuiCell's approach is based on novel insights into how the brain interacts with the world and learns. It aims to create a "digital nervous system" that learns in real-world, real-time, autonomously. This involves enabling the AI agent to learn through exploration and interaction with its environment, without pre-training on specific datasets or relying on predefined mathematical models or global cost functions.

4. What is Luna, and what is its significance in demonstrating IntuiCell's technology?

Luna is a robotic platform, resembling an off-the-shelf laboratory robot, used by IntuiCell as a vehicle to intuitively demonstrate their AI capabilities in a real-world environment. It's not pre-programmed or dependent on datasets; instead, it learns autonomously by exploring its surroundings. Luna showcases the generic nature of IntuiCell's network architectures, suggesting they can be applied to various agents, both physical and digital, to learn diverse behaviors.

5. What did Luna demonstrate during its initial presentation, and what were the key aspects of this demonstration?

Luna demonstrated the ability to learn its own mechanics and achieve balance without prior programming or knowledge. This was achieved through the robot's sensory input and its internal network adapting in real-time as it experienced the consequences of its movements, such as falling and recalibrating. The demonstration highlighted the system's capacity for autonomous learning and adaptation to new physical challenges.

6. How does the concept of "negative feedback control of sensory signals" relate to learning, according to the decentralized sensory learning theory?

The foundational theory for decentralized sensory learning proposes that sensory signals are intrinsically linked to negative feedback control. The theory suggests that minimizing sensory activity can act as the complete reward signal for a network, driving local learning algorithms without the need for external error measurements or global learning metrics. Achieving a sufficiently good minimum in sensory activity becomes both necessary and sufficient for biological learning.
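To make the idea concrete, here is a toy sketch in Python. It is not the algorithm from the paper or anything IntuiCell has published; the function name `run_local_feedback`, the constant disturbances, and the update rule are all assumptions chosen for illustration. Each unit sees only its own sensory signal, pushes back against it, and adjusts its own weight until that signal fades. No unit receives a global error.

```python
def run_local_feedback(disturbances, learning_rate=0.1, steps=100):
    """Toy model (hypothetical): each unit cancels its own disturbance.

    Unit i senses s = d_i - w_i, where d_i is a constant disturbance and
    w_i is the unit's current counteracting output. The unit then nudges
    w_i in the direction that shrinks its own sensory signal. No unit
    reads another unit's signal or any shared error.
    """
    weights = [0.0] * len(disturbances)
    history = []  # total absolute sensory activity at each step
    for _ in range(steps):
        signals = []
        for i, d in enumerate(disturbances):
            s = d - weights[i]               # what unit i currently senses
            weights[i] += learning_rate * s  # local negative feedback update
            signals.append(s)
        history.append(sum(abs(s) for s in signals))
    return weights, history


if __name__ == "__main__":
    weights, history = run_local_feedback([1.0, -2.0, 0.5])
    print("sensory activity at start:", history[0])
    print("sensory activity at end:  ", history[-1])
    print("learned outputs:", weights)
```

In this sketch, total sensory activity shrinks by a constant factor every step, and each unit's output settles near the disturbance it had to cancel. That is the cruise control picture in miniature: the only "reward" is that the signal goes quiet.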

7. According to the decentralized sensory learning theory, how did learning evolve from unicellular organisms to complex brains?

The theory posits that the fundamental learning principle of negative feedback control of sensory signals was likely present in the earliest unicellular life forms. Over evolutionary time, this basic principle scaled up to multicellular organisms, where it also facilitated the division of labor between cells. The evolution of the nervous system is seen as an adaptation to more effectively communicate intercellular signals to support this division of labor, suggesting a continuous thread of this learning mechanism from simple to complex life.

8. How does the decentralized sensory learning theory align with IntuiCell's approach to AI?

Both IntuiCell's approach and the decentralized sensory learning theory emphasize the importance of learning through interaction with the environment and minimizing a form of "error" or "sensory activity" without relying on explicit external feedback or global learning algorithms. IntuiCell's focus on local problem-solving by individual "neurons" within their network, driven by sensory input and resulting in emergent behaviors like balance, resonates with the theory's concept of decentralized learning driven by the intrinsic goal of minimizing sensory signals or achieving a state of equilibrium within the system.

Table of Contents with Timestamps

00:00 - Introduction (0:15)

Opening of Heliox podcast with tagline and theme music.

00:24 - Current AI Limitations (1:12)

Discussion of impressive AI capabilities but potential limitations in adapting to novel situations.

01:36 - The IntuiCell Approach (3:07)

Introduction to IntuiCell's bold claims about fixing AI limitations through a contrarian neuroscience perspective.

04:37 - From Brains to Decentralized Learning (6:15)

Exploration of moving away from brain-inspired AI to decentralized learning approaches seen in biological systems.

07:52 - Luna: The Robot Demonstration (10:31)

Description of IntuiCell's robot Luna, which learns autonomously without pre-programming, including tests on novel surfaces.

11:33 - Evolutionary Perspective (13:59)

Connection between IntuiCell's approach and the evolutionary theory presented in the arXiv paper on sensory learning.

14:41 - Conclusions and Implications (16:24)

Summary of how this new approach might fundamentally change our understanding of intelligence and learning, followed by podcast closing.

Index with Timestamps

Adaptability, 9:42, 10:31

AI limitations, 0:27, 0:38, 3:25

Autonomous systems, 8:09, 10:49

Backpropagation, 0:49, 3:32, 3:49

Biological systems, 2:25, 6:00, 11:33

Bottom-up approach, 5:39

Brain-inspired AI, 4:48

Contrarian neuroscience, 5:01

Cruise control analogy, 6:30

Data-driven approaches, 1:33, 2:03

Decentralized learning, 2:39, 5:44, 12:22

Division of labor, 11:45, 13:47

Error signals, 3:49, 5:51, 7:29

Evolution, 2:25, 11:45, 12:54

Global learning algorithms, 5:52

Heliox, 0:00, 15:51

Intelligence emergence, 12:38, 15:08

IntuiCell, 1:16, 1:19, 14:50

Local learning algorithms, 7:11, 14:05

Luna (robot), 7:52, 8:08, 10:06, 11:11

Negative feedback, 6:20, 6:49

Neuroscience research, 5:01, 5:07

Pre-trained data, 0:53, 4:11

Real-time adaptation, 4:15, 10:20, 10:31

Sensory activity minimization, 7:29, 7:45, 14:24

Sensory learning, 2:39, 6:20, 13:10

Single-celled organisms, 13:10, 13:14

Poll

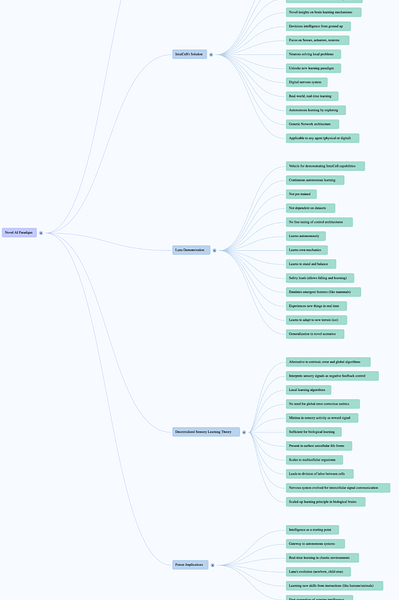

Image (3000 x 3000 pixels)

Mind Map