⚛️ When Physics Becomes the Algorithm

What Quantum AI Means for the Rest of Us

Entangled whispers—

minds linked beyond space, beyond words.

Physics learns to trust.

With every article and podcast episode, we provide comprehensive study materials: References, Executive Summary, Briefing Document, Quiz, Essay Questions, Glossary, Timeline, Cast, FAQ, Table of Contents, Index, Polls, 3k Image, Fact Check, Comic and Street Art at the very bottom of the page.

Essay

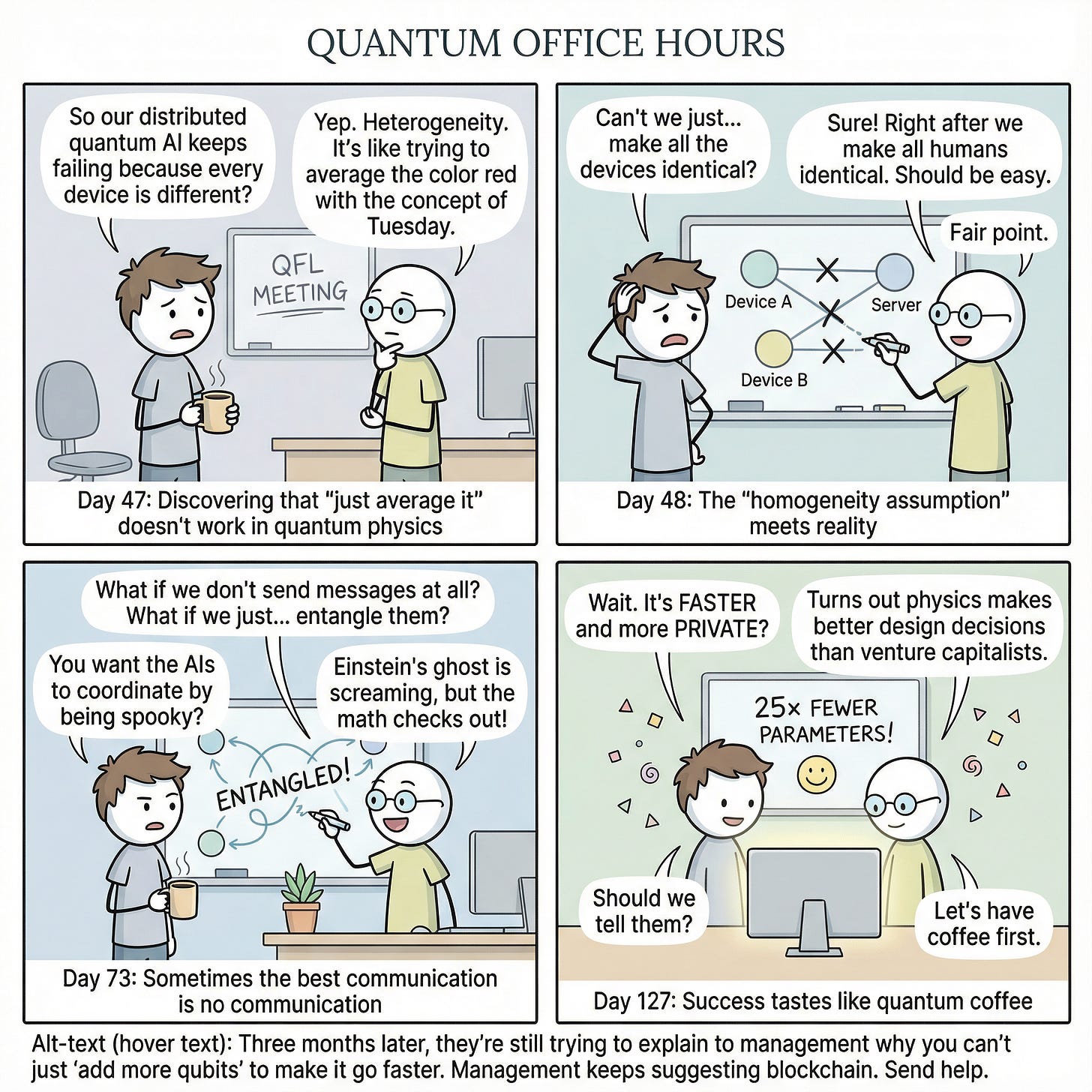

We live in a world where technology promises to solve everything, yet somehow makes everything more complicated. Every few years, we’re told about the next revolutionary breakthrough—blockchain, the metaverse, whatever buzzword venture capitalists are currently salivating over. Most of these revolutions turn out to be expensive ways to do things we were already doing, just with more energy consumption and investor presentations.

But occasionally, something genuinely different emerges. Something that doesn’t just optimize existing systems but fundamentally reimagines how systems could work. The convergence of quantum computing and artificial intelligence might actually be one of those rare moments. And unlike most technological revolutions that promise to “disrupt” our lives (usually code for “make things worse while extracting more value”), this one hints at solving problems we’ve created with our previous solutions.

Here’s the uncomfortable truth about modern AI: it’s built on a foundation of centralization and surveillance. When we talk about machine learning systems getting smarter, we’re really talking about massive corporations hoovering up unimaginable amounts of data, funneling it into centralized servers, and processing it in ways that make our privacy concerns look quaint. The computational power is impressive. The ethical framework is horrifying.

The researchers whose work forms the basis of this deep dive (Ratun Rahman, Dinh C. Nguyen, Christo Kurisummoottil Thomas, Alexander DeRieux, and Walid Saad) weren’t trying to build a better surveillance machine. They were trying to solve a genuinely thorny problem: how do you create AI systems that are both powerful and respectful of privacy? How do you enable collaboration without coercion? How do you build intelligence that doesn’t require everyone to surrender their data to a central authority?

Their answer involves something called Quantum Federated Learning, and it’s worth understanding because it represents a different philosophy of how technology could work.

The Privacy Problem We’ve Normalized

We’ve become so accustomed to the surveillance economy that we barely notice it anymore. Every app, every device, every “smart” system operates on the assumption that your data belongs to whoever can collect it. Want better recommendations? Hand over your browsing history. Want personalized healthcare? Upload your medical records. Want your city to run more efficiently? Let us track your every movement.

The classical approach to machine learning requires this centralization. You need massive datasets in one place to train effective models. But this creates what security experts call a “single point of failure”—a honeypot of valuable information just waiting to be breached, hacked, or subpoenaed.

Federated Learning was the first attempt to solve this. Instead of sending your data to a central server, the server sends a model to your device. Your device trains the model on your local data, then sends back only the updated model parameters—not the raw data itself. It’s a clever workaround, and it’s already being used by companies like Google for features like predictive text on your phone.
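
For readers who want to see the mechanics, here is a minimal sketch of that loop in classical form: clients train locally, and the server only ever sees parameters. The linear model, the three synthetic clients, and the function names (`local_update`, `federated_average`, `run_fedavg`) are illustrative assumptions, not anything taken from the papers or from a production system.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, steps=20):
    """Client step: gradient descent on local data. X and y never leave this function."""
    w = weights.copy()
    for _ in range(steps):
        grad = 2 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w

def federated_average(client_weights, client_sizes):
    """Server step: mean of client parameters, weighted by local dataset size."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)
    return (stacked * sizes[:, None]).sum(axis=0) / sizes.sum()

def run_fedavg(rounds=30, seed=0):
    """Toy run with three hypothetical clients holding synthetic data."""
    rng = np.random.default_rng(seed)
    true_w = np.array([2.0, -1.0])
    clients = []
    for n in (40, 60, 100):
        X = rng.normal(size=(n, 2))
        y = X @ true_w + 0.01 * rng.normal(size=n)
        clients.append((X, y))
    global_w = np.zeros(2)
    for _ in range(rounds):
        # Each client starts from the current global model and returns parameters only.
        updates = [local_update(global_w, X, y) for X, y in clients]
        global_w = federated_average(updates, [len(y) for _, y in clients])
    return global_w, true_w
```

Calling `run_fedavg()` returns the learned global parameters alongside the ones used to generate the data, so the two can be compared directly.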

But there’s a problem. Classical Federated Learning still has limitations. The models can be reverse-engineered to expose private information. The communication overhead is massive. And perhaps most importantly, it doesn’t scale well to the truly complex problems we need AI to solve—problems in medicine, climate modeling, materials science, and beyond.

Enter the Quantum Weirdness

Quantum computing sounds like science fiction, and in many ways, it still is. But the core principles are real, and they’re genuinely strange. A quantum bit, or qubit, doesn’t have to be a zero or a one. It can exist in a weighted combination of both at once, in what’s called superposition. And multiple qubits can become “entangled,” linked so that measurements on them stay correlated no matter how far apart they are. Einstein famously dismissed this as “spooky action at a distance,” though entanglement on its own cannot be used to send a message faster than light.

These aren’t just interesting physics facts. They’re computational resources. Superposition lets a quantum computer manipulate many possible states at once, although a measurement returns only one outcome, so algorithms have to be designed to make the useful answers more likely. Entanglement allows for correlations that classical systems simply cannot achieve. Together, for certain problems, they may enable processing of complex, high-dimensional data that would be impractical for classical computers.

But here’s where it gets interesting for those of us who care about building better systems: quantum mechanics might offer a way to do distributed AI that’s fundamentally more private and more efficient than anything classical computing can achieve.

The Breakthrough: Making It Work in the Real World

The researchers confronted a problem that most quantum computing work conveniently ignores: the real world is messy. Devices are different. Data is inconsistent. Quantum systems are incredibly fragile and prone to noise. The theoretical elegance of quantum algorithms falls apart when you try to deploy them across a network of diverse, imperfect devices.

This messiness—what they call “heterogeneity”—isn’t just a statistical problem. It’s a physics problem. When quantum states differ between devices, you can’t just average them like you would with classical data. They exist in incompatible mathematical spaces. It’s like trying to average the color red with the concept of justice—they’re fundamentally different kinds of information.

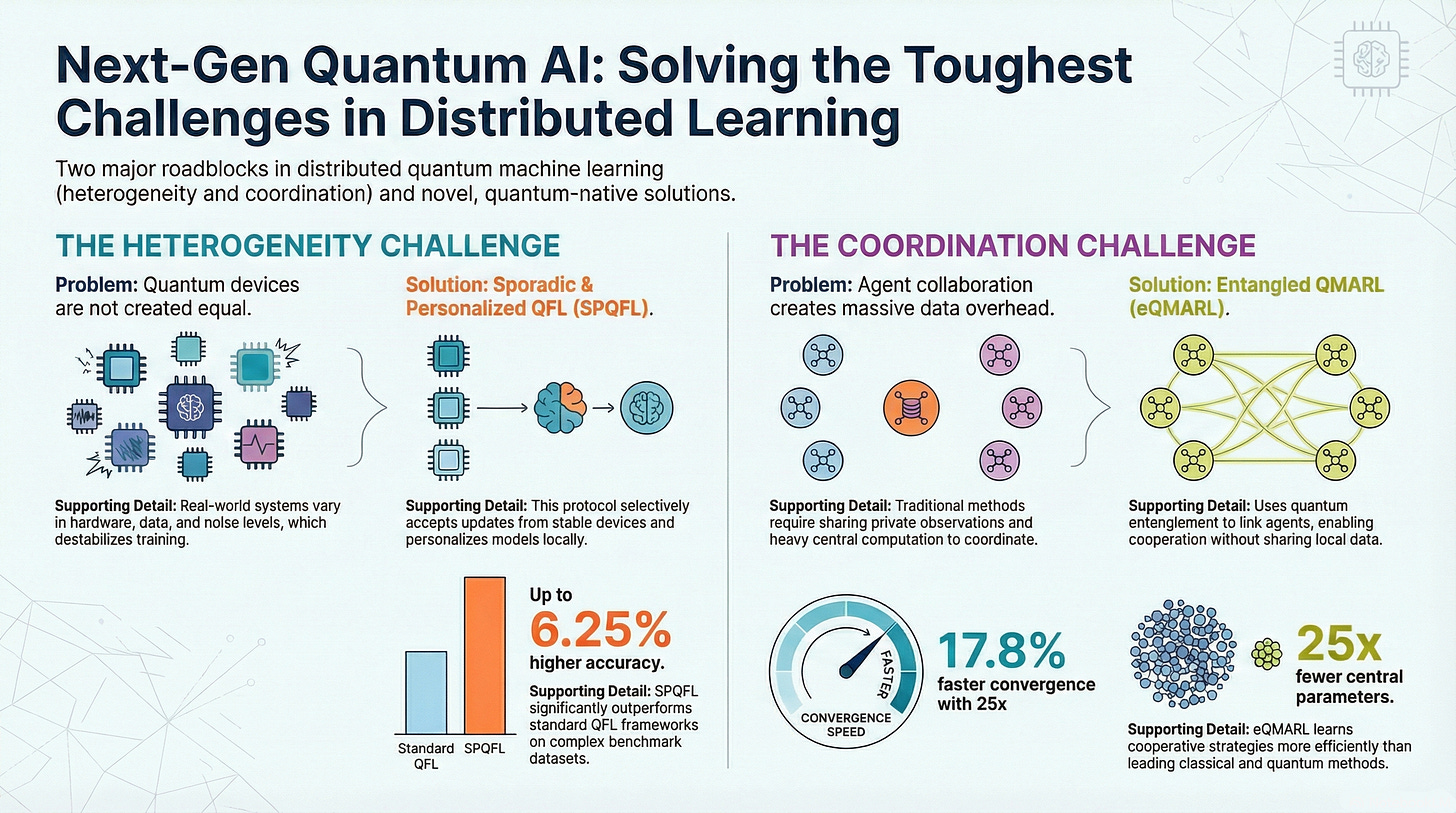

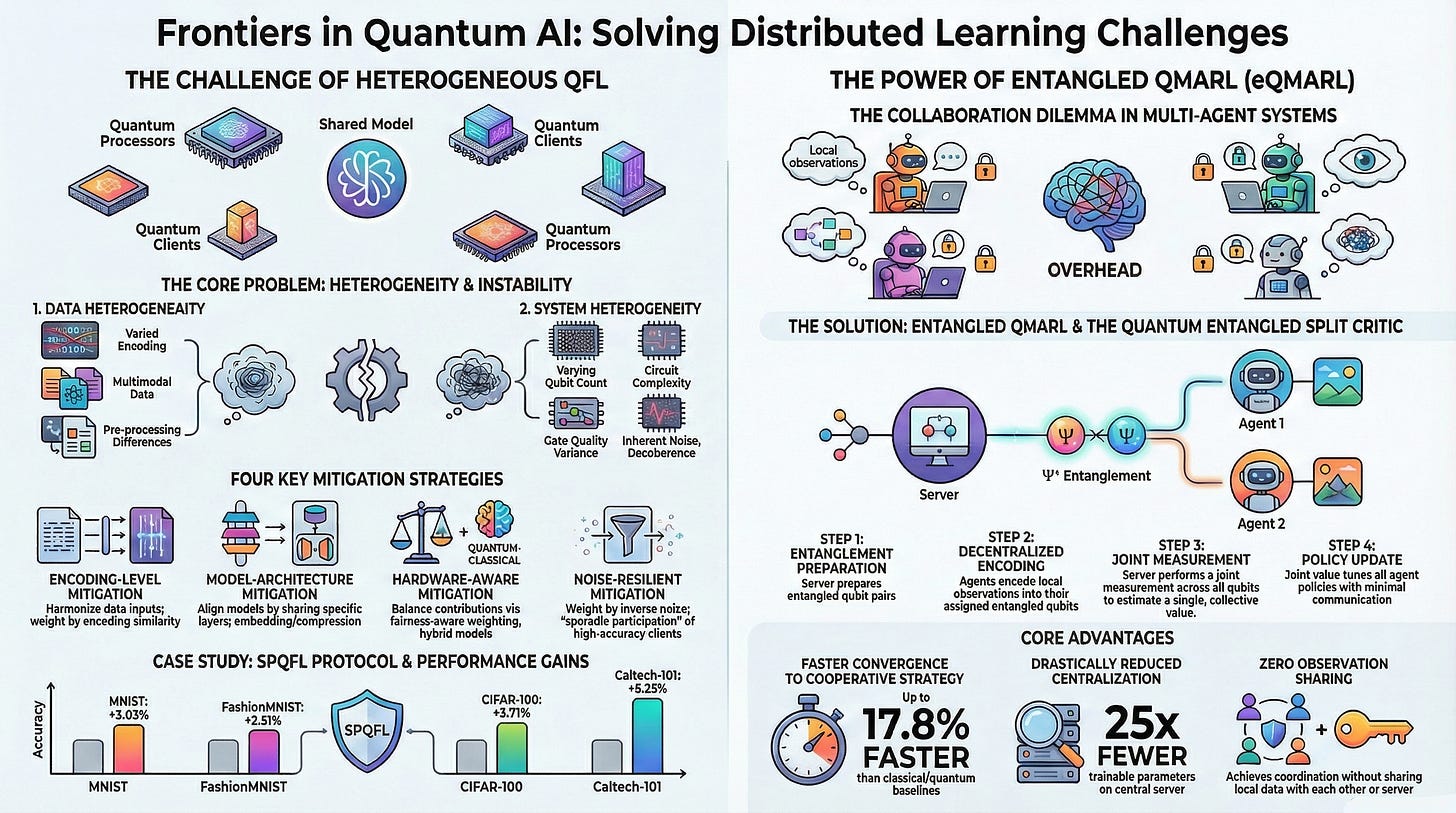

The solution they developed, called Sporadic Personalized Quantum Federated Learning (SPQFL), is elegant in its pragmatism. Instead of trying to force all devices to be identical, it accepts their differences and manages them intelligently. It allows devices to personalize their models for their local data while preventing them from drifting too far from the collective goal. And critically, it includes a quality gate: only devices that meet a certain performance threshold are allowed to contribute to the global model. Noisy, unreliable updates are filtered out automatically.

This isn’t just technical cleverness. It’s a different philosophy of collaboration—one that acknowledges diversity, manages differences, and maintains quality without centralized control.

From Infrastructure to Coordination: The Entanglement Revolution

But the really mind-bending innovation comes in how these researchers used quantum entanglement itself as a coordination mechanism for multi-agent AI systems.

Think about how robots or AI agents typically coordinate. They talk to each other. They share information. They send messages back and forth, consuming bandwidth, revealing their observations, and requiring a central coordinator to make sense of it all. It’s chatty, inefficient, and privacy-invasive.

The eQMARL (Entangled Quantum Multi-Agent Reinforcement Learning) framework does something radically different. Instead of having agents communicate explicitly, it uses quantum entanglement to create an implicit coordination channel. The agents’ decision-making processes are quantum-mechanically linked from the start. When one agent encodes its local observation, that operation shapes the joint quantum state all the agents share, so the eventual joint measurement reflects everyone’s inputs without any agent transmitting its raw observation.

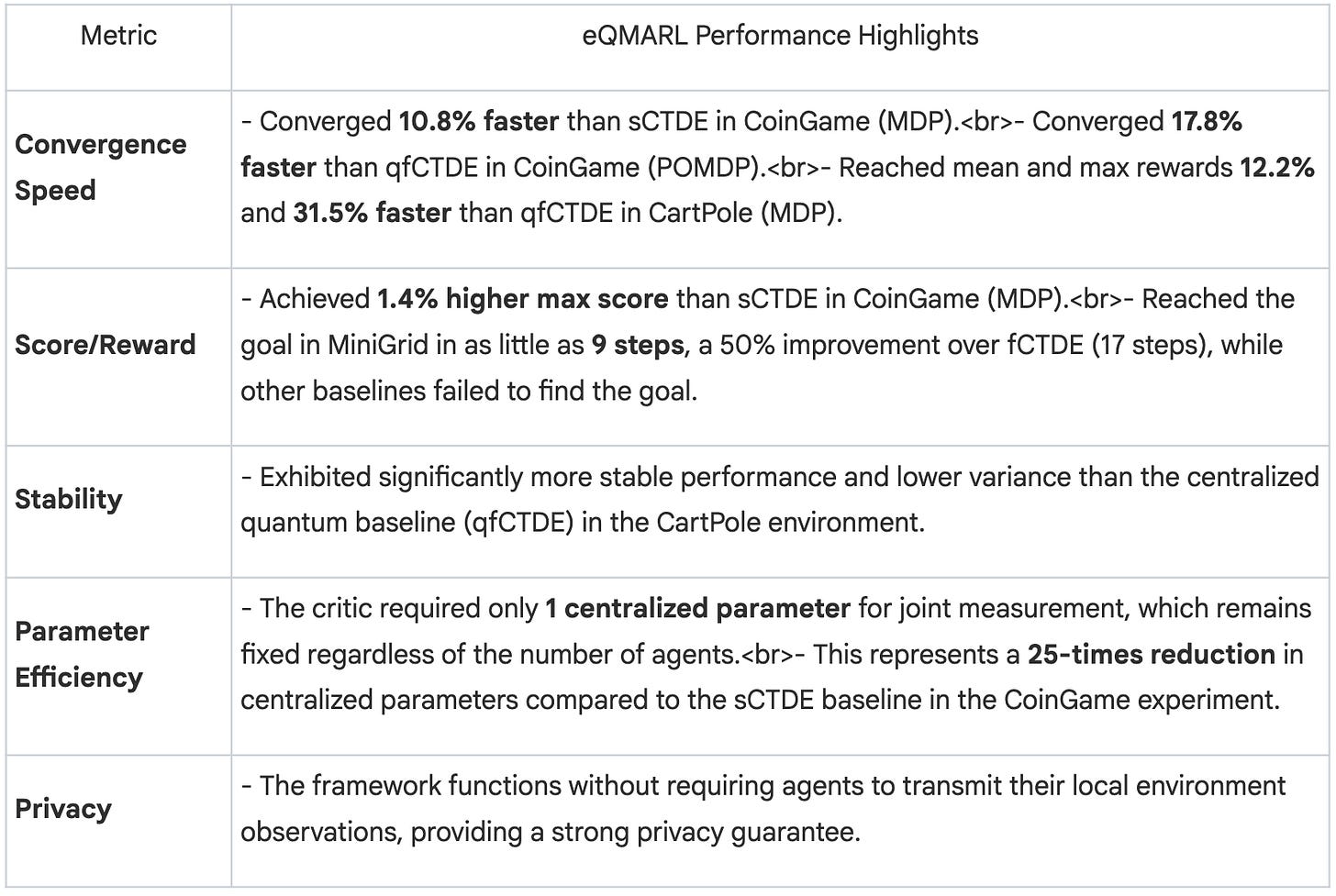

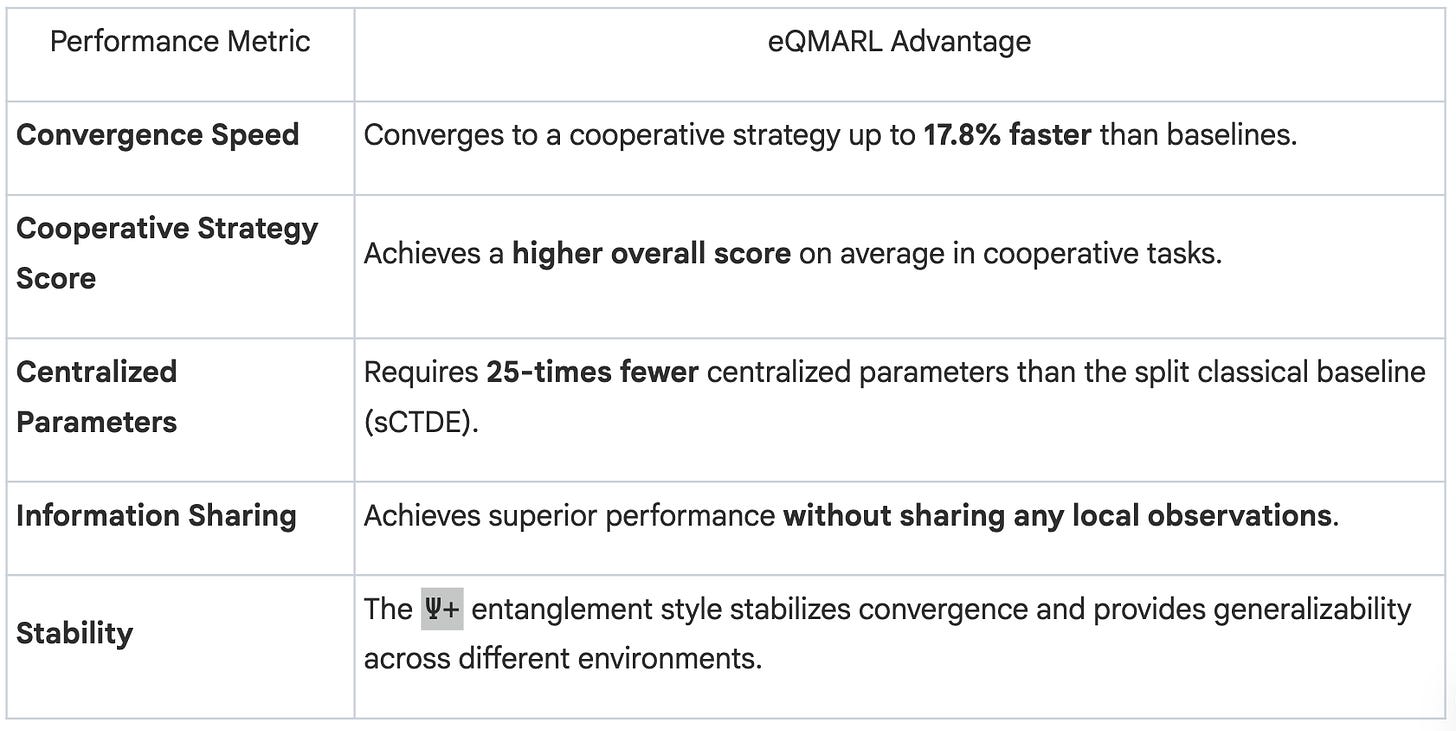

The results are striking. In benchmark tests, this entanglement-based coordination converged up to 17.8% faster, with better stability, and (here’s the kicker) required 25 times fewer parameters in the central server compared to classical approaches. That 25x reduction isn’t just an efficiency gain. It’s a fundamental shift in how the complexity of coordination scales as you add more agents.

What This Actually Means

Let me be clear: we’re not getting quantum-powered AI assistants next year. The technology is still in the research phase. The hardware is expensive, fragile, and limited. There are enormous engineering challenges ahead.

But the implications are worth considering. What these researchers have demonstrated is that it’s possible to build distributed AI systems that:

Keep your data local and private by design, not as an afterthought

Scale efficiently without requiring massive centralized infrastructure

Coordinate implicitly through quantum physics rather than explicit communication

Maintain quality through intelligent filtering rather than authoritarian control

This is a different vision of how AI could work. Not massive data centers owned by tech monopolies, but distributed networks where intelligence emerges from collaboration without coercion. Not surveillance machines that require you to surrender your privacy for convenience, but systems designed from the ground up to protect it.

The Bigger Picture

Margaret Atwood once wrote, “In the spring, at the end of the day, you should smell like dirt.” She was talking about gardening, about the importance of being grounded in the physical world even as our minds soar into abstraction. There’s wisdom there for how we think about technology.

We’ve spent decades building increasingly abstract, centralized, disembodied systems. The cloud. The algorithm. The platform. These metaphors distance us from the physical reality of what we’re creating—massive data centers consuming entire power plants’ worth of electricity, surveillance systems that would make dystopian novelists blush, attention-extraction mechanisms optimized to exploit our psychological vulnerabilities.

What’s interesting about this quantum AI research is that it forces us back to physics. You can’t ignore the physical reality of quantum systems—their fragility, their noise, their need for extreme cooling and isolation. You can’t pretend hardware doesn’t matter. You can’t centralize everything when quantum states decohere if you look at them wrong.

This physical constraint becomes a design principle. The limitations of quantum systems force a different architecture—distributed, privacy-preserving, accepting of diversity. The physics guides the ethics, in a sense.

Doris Lessing wrote about the importance of holding multiple contradictory truths simultaneously, of resisting simplistic narratives. The quantum revolution in AI embodies this. It’s both incredibly powerful and incredibly fragile. It’s both cutting-edge theoretical physics and pragmatic engineering. It’s both a breakthrough and a beginning, with enormous challenges still ahead.

What Comes Next

The researchers themselves outline the questions that define the next decade: How do you scale these systems to thousands or millions of devices? How do you manage aggregate errors in federated training loops? How do you guarantee stability when quantum connections are inherently fragile?

These aren’t just technical questions. They’re questions about what kind of technological future we want to build. Do we double down on centralization, surveillance, and control? Or do we explore architectures that distribute power, preserve privacy, and embrace diversity?

The quantum approach won’t solve everything. No technology does. But it offers a proof of concept that different approaches are possible. That intelligence doesn’t require surveillance. That coordination doesn’t require centralization. That we can build systems that work with physics rather than trying to dominate it.

In a world of increasingly dark technological news—algorithmic discrimination, surveillance capitalism, AI-powered misinformation—it’s rare to find research that offers genuine hope. Not the naive hope of techno-optimism, which assumes all innovation is good. But the grounded hope of seeing smart people working on hard problems with both technical sophistication and ethical awareness.

We won’t know for years whether quantum AI fulfills its promise. But the direction matters. And right now, at least some researchers are pointing toward a future where our machines might be both more powerful and more respectful of our humanity.

That’s worth paying attention to.

Link References

eQMARL: Entangled Quantum Multi-Agent Reinforcement Learning for Distributed Cooperation over Quantum Channels, arXiv (2024).

Towards Heterogeneous Quantum Federated Learning: Challenges and Solutions (2025).

STUDY MATERIALS

Briefing

Executive Summary

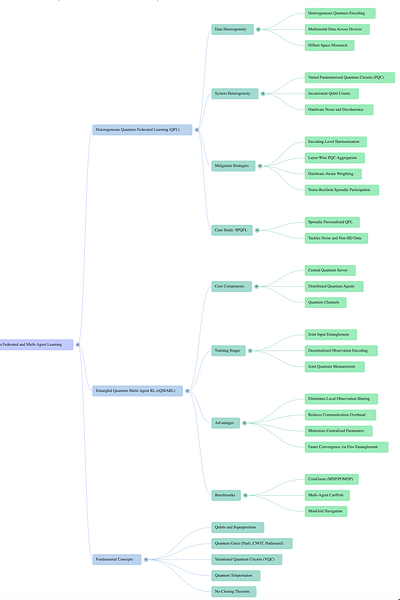

This document synthesizes key findings from two seminal papers advancing the field of distributed quantum machine learning. The research addresses two critical, complementary challenges: achieving robustness in heterogeneous environments and leveraging quantum-native phenomena for efficient collaboration.

The first paper, “Towards Heterogeneous Quantum Federated Learning,” confronts the practical limitations of current Quantum Federated Learning (QFL) frameworks. It argues that the common assumption of client homogeneity is unrealistic and that real-world variances in data distributions, hardware capabilities, and quantum noise levels significantly degrade model performance and stability. The authors systematically categorize these variances into data heterogeneity and system heterogeneity, providing an in-depth analysis of their unique impacts in the quantum domain. To counter these issues, a comprehensive suite of mitigation strategies is proposed, alongside a case study on a Sporadic Personalized QFL (SPQFL) protocol. SPQFL demonstrates significant performance gains by selectively aggregating updates from reliable clients and personalizing models, proving the viability of robust learning in non-ideal quantum networks.

The second paper, “Entangled Quantum Multi-Agent Reinforcement Learning,” introduces a novel paradigm for cooperation in Quantum Multi-Agent Reinforcement Learning (QMARL). It posits that existing QMARL frameworks underutilize quantum mechanics by relying on classical communication for agent coordination. The proposed Entangled QMARL (eQMARL) framework pioneers the use of quantum entanglement as a primary medium for collaboration. By deploying a quantum entangled split critic, the framework couples decentralized agents over a quantum channel, eliminating the need to share private local observations. This approach not only enhances privacy but also drastically reduces classical communication overhead and centralized computational load. Experimental results show that eQMARL converges up to 17.8% faster than state-of-the-art baselines and operates with 25 times fewer centralized parameters, establishing entanglement as a powerful resource for efficient, decentralized cooperation.

Together, these works chart a course toward more practical and powerful distributed quantum intelligence. The first focuses on building resilience against the inevitable imperfections of near-term quantum systems, while the second pioneers a new, fundamentally quantum approach to multi-agent coordination, showcasing pathways to superior performance and efficiency.

--------------------------------------------------------------------------------

Part 1: Addressing Heterogeneity in Quantum Federated Learning (QFL)

Quantum Federated Learning (QFL) merges the privacy-preserving, decentralized training of federated learning with the computational power of quantum computing. However, its practical deployment is hindered by the inherent variability—or heterogeneity—among quantum clients, a challenge largely ignored by existing frameworks.

The Central Challenge of Heterogeneity

Current QFL models often assume that all participating clients are homogeneous, possessing identical quantum hardware, data distributions, and noise characteristics. This assumption breaks down in real-world scenarios, where variances can lead to training instability, slow convergence, and suboptimal model performance. The research identifies and classifies these variances into two primary categories.

1. Data Heterogeneity

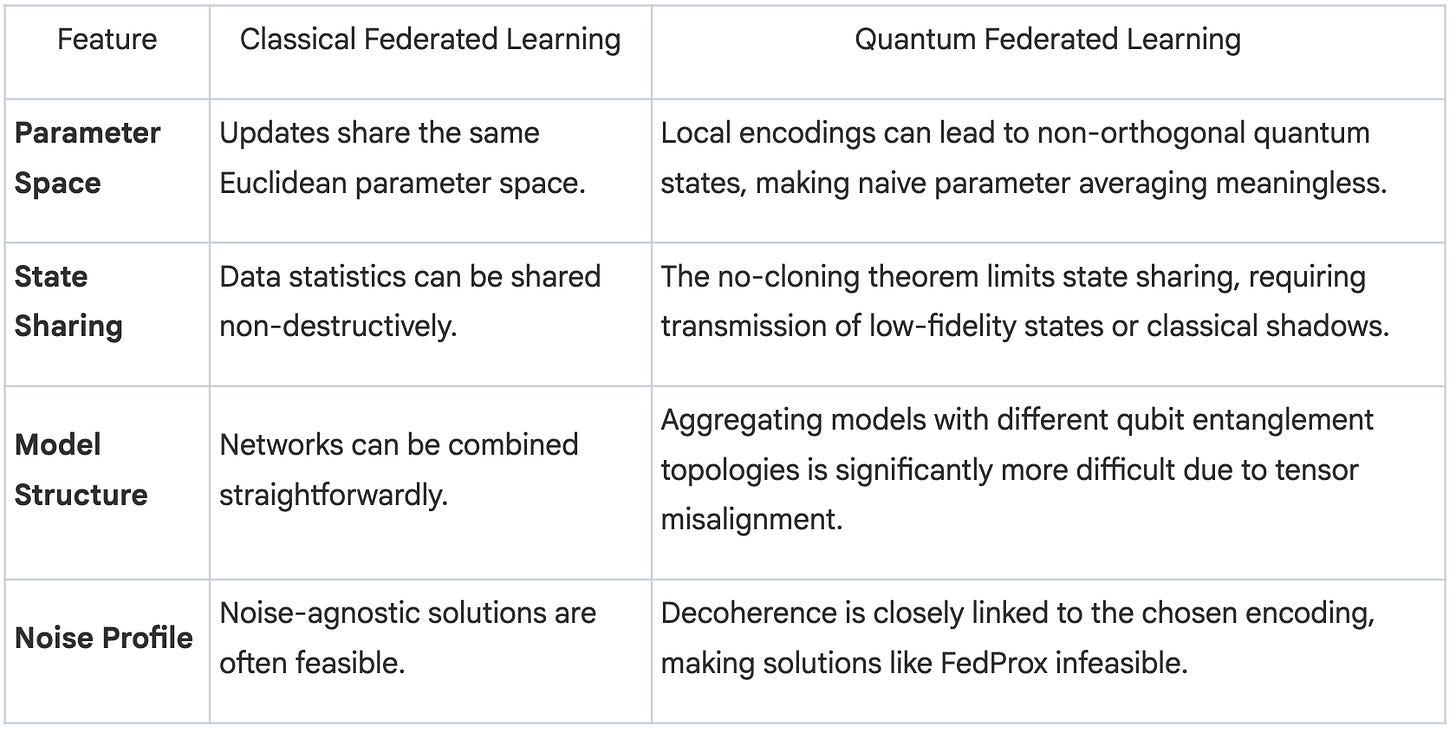

Data heterogeneity in QFL refers to differences in the quantum data representations across clients. This is a more complex issue than in classical federated learning (FL) because it is rooted in the physics of quantum mechanics.

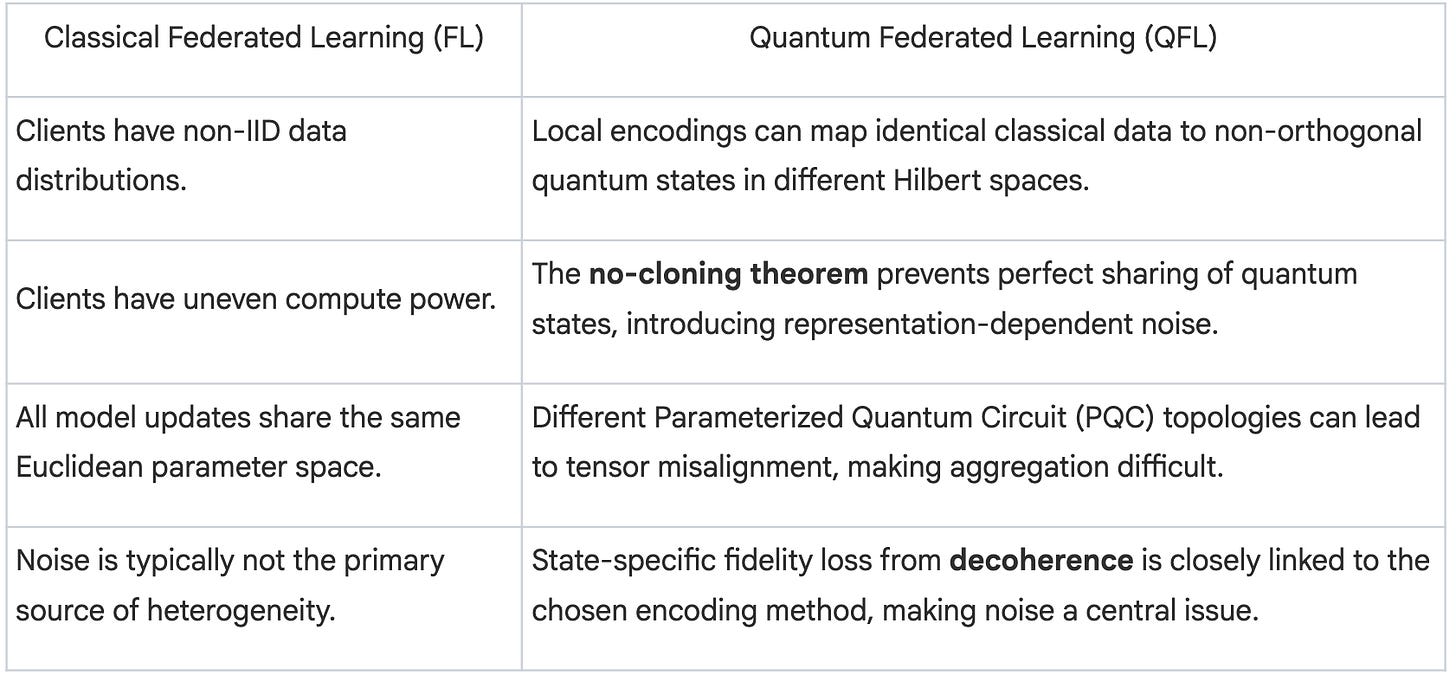

• Classical vs. Quantum Data Heterogeneity: In classical FL, heterogeneity typically involves non-IID data distributions, but all clients operate within a shared Euclidean parameter space. In QFL, the challenges are more fundamental:

◦ Incompatible Bases: Different local encoding methods can map identical classical data to non-orthogonal quantum states, making a naive averaging of parameters theoretically meaningless.

◦ No-Cloning Theorem: This quantum principle prevents the simple sharing of an acquired quantum state, forcing clients to transmit either low-fidelity states or classical summaries, which introduces representation-dependent noise.

◦ Entanglement Mismatch: Different Parameterized Quantum Circuits (PQCs) can entangle qubits according to their unique topology, complicating the aggregation of models that act on different tensor-product factors.

◦ Encoding-Dependent Noise: Decoherence is closely linked to the chosen encoding scheme (amplitude, phase, or basis), making noise-agnostic solutions from classical FL ineffective.

• Sources of Data Heterogeneity:

◦ Heterogeneous Quantum Encoding: Clients may use different methods (basis, amplitude, phase, entanglement) or variations in pre-processing and normalization, leading to inconsistent quantum state representations and divergent feature spaces.

◦ Multimodal Data: Clients may process a mix of data types (quantum states, classical text, images), leading to uneven contributions to the global model and challenges in data fusion and integration. This can skew the global model toward data-rich modalities.
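
As a rough illustration of the encoding problem described above, the sketch below maps the same classical value onto a single qubit using two different schemes and compares the results. The two encoders (`angle_encode`, `phase_encode`) are simplified stand-ins chosen for this example and are assumptions, not the encodings used in the paper.

```python
import numpy as np

def angle_encode(x):
    """Hypothetical client A: rotate |0> about the Y axis by pi * x."""
    theta = np.pi * x
    return np.array([np.cos(theta / 2), np.sin(theta / 2)], dtype=complex)

def phase_encode(x):
    """Hypothetical client B: equal superposition with relative phase pi * x."""
    return np.array([1.0, np.exp(1j * np.pi * x)], dtype=complex) / np.sqrt(2)

def fidelity(psi, phi):
    """Squared overlap |<psi|phi>|^2 between two pure states."""
    return float(np.abs(np.vdot(psi, phi)) ** 2)

x = 0.5                       # identical classical input on both clients
state_a = angle_encode(x)
state_b = phase_encode(x)

overlap = fidelity(state_a, state_b)                   # 1 = identical, 0 = orthogonal
naive_mean_norm = float(np.linalg.norm((state_a + state_b) / 2))
```

For this input the overlap is 0.5: the two clients hold states that are neither the same nor cleanly distinguishable, and the element-wise mean of the two state vectors has norm below 1, so it is not even a valid quantum state without further processing.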

2. System Heterogeneity

System heterogeneity concerns the physical and architectural variances in the quantum hardware used by different clients.

• Heterogeneous PQC Architecture: Clients with varying hardware resources (qubit count, coherence times, gate quality) may employ PQCs of different depths and complexity. This mismatch complicates global aggregation, as parameters from circuits with different expressive capacities do not map directly. It also raises fairness issues, as clients with simpler circuits may contribute less significant updates.

• Varying Qubit Counts: Differences in available qubits directly limit a client’s computational power and its ability to represent complex, high-dimensional data. This can create inconsistencies in parameter size and state dimensions, increasing communication overhead.

• Inherent Quantum Noise: Quantum devices experience unique noise patterns, causing local model updates to be inconsistent. Key sources include:

◦ Decoherence: The loss of quantum state due to environmental interaction varies based on hardware quality, causing clients with higher decoherence to provide noisier, less reliable updates.

◦ Gate Noise: Imperfections in quantum gate operations lead to varying fidelities across devices. Clients with lower fidelity contribute error-prone updates, degrading global model performance.
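
To make the effect of device-dependent noise concrete, the following sketch applies a simple depolarizing channel repeatedly to a single qubit and tracks how much of the original state survives. The depolarizing model, the per-gate error rates, and the circuit depth of 50 are illustrative assumptions; real devices show more structured noise, and decoherence is not modeled separately here.

```python
import numpy as np

def depolarize(rho, p):
    """Depolarizing channel: keep the state with probability 1 - p, else replace it with the maximally mixed state."""
    d = rho.shape[0]
    return (1 - p) * rho + p * np.eye(d) / d

def fidelity_after_gates(p, n_gates):
    """Fidelity with the ideal |0><0| state after n_gates noisy (identity) gates."""
    target = np.array([[1, 0], [0, 0]], dtype=complex)
    rho = target.copy()
    for _ in range(n_gates):
        rho = depolarize(rho, p)
    return float(np.real(np.trace(target @ rho)))

# Three hypothetical clients with different per-gate error rates, same circuit depth.
error_rates = {"client_a": 0.001, "client_b": 0.01, "client_c": 0.05}
fidelities = {name: fidelity_after_gates(p, n_gates=50) for name, p in error_rates.items()}
```

Under this model the fidelity follows 0.5 + 0.5(1 - p)^k after k gates, so clients running the same circuit on noisier hardware return updates computed from a noticeably more degraded state.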

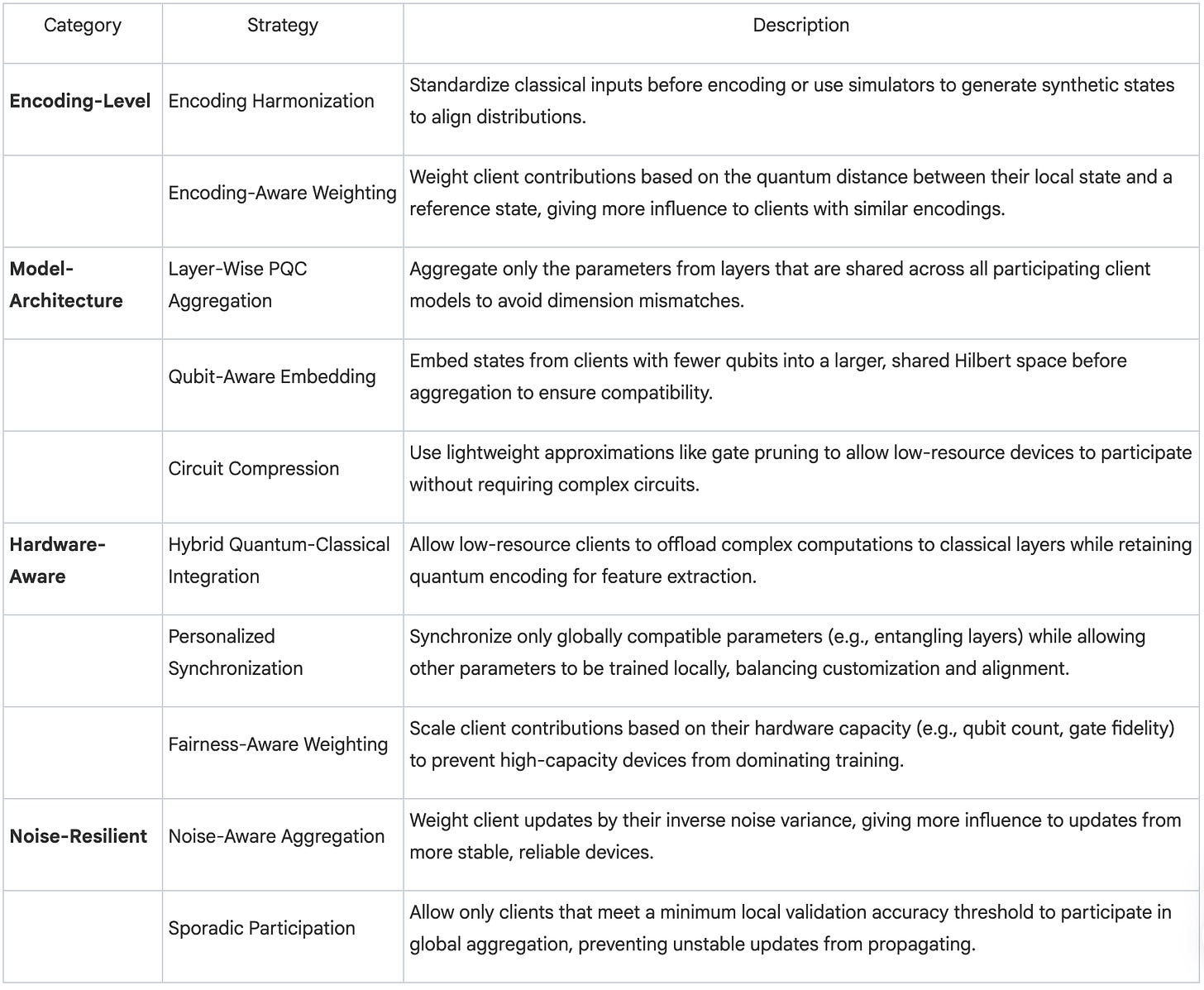

Mitigation Strategies for Heterogeneous QFL

To combat the effects of heterogeneity, the research proposes a four-pronged approach to mitigation.

Case Study: Sporadic Personalized QFL (SPQFL)

A case study featuring the Sporadic Personalized Quantum Federated Learning (SPQFL) protocol demonstrates the practical application of these mitigation principles. SPQFL is designed to jointly tackle quantum noise and non-IID data distributions.

• Core Mechanisms:

1. Sporadic Learning: Before aggregation, each client’s local model is evaluated. Only models that achieve a validation accuracy above a predefined threshold (τ) are sent to the server. This selective participation filters out noisy or suboptimal updates.

2. Personalization: The local update rule includes a regularization term that balances the local model’s parameters with the global model’s parameters, allowing each client to adapt to its unique data while remaining aligned with the collective goal.

• Architecture Overview: As illustrated in the SPQFL architecture diagram, distributed quantum clients train local Quantum Neural Network (QNN) models. These models use PQCs to process encoded data. The updated parameters (ω_{n,k}) are then selectively sent to a central quantum server for global aggregation.

• Performance: SPQFL was benchmarked against state-of-the-art methods (QNN, QCNN, QFL, PQFL, wpQFL) across four datasets (MNIST, Fashion-MNIST, CIFAR-100, Caltech-101).

◦ The results show that SPQFL consistently outperforms existing approaches in both accuracy and convergence speed.

◦ Compared to a standard QFL baseline, SPQFL improved accuracy by 3.03% on MNIST, 2.51% on Fashion-MNIST, 3.71% on CIFAR-100, and 6.25% on Caltech-101.

◦ It also achieved a 1.6% accuracy improvement over the personalized QFL (PQFL) approach across all datasets.
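
The two core mechanisms can be sketched classically. The code below is a minimal stand-in, not the authors’ implementation: it uses logistic regression in place of a QNN, a proximal penalty (lam/2)·‖w − w_global‖² as one plausible form of the regularization term, and additive Gaussian noise as a crude proxy for quantum noise. All function names and default values are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def accuracy(w, X, y):
    """Fraction of correct binary predictions (labels in {0, 1})."""
    return float(np.mean((sigmoid(X @ w) > 0.5) == (y == 1)))

def personalized_update(w_global, X, y, lam=0.1, lr=0.5, steps=25, noise=0.0, rng=None):
    """Personalization: local logistic loss plus (lam/2) * ||w - w_global||^2.

    The penalty lets the client adapt to its own data while staying near the global model.
    `noise` perturbs the result as an assumed stand-in for device noise (requires `rng`).
    """
    w = w_global.copy()
    for _ in range(steps):
        grad = X.T @ (sigmoid(X @ w) - y) / len(y) + lam * (w - w_global)
        w -= lr * grad
    if noise > 0:
        w = w + noise * rng.normal(size=w.shape)
    return w

def sporadic_aggregate(w_global, local_models, val_accs, tau):
    """Sporadic learning: average only models with validation accuracy >= tau.

    If no client passes the threshold, the previous global model is kept.
    Returns the new global model and the number of accepted updates.
    """
    kept = [w for w, acc in zip(local_models, val_accs) if acc >= tau]
    if not kept:
        return w_global, 0
    return np.mean(kept, axis=0), len(kept)
```

In a training round, each client would call `personalized_update`, score the result with `accuracy` on held-out local data, and the server would pass the models and scores to `sporadic_aggregate` with the chosen threshold τ.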

--------------------------------------------------------------------------------

Part 2: Entanglement for Cooperation in Quantum Multi-Agent Reinforcement Learning (QMARL)

While QFL focuses on collaborative model training, Quantum Multi-Agent Reinforcement Learning (QMARL) applies quantum principles to decision-making in decentralized, multi-agent environments. A key challenge in QMARL is fostering cooperation among agents, which has historically relied on classical communication channels for sharing information. The eQMARL framework proposes a paradigm shift, using quantum entanglement as a direct medium for coordination.

The eQMARL Framework

The Entangled QMARL (eQMARL) framework is a novel distributed actor-critic architecture that lies at the intersection of centralized training and decentralized execution. It is designed to facilitate agent collaboration over a quantum channel, thereby eliminating the need for agents to share their local environment observations.

• Core Innovation: The Entangled Split Critic: The central component of eQMARL is a quantum critic network that is “split” across all participating agents. Each agent hosts a local branch of the critic, implemented as a Variational Quantum Circuit (VQC). These distributed branches are intrinsically linked via quantum entanglement.

• Architectural Workflow:

1. Input State Entanglement: A trusted central server prepares sets of entangled qubits. The specific type of entanglement (e.g., Bell states) is a key design choice. These entangled qubits are then distributed to the agents via a quantum channel.

2. Decentralized Observation Encoding: Each agent receives its local observation from the environment and encodes this classical information into its assigned (and already entangled) qubits using its local VQC branch. The parameters of this VQC are tuned locally.

3. Centralized Joint Measurement: The agents transmit their processed qubits back to the central server. The server performs a joint quantum measurement across all qubits from all agents simultaneously. This measurement yields a single joint value, which estimates the collective “goodness” of the agents’ current policies. This value is used to calculate a critic loss.

4. Decentralized Policy Update: The server computes a partial gradient of the loss and transmits this small amount of classical information back to the agents, who then update their local critic and actor (policy) networks.

This design minimizes classical communication to just rewards and a single partial gradient value. It also drastically reduces the computational load on the central server, whose only trainable parameter is a single scaling factor for the joint measurement.
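
A toy two-agent simulation can make the forward pass of this workflow more tangible. The sketch below is an assumption-heavy simplification: each agent’s VQC branch is reduced to a single RY rotation whose angle is a local parameter times a scalar observation, the joint measurement is the expectation of Z⊗Z, and the gradient step is omitted. None of the function names come from the eQMARL paper.

```python
import numpy as np

Z = np.array([[1, 0], [0, -1]], dtype=complex)

def ry(angle):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def psi_plus():
    """Step 1: server prepares (|01> + |10>)/sqrt(2); basis order |00>, |01>, |10>, |11>."""
    return np.array([0, 1, 1, 0], dtype=complex) / np.sqrt(2)

def agent_branch(theta, observation):
    """Step 2: an agent encodes its private observation with its local parameter theta."""
    return ry(theta * observation)

def joint_value(state, branches, scale):
    """Step 3: server measures <Z (x) Z> on the returned qubits and applies its one scale parameter."""
    U = np.kron(branches[0], branches[1])   # each agent acts only on its own qubit
    out = U @ state
    zz = np.kron(Z, Z)
    return float(scale * np.real(np.vdot(out, zz @ out)))

# Hypothetical local parameters and observations; the server never sees the observations.
branches = [agent_branch(0.7, 1.0), agent_branch(0.3, 2.0)]
value = joint_value(psi_plus(), branches, scale=1.5)
```

In this simplified setting the joint value works out to −scale·cos(a + b), where a and b are the two agents’ rotation angles. The result depends on both agents’ inputs together, even though the server only handles qubits and one scalar.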

The Role and Impact of Entanglement

Entanglement serves as the cooperative backbone of the eQMARL framework, coupling the agents’ local critic branches without any explicit exchange of local data.

• Comparative Analysis of Entanglement Styles: The framework was tested with four different types of two-qubit Bell state entanglement: Φ+, Φ−, Ψ+, and Ψ−.

◦ Experiments on the CoinGame environment revealed that Ψ+ entanglement consistently delivered the best performance, achieving faster convergence and higher final scores in both fully observable (MDP) and partially observable (POMDP) settings.

◦ In the POMDP setting, Ψ+ was 10.7% faster than the next-best non-entangled approach at reaching a score threshold of 25. The poorer performance of Φ+ and Φ− suggests that opposite-state entanglement (|01⟩ and |10⟩) provides a more effective coupling mechanism than same-state entanglement (|00⟩ and |11⟩) for this task.

• Implicit Collaboration: By entangling the input qubits, the local encoding process of one agent influences the joint measurement outcome in a way that is correlated with the actions of all other agents. This creates an implicit coordination channel that allows agents to learn a cooperative strategy without ever seeing each other’s observations.
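
The distinction between same-state and opposite-state entanglement can be checked directly by writing out the four Bell states and computing their measurement correlations. This is a plain linear-algebra sketch; it illustrates what the labels mean and does not by itself explain why Ψ+ performed best in the experiments.

```python
import numpy as np

SQRT2 = np.sqrt(2)

# Basis order: |00>, |01>, |10>, |11>
BELL_STATES = {
    "phi_plus":  np.array([1, 0, 0, 1], dtype=complex) / SQRT2,   # |00> + |11>
    "phi_minus": np.array([1, 0, 0, -1], dtype=complex) / SQRT2,  # |00> - |11>
    "psi_plus":  np.array([0, 1, 1, 0], dtype=complex) / SQRT2,   # |01> + |10>
    "psi_minus": np.array([0, 1, -1, 0], dtype=complex) / SQRT2,  # |01> - |10>
}

PAULI_Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULI_X = np.array([[0, 1], [1, 0]], dtype=complex)

def correlation(state, op_a, op_b):
    """Expectation value of op_a on qubit A times op_b on qubit B."""
    return float(np.real(np.vdot(state, np.kron(op_a, op_b) @ state)))

zz = {name: correlation(s, PAULI_Z, PAULI_Z) for name, s in BELL_STATES.items()}
xx = {name: correlation(s, PAULI_X, PAULI_X) for name, s in BELL_STATES.items()}
```

The Z⊗Z correlation is +1 for Φ+ and Φ− (the two qubits always agree) and −1 for Ψ+ and Ψ− (they always disagree), which is the same-state versus opposite-state split described above. The X⊗X correlation then separates the plus variants (+1) from the minus variants (−1).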

Performance and Efficiency Gains

eQMARL was benchmarked against three state-of-the-art baselines: a fully centralized classical critic (fCTDE), a split classical critic (sCTDE), and a fully centralized quantum critic (qfCTDE). The experiments were conducted across three distinct multi-agent environments: CoinGame, CartPole, and MiniGrid.

--------------------------------------------------------------------------------

Conclusion and Future Directions

The research detailed in this briefing marks significant progress toward realizing practical, large-scale distributed quantum machine learning systems. By tackling the distinct but related challenges of heterogeneity and inter-agent cooperation, these studies provide both foundational robustness and a vision for advanced, quantum-native functionality.

• The work on heterogeneous QFL establishes a clear framework for understanding and mitigating the real-world variances that plague near-term quantum devices. The proposed mitigation strategies and the success of the SPQFL protocol offer a tangible pathway to building QFL systems that are resilient to noise, hardware differences, and data imbalances.

• The eQMARL framework represents a paradigm shift in multi-agent learning, demonstrating for the first time that quantum entanglement can be harnessed as a powerful and efficient resource for coordination. By eliminating the need for observation sharing and minimizing classical communication, eQMARL offers a scalable, private, and high-performing solution for complex cooperative tasks.

Several open research topics emerge from these findings, highlighting the next frontiers for the field:

• Scalability and Robustness: Future work must develop more adaptable and noise-resilient algorithms that can scale to large quantum networks while maintaining efficiency, privacy, and performance.

• Advanced Error Mitigation: Integrating advanced quantum error correction codes and error-aware learning algorithms directly into distributed frameworks is crucial for mitigating not only hardware-level noise but also aggregate errors that arise during federated training.

• Quantum Network Dynamics: Research is needed to understand how unique quantum network phenomena—such as decoherence-induced latency and entanglement generation failures—affect the stability and performance of distributed learning systems.

• Hardware and Simulation Overheads: The computational complexity of simulating large quantum systems on classical hardware remains a bottleneck. Continued progress in both quantum hardware and simulation techniques is essential for validating these frameworks at scale.

Quiz & Answer Key

Answer each of the following questions in 2-3 sentences based on the provided source context.

1. What is Quantum Federated Learning (QFL), and what primary challenge does it aim to solve compared to conventional Quantum Machine Learning (QML)?

2. The “Towards Heterogeneous QFL” paper divides heterogeneity into two main categories. What are they, and what does each one generally refer to?

3. Explain the concept of a split quantum critic as implemented in the eQMARL framework. How does it facilitate cooperation between agents?

4. What is inherent quantum noise in QFL systems, and what are its three primary sources mentioned in the text?

5. How does the eQMARL framework reduce classical communication overhead and centralized computational burden compared to baseline models?

6. According to the SPQFL case study, what two design choices contribute to its superior performance in accuracy and convergence speed?

7. What is the fundamental difference between data heterogeneity in classical Federated Learning (FL) and Quantum Federated Learning (QFL)?

8. In the eQMARL experiments, which Bell state entanglement scheme generally resulted in better performance, and what does this suggest about the effectiveness of same-state versus opposite-state entanglement?

9. Describe the “Sporadic Participation” mitigation strategy for noise-resilient QFL. How does it work to minimize error propagation?

10. What is a Variational Quantum Circuit (VQC), and what are its main components as described in the eQMARL paper?

--------------------------------------------------------------------------------

Answer Key

1. What is Quantum Federated Learning (QFL), and what primary challenge does it aim to solve compared to conventional Quantum Machine Learning (QML)? Quantum Federated Learning (QFL) is a machine learning approach that combines quantum computing with federated learning to perform tasks across distributed networks. It addresses the significant privacy concerns and high communications overhead of conventional QML, where data is typically collected and processed on a central server. By training models locally on distributed quantum devices and aggregating only the model parameters, QFL maintains data privacy and reduces data transfer needs.

2. The “Towards Heterogeneous QFL” paper divides heterogeneity into two main categories. What are they, and what does each one generally refer to? The two categories are data heterogeneity and system heterogeneity. Data heterogeneity refers to differences in the representations of quantum data between clients, such as variations in quantum encoding methods or data distributions. System heterogeneity refers to variances in the quantum hardware between clients, including differences in qubit count, noise levels, coherence times, and gate fidelities.

3. Explain the concept of a split quantum critic as implemented in the eQMARL framework. How does it facilitate cooperation between agents? The split quantum critic in eQMARL is a joint value function estimator that is spread across multiple agents as a split neural network, with each agent’s local Variational Quantum Circuit (VQC) serving as a branch. It facilitates cooperation by coupling the agents’ localized observation encoders using entangled input qubits over a quantum channel. This allows agent policies to be tuned through joint value estimation via joint quantum measurements, eliminating the need for agents to explicitly share their local observations.

4. What is inherent quantum noise in QFL systems, and what are its three primary sources mentioned in the text? Inherent quantum noise is the collection of errors and irregularities that occur in quantum systems and vary between quantum devices, causing local model updates to become inconsistent. The three primary sources mentioned are decoherence, which is the loss of a quantum state due to environmental interactions; gate noise, caused by hardware faults and control imperfections during qubit calculations; and measurement irregularities.

5. How does the eQMARL framework reduce classical communication overhead and centralized computational burden compared to baseline models? The eQMARL framework reduces classical communication overhead by using a quantum channel and entanglement to couple agents, eliminating the need to send local environment observations or intermediate neural network activations over classical channels. It reduces the centralized computational burden because the joint value is estimated via a joint quantum measurement that relies on only a single learned scaling parameter on the central server, which remains fixed regardless of the number of agents.

6. According to the SPQFL case study, what two design choices contribute to its superior performance in accuracy and convergence speed? The two key design choices are a regularization-based approach and a sporadic (occasional) learning mechanism. The regularization penalizes overfitting in local quantum circuits and stabilizes training for heterogeneous clients. The sporadic learning mechanism prevents noisy or sub-par updates from corrupting the global model by only allowing local models to be sent to the server if their validation accuracy is above a predetermined threshold.

7. What is the fundamental difference between data heterogeneity in classical Federated Learning (FL) and Quantum Federated Learning (QFL)? In classical FL, clients have non-IID data but all model updates share the same Euclidean parameter space. In contrast, data heterogeneity in QFL stems from the physics of Hilbert space, where local encodings can convert identical classical data into non-orthogonal quantum states, making a naive parameter average theoretically meaningless. Additionally, QFL heterogeneity is affected by quantum-specific issues like the no-cloning theorem, representation-dependent noise, and decoherence linked to the encoding method.

8. In the eQMARL experiments, which Bell state entanglement scheme generally resulted in better performance, and what does this suggest about the effectiveness of same-state versus opposite-state entanglement? The Ψ+ entanglement scheme consistently demonstrated the best performance across both MDP and POMDP dynamics in the CoinGame environment. The superior performance of Ψ+ (an opposite-state entanglement of |01⟩ and |10⟩) compared to the poorer performance of Φ+ and Φ- (same-state entanglements of |00⟩ and |11⟩) suggests that opposite-state entanglement results in more effective coupling of agents.

9. Describe the “Sporadic Participation” mitigation strategy for noise-resilient QFL. How does it work to minimize error propagation? Sporadic participation is a strategy where only clients that fulfill a specific validation criterion, such as local accuracy meeting a threshold τ, are permitted to join the aggregation round. This adaptive participation prevents unstable updates from clients experiencing noise spikes from being included in the global model. By filtering out unreliable contributions, this method minimizes error propagation throughout the QFL system.

10. What is a Variational Quantum Circuit (VQC), and what are its main components as described in the eQMARL paper? A Variational Quantum Circuit (VQC) is a hybrid quantum-classical model used as a branch of the split critic in the eQMARL framework. As described, it consists of L cascaded layers, each containing three main operator components: a trainable variational layer for parameterized Pauli-axis rotations, a non-trainable circular entanglement layer to bind neighboring qubits, and a trainable encoding layer to map classical features into a quantum state.

Essay Questions

Answer the following questions in a detailed essay format, drawing upon the comprehensive information provided in the source context. No answers are provided for this section.

1. Discuss the concept of heterogeneity in Quantum Federated Learning (QFL) as presented in the source material. Contrast the challenges of data heterogeneity and system heterogeneity, providing specific examples for each, and explain why mitigation strategies from classical FL are often inadequate.

2. Detail the architecture and workflow of the proposed Entangled QMARL (eQMARL) framework. Your explanation should cover the roles of the central server and decentralized agents, the process of joint input entanglement, the design of the decentralized split critic, and the function of centralized joint measurement.

3. The “Towards Heterogeneous QFL” paper outlines four categories of mitigation strategies. Choose two of these categories (Encoding-Level, Model-Architecture, Hardware-Aware, Noise-Resilient), and for each, describe two specific techniques mentioned in the text, including any mathematical formulas provided.

4. Analyze the experimental results from the eQMARL paper’s CoinGame experiments. Compare the performance of eQMARL-Ψ+ against the classical (fCTDE, sCTDE) and quantum (qfCTDE) baselines in both MDP and POMDP settings, focusing on convergence speed and overall score. What do these results imply about the “quantum advantage” in this context?

5. Based on the “Conclusion and Open Research Topics” section of the QFL paper, identify and elaborate on the three key open research areas for improving heterogeneous QFL frameworks. For each area, explain the core problem and the direction future research should take.

Glossary of Key Terms

Actor-Critic Architecture

A popular approach in multi-agent reinforcement learning that tunes policies (actors) using an estimator that evaluates how good or bad the policy is at any given state (the critic).

Amplitude Encoding

A method of quantum encoding that represents classical data in the amplitudes of quantum states for compact storage.

Bell States

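A set of four specific, maximally entangled two-qubit quantum states (Φ+, Φ−, Ψ+, Ψ−). Φ+ and Φ− are superpositions of |00⟩ and |11⟩, while Ψ+ and Ψ− are superpositions of |01⟩ and |10⟩.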

Centralized Training with Decentralized Execution (CTDE)

A multi-agent reinforcement learning framework where decentralized agent policies learn using a joint value function at training time, often deployed on a central server, while agents interact with the environment independently during execution.

Data Heterogeneity (in QFL)

Refers to differences in the representations of quantum data between clients in a QFL network. This can arise from different quantum encoding techniques, data distributions, or hardware variations affecting data processing.

Decoherence

A process in quantum systems where qubits lose their quantum state (superposition or entanglement) as a result of environmental interactions. It is a primary source of inherent quantum noise.

Entangled QMARL (eQMARL)

A novel, distributed actor-critic framework for QMARL that facilitates agent collaboration over a quantum channel. It uses a quantum entangled split critic to eliminate local observation sharing and reduce classical communication overhead.

Entanglement

A quantum mechanical property where the states of two or more qubits become intrinsically linked, regardless of their physical separation. If a combined quantum system’s state cannot be separated into a tensor product of its individual components, it is said to be entangled.

Federated Averaging (FedAvg)

A method used in federated learning for model aggregation where trainable parameters from local models are extracted, translated to classical data, and averaged to create an updated global model.

Gate Noise

A source of quantum noise introduced by hardware faults, control imperfections, and external interference during the operation of quantum gates on qubits.

Heterogeneity (in QFL)

The inherent variability that exists in real-world quantum systems, which is classified into two types: data heterogeneity (variances in quantum data distributions and encoding) and system heterogeneity (variances in quantum hardware).

Inherent Quantum Noise

Errors that occur in quantum systems due to quantum decoherence, gate noise, and measurement irregularities, which vary between quantum devices and make local model updates inconsistent.

Joint Quantum Measurement

A process in eQMARL where a centralized server performs a measurement across all qubits from all agents in the system simultaneously. This is used to estimate a joint value for the locally-encoded observations.

Markov Decision Process (MDP)

A mathematical framework for modeling decision-making in environments with full information, where an agent’s observations represent the complete state of the environment.

Noisy Intermediate-Scale Quantum (NISQ) Devices

The current generation of quantum devices, which have a limited number of qubits and are susceptible to decoherence and other forms of noise.

Parameterized Quantum Circuit (PQC)

A quantum model comprised of quantum gates controlled by adjustable parameters, typically implemented as rotations. By altering these parameters, PQCs can process data, explore Hilbert spaces, and extract patterns.

Partially Observable Markov Decision Process (POMDP)

A mathematical framework for modeling decision-making in environments with partial information, where the full state is hidden and agents receive local observations that may not represent the complete environment state.

Pauli Gates (X, Y, Z)

A set of fundamental single-qubit quantum gates. The Pauli-X gate is a quantum variant of the NOT gate, and all three are used in parameterized rotations (RX, RY, RZ).

Quantum Bit (Qubit)

The fundamental unit of quantum computation. A qubit can exist in a superposition of 0 and 1 simultaneously, and its state is represented as a 2-dimensional unit vector in a complex Hilbert space.

Quantum Channel

A communication channel that allows for the direct transfer of quantum states, for example through quantum teleportation. This preserves quantum coherence but requires consistent entanglement distribution and high-fidelity connections.

Quantum Encoding

The process of transforming classical data into quantum states using quantum gates for computation. Common methods include basis, amplitude, phase, and entanglement encoding.

Quantum Federated Learning (QFL)

An approach that combines quantum computing with federated learning to perform machine learning tasks across distributed networks, enabling decentralized model training while maintaining data privacy.

Quantum Gate

A basic reversible operation that alters the state of one or more qubits. Examples include Pauli-X, Hadamard (for superposition), and CNOT (for entanglement).

Quantum Machine Learning (QML)

A field that integrates quantum physics with machine learning techniques, leveraging quantum phenomena like superposition and entanglement to process complex data at high speeds.

Quantum Multi-Agent Reinforcement Learning (QMARL)

A variant of QRL that applies quantum computing to scenarios with multiple learning agents, with potential synergies between decentralized cooperation and quantum entanglement.

Quantum Neural Network (QNN)

A quantum model where quantum layers, often built with PQCs, replace classical layers to learn complex patterns.

Quantum Reinforcement Learning (QRL)

A class of quantum machine learning for decision-making that exploits the performance and data encoding enhancements of quantum computing.

Split Quantum Critic

A key component of the eQMARL framework, where a joint value function estimator (the critic) is implemented as a quantum split neural network. Each agent’s local VQC serves as a branch, and the branches are coupled via entangled input qubits over a quantum channel.

Sporadic Personalized Quantum Federated Learning (SPQFL)

A proposed QFL protocol designed to jointly tackle quantum noise and non-IID data distributions. It uses a sporadic participation mechanism and regularization-based personalization to improve model performance.

Superposition

A fundamental property of quantum mechanics where a quantum system, such as a qubit, can exist in a combination of multiple states (e.g., both 0 and 1) at the same time.

System Heterogeneity (in QFL)

Refers to variances in quantum hardware between clients, such as differences in qubit count, coherence times, error rates, and gate fidelities, impacting local training performance.

Variational Quantum Circuit (VQC)

A hybrid quantum-classical model that uses parameterized quantum gates optimized via classical methods like gradient descent. In the context of the provided sources, it is used to build quantum neural networks and the branches of the split critic.
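
Several of the entries above (qubit, superposition, Pauli rotations, PQC, VQC) can be tied together in a small simulation. What follows is a minimal sketch under stated assumptions, not code from the source papers: a single simulated qubit, one RY rotation that encodes a feature, one trainable RY rotation, and a classical gradient-descent loop that uses the parameter-shift rule. The function names, learning rate, and target value are illustrative choices.

```python
import math

def ry(theta):
    """Matrix of the single-qubit rotation RY(theta) about the Pauli-Y axis."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]

def apply(gate, state):
    """Apply a 2x2 gate to a single-qubit state [amp0, amp1]."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def expect_z(x, theta):
    """Encode feature x with RY(x), apply trainable RY(theta), return <Z>."""
    state = apply(ry(theta), apply(ry(x), [1.0, 0.0]))  # start in |0>
    return state[0] ** 2 - state[1] ** 2  # P(0) - P(1); amplitudes are real here

def parameter_shift_grad(x, theta):
    """Gradient of <Z> with respect to theta via the parameter-shift rule."""
    plus = expect_z(x, theta + math.pi / 2)
    minus = expect_z(x, theta - math.pi / 2)
    return 0.5 * (plus - minus)

def train(x, target, theta=0.0, lr=0.2, steps=200):
    """Classical gradient descent on the squared error (<Z> - target)^2."""
    for _ in range(steps):
        err = expect_z(x, theta) - target
        theta -= lr * 2 * err * parameter_shift_grad(x, theta)
    return theta

# Illustrative values: feature x = 0.3, desired expectation 0.5.
theta_star = train(x=0.3, target=0.5)
print(round(expect_z(0.3, theta_star), 4))
```

The quantum part only evaluates the circuit; the optimizer is entirely classical, which is what "hybrid quantum-classical" means in the VQC entry.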

Timeline of Main Events

The field of distributed quantum machine learning is not defined by a single breakthrough but by a continuous progression of evolving challenges and the innovative solutions developed to overcome them. Each solution, while powerful, tends to reveal a deeper, more complex problem lying beneath. This document traces this technological narrative, charting the course from the initial limitations of centralized Quantum Machine Learning (QML), through the intricate problems of hardware and data heterogeneity in Quantum Federated Learning (QFL), to the advanced complexities of fostering cooperation between intelligent quantum agents.

1. The Foundational Challenge: From Centralized QML to Distributed QFL

Quantum Machine Learning (QML) first emerged as a discipline of immense promise, leveraging quantum phenomena such as superposition and entanglement to process complex, large-scale data at unprecedented speeds. By integrating quantum physics with advanced machine learning, QML frameworks offered a new paradigm for computation. However, the conventional, centralized architecture of early QML models presented fundamental barriers to practical deployment.

These limitations effectively stalled the real-world application of QML in scenarios involving sensitive or continuous data processing. The primary problems were:

• Privacy Concerns: In most conventional QML frameworks, data must be collected and processed on a central server. This model raises significant privacy issues, exposing potentially sensitive information to various data-based attacks.

• Communication Overhead: The transfer of large-scale, high-dimensional data from distributed sources to a central processor creates substantial communication overhead. This bottleneck leads to slower overall performance and presents significant scalability challenges.

To address these foundational challenges, researchers developed Quantum Federated Learning (QFL). This promising approach redesigns the learning process, combining the principles of classical federated learning with the power of quantum computing. The core concept of QFL is to perform machine learning tasks across a distributed network of quantum devices, or “clients.” Each client trains a local model on its own data, and only the model parameters—not the raw data—are sent to a central server for aggregation. This method inherently protects data privacy and dramatically reduces communication overhead.

However, once the QFL framework solved the initial problems of privacy and data transfer, its practical implementation uncovered the next major hurdle on the technological timeline: the profound and multifaceted challenge of heterogeneity.

2. The First Major Hurdle: Deconstructing Heterogeneity in QFL

While QFL provided a robust architectural solution to the problems of centralized QML, its deployment in real-world networks revealed a critical new challenge: the inherent and significant differences between quantum clients. Understanding and mitigating this heterogeneity became strategically imperative for the field to advance.

The heterogeneity found in QFL is fundamentally different and far more complex than that in classical Federated Learning (FL). In classical FL, heterogeneity typically arises from non-identical data distributions or varied computational capacities among clients, but all model updates exist within a shared Euclidean parameter space. In contrast, QFL heterogeneity is rooted in the physics of quantum mechanics itself. Because local encodings can map identical classical data to non-orthogonal quantum states, updates from different clients may have incompatible bases in Hilbert space, rendering naïve parameter averaging mathematically meaningless. Furthermore, the no-cloning theorem prevents the perfect sharing of quantum states, forcing clients to transmit classical summaries and introducing representation-dependent noise with no classical equivalent. Aggregating models that act on various tensor-product factors is also significantly more difficult than combining classical networks.

To systematically address this, heterogeneity in QFL can be classified into two primary categories: data heterogeneity and system heterogeneity.

2.1 Data Heterogeneity: The Challenge of Inconsistent Representations

Data heterogeneity in QFL refers to the differences in how quantum data is represented and structured across clients. This makes it exceptionally difficult to align and aggregate local models into a cohesive global model. The primary sources of this challenge include:

1. Heterogeneous Quantum Encoding: Clients may use distinct methods to encode classical data into quantum states, such as basis, amplitude, or phase encoding. Even when clients use the same encoding strategy, differences in data pre-processing or normalization can result in inconsistent quantum state representations. This leads to divergent feature spaces, where local models learn different quantum representations from the same underlying data, complicating the creation of a globally consistent model.

2. Multimodal Data Across Devices: In many real-world scenarios, clients manage varied types of data, or modalities. This can include quantum states and measurement outcomes alongside classical inputs like text and images. This diversity complicates data integration, as modality-specific noise and formatting incompatibilities propagate during global training. Consequently, the global model can become skewed toward clients with richer or higher-quality data, impairing its ability to generalize and increasing both communication and computational costs.

2.2 System Heterogeneity: The Challenge of Hardware Variances

System heterogeneity refers to the physical variances in quantum hardware and computational capabilities among clients in a QFL network. These differences mean that clients cannot perform computations equally, leading to imbalances in training time, update accuracy, and operational capacity. Key sources include:

1. Heterogeneous PQC Architectures: Clients often use Parameterized Quantum Circuits (PQCs)—the quantum analog of neural network layers—with varying depths and structural complexity. These differences are typically dictated by hardware limitations, such as qubit availability, coherence times, and gate quality. A low-resource client may only support a shallow PQC, while a more advanced device can run a deeper, more expressive circuit. This mismatch hampers global aggregation, as parameters learned from circuits with different capacities do not map directly.

2. Varying Number of Qubits: The number of available qubits can differ significantly across client devices. Clients with fewer qubits have lower computational power and a more limited capacity to represent high-complexity data. This variance also creates communication overhead, as differing qubit counts lead to inconsistent parameter sizes and quantum state dimensions across the network.

3. Inherent Quantum Noise: Quantum processors are intrinsically noisy, and each device experiences unique noise patterns. This inconsistency means that local model updates from different clients are of varying quality. The main types of noise are:

◦ Decoherence: The loss of a quantum state due to interactions with the environment. Clients with higher decoherence rates contribute noisier and less reliable parameter updates, slowing global convergence.

◦ Gate Noise: Errors introduced by hardware faults and imperfections in the control of quantum gates. Variances in gate fidelities result in unequal local training quality.

◦ Measurement Irregularities: Discrepancies in the process of extracting classical results from a quantum system add another layer of inconsistency to the updates.

The formal identification and categorization of these heterogeneity challenges spurred the development of a range of targeted mitigation strategies designed to make QFL robust and practical.

3. Countermeasures: The Development of Mitigation Strategies for Heterogeneity

In response to the critical challenge of heterogeneity, researchers have developed a portfolio of mitigation strategies. These techniques are designed to create stability and fairness in QFL networks by intervening at different levels of the learning process, whether at the point of data encoding, within the model architecture, by accounting for hardware differences, or by building in resilience to quantum noise.

3.1 Encoding-Level Mitigations

These strategies address inconsistencies in how data is represented in quantum states.

• Encoding Harmonization: Standardizing classical inputs across all clients before they are encoded into quantum states to align data distributions.

• Encoding-Aware Weighting: Weighting client contributions during aggregation based on the similarity of their quantum state ($\rho_i$) to a global reference state ($\rho_g$), as measured by a quantum distance metric $d(\cdot,\cdot)$: $w_i = \frac{\exp(-\alpha\, d(\rho_i, \rho_g))}{\sum_j \exp(-\alpha\, d(\rho_j, \rho_g))}$.
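
The encoding-aware weighting rule is a softmax over negative distances, which a few lines of code make concrete. This is a sketch under assumptions: the text does not specify the metric d, so the example uses the infidelity between pure states, and the client states, the reference state, and the value of alpha are hypothetical.

```python
import math

def infidelity(psi, phi):
    """Assumed distance d = 1 - |<psi|phi>|^2 between normalized pure states."""
    overlap = sum(a.conjugate() * b for a, b in zip(psi, phi))
    return 1.0 - abs(overlap) ** 2

def encoding_aware_weights(client_states, global_state, alpha=1.0):
    """w_i = exp(-alpha * d_i) / sum_j exp(-alpha * d_j)."""
    scores = [math.exp(-alpha * infidelity(s, global_state))
              for s in client_states]
    total = sum(scores)
    return [s / total for s in scores]

# Hypothetical single-qubit client encodings and a global reference state |0>.
r = 1 / math.sqrt(2)
clients = [
    [1 + 0j, 0j],        # identical to the reference
    [r + 0j, r + 0j],    # |+>, partially overlapping with the reference
    [0j, 1 + 0j],        # |1>, orthogonal to the reference
]
reference = [1 + 0j, 0j]

weights = encoding_aware_weights(clients, reference, alpha=2.0)
print([round(w, 3) for w in weights])
```

Larger alpha concentrates the weight on clients whose encodings sit closest to the reference; alpha = 0 reduces to uniform averaging.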

3.2 Model-Architecture Strategies

These techniques manage structural incompatibilities between the quantum models on different clients.

• Layer-Wise PQC Aggregation: Aggregating the parameters of PQC layer $l$ ($\theta_i^l$) only over the set $C_l$ of clients whose circuit depth is greater than or equal to $l$, which prevents dimension mismatches: $\theta_g^l = \frac{1}{|C_l|} \sum_{i \in C_l} \theta_i^l$.

• Qubit-Aware Embedding: Embedding the quantum state ($\rho_i$) from a client with fewer qubits into a larger, common Hilbert space using a mapping ($U_i$) before aggregation: $\tilde{\rho}_i = U_i \rho_i U_i^\dagger$.

• Circuit Compression: Using lightweight approximations of complex quantum circuits, such as through gate pruning or variational approximation, to allow low-resource devices to participate in training.
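
Layer-wise PQC aggregation from the list above can be sketched as follows. The data layout is an assumption made for illustration: each client is a list of layers, each layer is a list of rotation angles, and all clients use the same number of angles per layer. Layers are 0-indexed in the code.

```python
def layerwise_aggregate(client_layers):
    """Average each PQC layer over the clients whose circuit contains it.

    client_layers[i][l] holds the rotation angles of layer l on client i.
    Global layer l is the mean over C_l, the clients with more than l layers.
    """
    max_depth = max(len(layers) for layers in client_layers)
    global_layers = []
    for l in range(max_depth):
        members = [layers[l] for layers in client_layers if len(layers) > l]
        n_params = len(members[0])
        global_layers.append(
            [sum(m[k] for m in members) / len(members) for k in range(n_params)]
        )
    return global_layers

# Hypothetical clients with circuit depths 1, 2, and 3 (two angles per layer).
clients = [
    [[0.1, 0.2]],
    [[0.3, 0.4], [0.5, 0.6]],
    [[0.5, 0.6], [0.7, 0.8], [0.9, 1.0]],
]
global_model = layerwise_aggregate(clients)
print(global_model)
```

Shallow clients shape only the early layers, while the deepest layer is determined solely by the clients able to run it.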

3.3 Hardware-Aware Mitigations

These solutions account for the physical differences in client hardware capabilities.

• Hybrid Quantum–Classical Integration: Allowing low-resource clients to delegate computationally intensive tasks to classical layers while still using quantum layers for feature extraction.

• Personalized Synchronization: Synchronizing only a subset of globally compatible parameters ($\omega_g^t$) while allowing other parameters ($\omega_i^t$) to be customized locally on each client device, balancing alignment with a regularization factor $\lambda$: $\omega_i^{t+1} = \omega_i^t - \eta\left(g_i^t + \lambda(\omega_i^t - \omega_g^t)\right)$.

• Fairness-Aware Weighting: Scaling client contributions based on their effective hardware capacity, such as qubit count ($q_i$) and gate fidelity ($\phi_i$), to prevent high-capacity devices from dominating the global model: $w_i = \frac{q_i \phi_i}{\sum_j q_j \phi_j}$.
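
The two formulas in this list translate directly into code. The sketch below is illustrative only: the function names, the learning rate, and the sample values are assumptions. Note that, as the formula is written, the capacity weight grows with the product of qubit count and gate fidelity.

```python
def personalized_step(w_local, w_global, grad, lr=0.1, lam=0.5):
    """One update w <- w - lr * (g + lam * (w - w_global)), element-wise."""
    return [wi - lr * (gi + lam * (wi - wg))
            for wi, wg, gi in zip(w_local, w_global, grad)]

def capacity_weights(qubits, fidelities):
    """w_i = q_i * phi_i / sum_j q_j * phi_j."""
    caps = [q * f for q, f in zip(qubits, fidelities)]
    total = sum(caps)
    return [c / total for c in caps]

# With a zero task gradient, the regularizer alone pulls the local
# parameter toward the global one: 1.0 -> 0.95 for lr=0.1, lam=0.5.
print(personalized_step([1.0], [0.0], [0.0]))

# Hypothetical clients: 4 qubits at fidelity 0.99 and 8 qubits at 0.95.
print(capacity_weights([4, 8], [0.99, 0.95]))
```

Setting lam to zero recovers purely local training, while a large lam forces every client back onto the global parameters, so lam is the knob that trades personalization against alignment.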

3.4 Noise-Resilient Strategies

These methods are designed to counteract the destabilizing effects of inconsistent quantum noise.

• Noise-Aware Aggregation: Weighting client updates ($\theta_i$) based on their inverse noise variance ($1/\sigma_i^2$), giving more influence to updates from more stable, less noisy devices: $\theta_g = \frac{\sum_i (1/\sigma_i^2)\,\theta_i}{\sum_i (1/\sigma_i^2)}$.

• Sporadic Participation: Allowing only clients whose local accuracy ($A_i^{(t)}$) meets a minimum performance threshold ($\tau$) in a given training round to participate in the global aggregation: $i \in C^{(t)} \iff A_i^{(t)} \geq \tau$.
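
The two noise-resilient rules compose naturally: filter clients by accuracy first, then average the survivors by inverse noise variance. Combining them in one round is an assumption of this sketch rather than something the text prescribes, and the client values below are hypothetical.

```python
def sporadic_noise_aware_round(updates, accuracies, variances, tau):
    """Keep clients with accuracy >= tau, then inverse-variance average them.

    Returns None when no client passes the threshold, so the caller can
    keep the previous global model for that round.
    """
    selected = [(u, v) for u, a, v in zip(updates, accuracies, variances)
                if a >= tau]
    if not selected:
        return None
    inv = [1.0 / v for _, v in selected]
    total = sum(inv)
    dim = len(selected[0][0])
    return [sum(w * u[k] for w, (u, _) in zip(inv, selected)) / total
            for k in range(dim)]

# Hypothetical round: the third client suffers a noise spike (accuracy 0.4).
updates = [[0.0, 1.0], [1.0, 2.0], [10.0, -5.0]]
accuracies = [0.9, 0.8, 0.4]
variances = [0.1, 0.4, 0.1]

theta_g = sporadic_noise_aware_round(updates, accuracies, variances, tau=0.7)
print(theta_g)
```

The outlier update never reaches the average, and among the remaining clients the one with variance 0.1 counts four times as much as the one with variance 0.4.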

While these strategies have greatly improved the robustness of general QFL systems, the progression of the field has opened a new frontier focused on more specialized applications, giving rise to the next major challenge: facilitating effective cooperation in multi-agent quantum systems.

4. The Next Frontier: The Challenge of Distributed Cooperation in QMARL

As QFL frameworks matured, a specialized and highly promising area emerged: Quantum Multi-Agent Reinforcement Learning (QMARL). This subfield aims to train multiple intelligent agents to collaborate on complex tasks. With this evolution came the next great challenge on the timeline—moving beyond mitigating passive system differences to actively engineering efficient cooperation.

The core challenge in any distributed multi-agent environment is balancing the need for agent coordination against the communication overhead and computational cost required to share information. To learn a group policy, agents must have some awareness of each other’s states or actions. However, constant classical communication can quickly become a bottleneck, negating the speed advantages of quantum computation.

Prior QMARL frameworks have been limited in their approach to this problem. They have predominantly relied on classical methods for coordination, such as:

• Using classical communication channels to transmit local observations.

• Employing shared replay buffers where agents pool their experiences.

• Relying on centralized global networks that process all agent data.

The key critique of these approaches is that they under-utilize the intrinsic quantum resources available in a QMARL setting. Specifically, they treat the quantum components as mere replacements for classical neural networks but fail to leverage the quantum channel and the phenomenon of entanglement as a direct medium for cooperation. This limitation inspired the development of a novel framework designed to harness these uniquely quantum properties to solve the cooperation problem.

5. A Frontier Solution: Leveraging Quantum Entanglement via the eQMARL Framework

The entangled QMARL (eQMARL) framework was proposed as a novel solution to the cooperation challenge, fundamentally redesigning how agents collaborate. Its unique architecture facilitates coordination directly over a quantum channel by leveraging entanglement, thereby eliminating the need for agents to share their local environmental observations.

The core architectural components of the eQMARL framework are:

1. Entangled Split Critic: The framework deploys a joint value function estimator, known as a quantum critic, that is physically spread across the agents. Each agent hosts a local branch of this critic network in the form of a Variational Quantum Circuit (VQC).

2. Joint Input Entanglement: A trusted central server prepares an entangled input state that couples the agents’ local critic branches. This is achieved using a variation of Bell state entanglement, creating a quantum mechanical link between the agents’ VQCs over a quantum channel.

3. Decentralized Observation Encoding: Each agent independently collects its local observation from the environment. It then encodes this observation into its assigned qubits using its local VQC branch. Crucially, the observation itself is never transmitted.

4. Centralized Joint Measurement: The locally-encoded qubits from all agents are sent back to the central server. The server performs a joint measurement across all qubits simultaneously to estimate a single, joint value for the collective observations of all agents.
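
The four components above can be illustrated with a two-agent toy simulation. This is a heavily simplified sketch and not the eQMARL implementation: each agent holds one qubit of a Ψ+ pair, encodes its observation with a single RY rotation (a stand-in for a full VQC branch), and the server measures the joint observable Z⊗Z. All function names are hypothetical.

```python
import math

def ry(theta):
    """Single-qubit rotation RY(theta) as a 2x2 real matrix."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]

def kron(a, b):
    """Kronecker product of two 2x2 matrices (4x4 result)."""
    return [[a[i][j] * b[k][l] for j in range(2) for l in range(2)]
            for i in range(2) for k in range(2)]

def matvec(m, v):
    """Matrix-vector product."""
    return [sum(m[r][c] * v[c] for c in range(len(v))) for r in range(len(m))]

# Basis order |00>, |01>, |10>, |11>; the first qubit belongs to agent A.
# Server-side preparation of the entangled input pair Psi+.
PSI_PLUS = [0.0, 1 / math.sqrt(2), 1 / math.sqrt(2), 0.0]

def encode_locally(obs_a, obs_b):
    """Each agent rotates only its own qubit by its private observation."""
    return matvec(kron(ry(obs_a), ry(obs_b)), PSI_PLUS)

def joint_value(obs_a, obs_b):
    """Server-side joint measurement: expectation of Z (x) Z."""
    state = encode_locally(obs_a, obs_b)
    signs = [1, -1, -1, 1]
    return sum(s * amp ** 2 for s, amp in zip(signs, state))

def marginal_agent_a(obs_a, obs_b):
    """Expectation of Z on agent A's qubit alone (Z (x) I)."""
    state = encode_locally(obs_a, obs_b)
    signs = [1, 1, -1, -1]
    return sum(s * amp ** 2 for s, amp in zip(signs, state))

print(round(joint_value(0.4, 1.1), 4), round(marginal_agent_a(0.4, 1.1), 4))
```

In this toy setting the joint expectation works out to −cos(a + b), so it depends on both observations, while each agent's qubit measured on its own gives zero regardless of the angles. That is the sense in which the coupling lives in the joint measurement rather than in any message exchanged between agents.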

The impact of this architecture is transformative. It dramatically reduces classical communication overhead, as only minimal information (rewards and partial gradients) is transmitted classically. It also minimizes the computational burden on the central server and, most importantly, eliminates the need to share local observations, enhancing both privacy and efficiency. This design represents a paradigm shift from using classical communication for coordination to leveraging the physical properties of quantum mechanics itself.

6. Validating the Frontier: Performance and Implications of Entangled Cooperation

To be considered a viable solution, the theoretical advantages of the eQMARL framework required empirical validation against established baselines. Its performance was tested in various multi-agent environments, including CoinGame, CartPole, and MiniGrid, under conditions of both full information (Markov Decision Process, or MDP) and partial information (Partially Observable MDP, or POMDP).

The key experimental findings demonstrate a clear performance advantage for eQMARL when compared to its baselines: a split classical framework (sCTDE) and a fully centralized quantum framework (qfCTDE). The results are summarized below.

These results validate that leveraging entanglement as a medium for cooperation is not only feasible but also highly effective. The eQMARL framework demonstrates that agents can learn a superior cooperative strategy faster and more efficiently, without the privacy and overhead costs associated with classical coordination methods.

7. Conclusion: The Trajectory of Challenges and Future Research Directions

The developmental trajectory of distributed quantum machine learning is a clear and logical progression of problem-solving. The initial privacy and communication overhead of centralized QML led to the creation of the QFL architecture. The practical deployment of QFL then revealed the deep-seated challenge of data and system heterogeneity, prompting the development of a suite of targeted mitigation strategies. Finally, the push toward more advanced applications like multi-agent systems introduced the problem of efficient cooperation, which was addressed by pioneering the use of quantum entanglement in frameworks like eQMARL.

This journey from one challenge to the next highlights the field’s dynamic nature and points toward the next set of hurdles on the horizon. Based on the limitations of current techniques, several open research topics will define the future of distributed quantum learning:

• Scalability and Robustness: There is a pressing need for more adaptable and noise-resilient algorithms that can maintain learning efficiency in large-scale quantum networks with diverse clients, while preserving privacy and communication efficiency.

• Advanced Error Mitigation: Future QFL frameworks must integrate sophisticated quantum error correction with innovative error-aware learning algorithms to address not only hardware-level errors but also the aggregate errors that arise during federated training.

• Impact of Quantum Network Dynamics: Quantum networks are subject to unique dynamics, such as decoherence-induced latency and entanglement generation failures. Investigating how these quantum-specific network properties affect the stability and performance of QFL systems is critical for designing more robust architectures.

Cast of Characters

1. Introduction: The Stage and the Saga

The emerging field of distributed Quantum Machine Learning (QML) is a new frontier, a complex and exciting stage populated by a fascinating cast of concepts, challenges, and technologies. This guide serves as a dramatis personae for this unfolding technological narrative, defining the key players—the heroes, villains, and fundamental forces—that are shaping its plot. We will explore two primary sagas: the quest for robust Quantum Federated Learning (QFL) amidst the imperfections of real-world quantum hardware and data, and the pioneering of cooperative Quantum Multi-Agent Reinforcement Learning (QMARL) by harnessing fundamental quantum phenomena. Understanding these characters is essential for anyone seeking to navigate the future of decentralized, intelligent systems and to appreciate the intricate drama playing out at the intersection of quantum computing and artificial intelligence.

2. The Protagonists: Core Learning Paradigms

At the heart of our story are two protagonist paradigms: Quantum Federated Learning (QFL) and Entangled Quantum Multi-Agent Reinforcement Learning (eQMARL). These frameworks represent ambitious efforts to combine the power of quantum computing with the principles of distributed machine learning, each aiming to solve critical problems of privacy, scale, and cooperation. While they share a common quantum foundation, their missions and methods are distinct, leading them on different but equally compelling journeys.

2.1. Quantum Federated Learning (QFL): The Decentralized Idealist

• Character Role: QFL is a paradigm that combines quantum computing with federated learning. Its purpose is to perform machine learning tasks across distributed networks of quantum devices, enabling collaborative model training without centralizing sensitive client data, thereby preserving privacy.

• Modus Operandi: The QFL protocol follows a precise, four-step procedure for each round of collaborative learning:

1. Quantum Encoding: Clients transform their classical local data into quantum states for processing. This is a crucial first step, using methods such as basis, amplitude, phase, or entanglement encoding to represent classical information in the language of qubits.

2. Local Model Training: Each client independently trains a local quantum or hybrid quantum-classical model. These models, often implemented as Variational Quantum Circuits (VQCs) or Quantum Neural Networks (QNNs), learn from the client’s local data.

3. Quantum Model Sharing: Clients share their trained local models with a central server for aggregation. This can be done through two distinct channels. Using classical channels, clients extract and transmit classical parameters (like the rotation angles of quantum gates). Using quantum channels, clients can directly transfer the quantum states of their models via quantum teleportation, preserving quantum correlations like entanglement.

4. Quantum Model Aggregation: The central server integrates the local models into an improved global model. Similar to sharing, this can be a classical parameter aggregation (using algorithms like FedAvg to average gate angles) or a more advanced quantum state aggregation that directly combines the quantum states to maintain coherence.

• Core Motivation: QFL’s primary mission is to leverage the unique benefits of quantum computation—such as faster processing and more effective handling of complex data—to enhance the efficiency and scalability of decentralized learning, all while upholding the core federated promise of data privacy.
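The classical-channel variant of steps 3 and 4 can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function name `fedavg_angles`, the sample counts, and the angle values are hypothetical, and it assumes every client uses the same circuit layout so that the angle vectors line up position by position.

```python
import numpy as np

def fedavg_angles(client_angles, client_sizes):
    """FedAvg-style weighted average of per-client gate rotation angles."""
    angles = np.asarray(client_angles, dtype=float)   # shape: (clients, params)
    weights = np.asarray(client_sizes, dtype=float)
    weights = weights / weights.sum()                 # weights sum to 1
    return weights @ angles                           # shape: (params,)

# Three hypothetical clients, each reporting two trained gate angles (radians).
local_angles = [[0.10, 1.20], [0.30, 1.00], [0.20, 1.10]]
sample_counts = [100, 100, 200]                       # assumed local dataset sizes

global_angles = fedavg_angles(local_angles, sample_counts)
print(global_angles)
```

Plain averaging treats the angles as ordinary numbers and ignores that rotation angles are periodic, which is one reason the quantum state aggregation route mentioned in step 4 may be preferable in some settings.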

2.2. Entangled QMARL (eQMARL): The Cooperative Innovator

• Character Role: eQMARL is a novel distributed actor-critic framework for multi-agent reinforcement learning. It is engineered to foster deep collaboration between learning agents by leveraging a quantum channel as an active coordination medium, not just a data pipe.

• Core Innovation: eQMARL’s defining feature is its use of a quantum entangled split critic. In this unique architecture, the critic network—which evaluates the agents’ actions—is physically spread across the agents. The individual branches of this critic are coupled not by classical messages, but through entangled input qubits prepared by a central server. This allows agents to coordinate implicitly, their learning processes intrinsically linked by a fundamental quantum property.

• Key Advantages: This innovative approach yields several significant benefits:

◦ Eliminates Observation Sharing: Entanglement serves as the coordination medium, allowing agents to cooperate effectively without ever needing to explicitly share their local environmental data with each other or a central server, thus enhancing privacy and efficiency.

◦ Reduces Communication Overhead: By using the quantum channel for coordination, the framework significantly cuts down on the classical communication required by other MARL approaches, which often rely on transmitting intermediate model activations or raw observations.

◦ Minimizes Centralized Burden: Agent policies are tuned via joint quantum measurements performed at the central server. This drastically reduces the server’s computational load, requiring it to learn only a single scaling parameter, a stark contrast to baselines that need large, centralized neural networks.

• Core Motivation: eQMARL’s mission is to exploit quantum phenomena—specifically entanglement and the quantum channel—as untapped resources. It aims to achieve faster, more efficient, and more private cooperation in complex multi-agent systems, demonstrating that quantum properties can be a solution, not just a platform, for advanced AI challenges.
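To make the split-critic idea more concrete, here is a toy two-agent simulation. It is a sketch under strong assumptions rather than the eQMARL circuit itself: each agent is reduced to a single RY rotation driven by a scalar observation, the joint measurement is an exact ZZ expectation value, and the names `ry`, `joint_critic_value`, and `scale` are hypothetical.

```python
import numpy as np

Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def ry(theta):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def joint_critic_value(obs_a, obs_b, scale=1.0):
    """Toy critic: entangled input, local encodings, joint ZZ readout."""
    # The server prepares the entangled pair Psi+ = (|01> + |10>)/sqrt(2).
    psi = np.array([0.0, 1.0, 1.0, 0.0]) / np.sqrt(2)
    # Each agent rotates only its own qubit, using only its own observation.
    psi = np.kron(ry(obs_a), ry(obs_b)) @ psi
    # The server evaluates the joint ZZ expectation value on both qubits.
    zz = psi @ np.kron(Z, Z) @ psi
    # A single trainable scale maps the measurement to a value estimate.
    return scale * zz

value = joint_critic_value(0.3, 0.5, scale=2.0)
print(value)
```

In this toy the readout depends on both agents' observations even though neither observation is transmitted as classical data, which is the property the split critic relies on.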

As these protagonists strive to achieve their ideals, they face a formidable and multifaceted adversary that threatens to undermine their very foundations.

3. The Central Conflict: The Challenge of Heterogeneity

The primary antagonist in the saga of distributed quantum systems is Heterogeneity. Unlike in classical systems, where this challenge is rooted primarily in data distributions and compute power, quantum heterogeneity is a multifaceted foe, arising from inconsistencies in both the quantum data being processed and the quantum hardware itself. This variability can destabilize training, slow convergence, and degrade model performance, making it the critical obstacle that our protagonists must overcome to achieve robust, scalable, and practical operation.

3.1. Data Heterogeneity: The Shapeshifter

• Character Profile: Data heterogeneity in QFL manifests as differences in the quantum data representations among clients. Even if they start with identical classical information, variations in encoding or hardware can cause their quantum states to diverge, making it difficult to find a common ground for learning.

• Quantum vs. Classical Conflict: The battle against data heterogeneity in the quantum realm is fundamentally different from its classical counterpart. The unique laws of quantum mechanics introduce new rules of engagement.

• Manifestations: This antagonist appears in several forms:

◦ Heterogeneous Quantum Encoding: This occurs when clients use different methods to encode their data (e.g., amplitude vs. phase encoding) or apply different pre-treatment steps before encoding. The result is a set of inconsistent quantum state representations that are difficult to aggregate into a coherent global model.

◦ Multimodal Data: This challenge arises when different clients contribute varied types of inputs, such as quantum states from a sensor, classical text, and images. Integrating these disparate modalities can skew the global model, biasing it towards clients with richer or higher-quality data.
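A small simulation shows how quickly identical classical data can diverge once it is encoded. The sketch below assumes two simple textbook encodings (amplitudes of a normalised vector, and one phase-carrying qubit per feature); the helper names and the feature values are hypothetical.

```python
import numpy as np

def amplitude_encode(x):
    """Store the features as the amplitudes of a normalised state vector."""
    x = np.asarray(x, dtype=complex)
    return x / np.linalg.norm(x)

def phase_encode(x):
    """One qubit per feature: (|0> + e^{i x_k}|1>)/sqrt(2), tensored together."""
    state = np.array([1.0 + 0j])
    for xk in x:
        qubit = np.array([1.0, np.exp(1j * xk)]) / np.sqrt(2)
        state = np.kron(state, qubit)
    return state

features = [0.6, 0.8]

# Different encodings: the resulting states do not even share a dimension.
psi_amp = amplitude_encode(features)      # 2 amplitudes, i.e. 1 qubit
psi_phase = phase_encode(features)        # 2 features, i.e. 2 qubits
print(psi_amp.shape, psi_phase.shape)

# Same encoding, different pre-treatment: one client rescales its features by 2.
psi_scaled = phase_encode([2 * f for f in features])
fidelity = abs(np.vdot(psi_phase, psi_scaled)) ** 2
print(fidelity)                           # below 1: the two states differ
```

A fidelity below 1 means the two clients are describing the same data with measurably different quantum states, which is the mismatch the aggregation step then has to cope with.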

3.2. System Heterogeneity: The Brute Force

• Character Profile: System heterogeneity represents the brute-force challenges imposed by physical variances in quantum hardware and model architectures across the client network. No two quantum devices are perfectly alike, and these differences directly impact performance.

• Manifestations: This challenge takes shape through several physical limitations:

◦ Heterogeneous PQC Architecture: Due to hardware constraints, clients may use Parameterized Quantum Circuits (PQCs) of varying depths and complexity. This mismatch complicates the process of global model aggregation and raises fairness issues, as clients with more powerful hardware may disproportionately influence the final model.

◦ Varying Number of Qubits: The number of available qubits differs across devices, directly limiting the computational power and data representation capabilities of certain clients. This creates an imbalance in what each client can contribute to the federated task.

◦ Inherent Quantum Noise: Every quantum device has a unique noise profile, leading to inconsistent model updates. This noise comes from three primary sources: Decoherence, the loss of a qubit’s quantum state due to environmental interaction; Gate Noise, errors that occur during quantum operations due to hardware faults; and Measurement Irregularities, errors introduced when a qubit’s final state is read out.
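The effect of differing noise profiles can be illustrated with a single qubit. The sketch below uses a depolarizing channel as a stand-in noise model, which is an assumption made for simplicity; the function names and the noise strengths are hypothetical.

```python
import numpy as np

Z = np.array([[1, 0], [0, -1]], dtype=complex)
I2 = np.eye(2, dtype=complex)

def ry(theta):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def depolarize(rho, p):
    """With probability p, replace the state by the maximally mixed state."""
    return (1 - p) * rho + p * I2 / 2

def noisy_expectation(theta, p):
    """<Z> after RY(theta)|0>, measured on a device with noise strength p."""
    psi = ry(theta) @ np.array([1, 0], dtype=complex)
    rho = depolarize(np.outer(psi, psi.conj()), p)
    return float(np.real(np.trace(Z @ rho)))

theta = 0.5                                # same circuit parameter on every client
for p in (0.0, 0.05, 0.20):                # assumed per-device noise strengths
    print(p, noisy_expectation(theta, p))
```

The same circuit and the same parameter yield a different measured value on each device, so gradients and model updates computed from those values inherit the device's noise profile.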

To defeat this powerful antagonist, our protagonists must rely on a set of specialized allies and a well-stocked armory of strategies.

4. The Allies & Armory: Enablers and Solutions

To confront the challenge of heterogeneity and pioneer new forms of cooperation, QML frameworks cannot fight alone. They rely on a specialized set of tools and fundamental quantum properties that act as powerful allies. The core concepts of Quantum Entanglement and the Quantum Channel serve as key enablers, providing capabilities not found in the classical world. Alongside them, a growing arsenal of Mitigation Strategies provides the specific weaponry needed to combat the disruptive effects of heterogeneity in QFL.

4.1. The Mystical Force: Quantum Entanglement

• Definition: Quantum entanglement is a fundamental property of quantum mechanics in which the states of two or more qubits become intrinsically linked. The link persists even when the qubits are physically separated: measurement outcomes on one qubit are correlated with outcomes on the other, although these correlations cannot by themselves be used to send a message.

• Role in eQMARL: In the eQMARL framework, entanglement is the crucial coordination medium. It is used to couple the split critic VQCs across different agents, effectively weaving their individual learning processes together. This allows them to implicitly coordinate their policies and learn cooperative strategies without needing to classically communicate their private observations. To establish this connection, the framework can employ any of the four maximally entangled two-qubit states known as the Bell states (Φ+, Φ−, Ψ+, and Ψ−). While all are viable, the reported experimental results show that the Ψ+ state provides a clear advantage in convergence speed and final score, establishing it as the most effective ally for eQMARL’s cooperative mission.
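For readers who want to see the four Bell states written out, the following sketch builds them as plain state vectors and checks two standard properties: they are mutually orthonormal, and either qubit taken alone looks completely random. The dictionary keys and the helper name `reduced_first_qubit` are illustrative choices.

```python
import numpy as np

s = 1 / np.sqrt(2)
# Amplitudes in the basis order |00>, |01>, |10>, |11>.
bell = {
    "Phi+": s * np.array([1.0, 0.0, 0.0, 1.0]),
    "Phi-": s * np.array([1.0, 0.0, 0.0, -1.0]),
    "Psi+": s * np.array([0.0, 1.0, 1.0, 0.0]),
    "Psi-": s * np.array([0.0, 1.0, -1.0, 0.0]),
}

def reduced_first_qubit(psi):
    """Density matrix of the first qubit after tracing out the second."""
    m = psi.reshape(2, 2)          # m[a, b] is the amplitude of |a b>
    return m @ m.conj().T

# Gram matrix of inner products: the identity if the states are orthonormal.
states = list(bell.values())
gram = np.array([[np.vdot(a, b) for b in states] for a in states])
print(np.allclose(gram, np.eye(4)))

# Each qubit on its own is maximally mixed (density matrix I/2).
for name, psi in bell.items():
    print(name, np.allclose(reduced_first_qubit(psi), np.eye(2) / 2))
```

The second check is why entanglement acts as a shared resource rather than a message: all the structure lives in the joint state, and none of it is visible to an agent holding only one qubit.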

4.2. The Conduit: The Quantum Channel

• Definition: A quantum channel is a communication medium that allows for the direct transfer of quantum states (qubits). Unlike a classical channel that transmits bits (0s and 1s), a quantum channel preserves delicate quantum properties like coherence and entanglement during transmission.

• Role in QFL and eQMARL: This conduit plays a vital role in both protagonist frameworks. In QFL, it enables an advanced form of model sharing where entire quantum models can be transferred via quantum teleportation. In eQMARL, it is the essential medium for the central server to distribute entangled qubits to the agents, establishing the foundational link for the split critic architecture.

4.3. The Toolkit: Mitigation Strategies for QFL

• Overview: To combat the specific data and system-level inconsistencies in QFL, researchers have developed a toolkit of mitigation strategies. These techniques are designed to harmonize clients and stabilize the federated learning process in the face of quantum-specific heterogeneity.

• Strategic Categories: The arsenal is organized into four main categories:

◦ Encoding-Level Mitigations: These strategies address Hilbert space incompatibility by aligning quantum data distributions, using techniques like Encoding-Aware Weighting to prioritize clients with more consistent data representations.

◦ Model-Architecture Strategies: To handle mismatched PQC structures or qubit counts, these approaches use methods such as Layer-Wise PQC Aggregation to ensure only compatible model layers are combined.

◦ Hardware-Aware Mitigations: These strategies account for device-level limitations like qubit counts and gate fidelities, employing techniques like Fairness-Aware Weighting to scale client contributions based on their hardware capacity.

◦ Noise-Resilient Strategies: To counter the effects of decoherence, gate errors, and measurement noise, these approaches use methods such as Noise-Aware Aggregation to weight client updates based on their inverse noise variance, giving more credence to updates from stable devices.
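Two of the strategies above lend themselves to a short sketch. Both functions are interpretations under stated assumptions, not the published algorithms: `noise_aware_aggregate` assumes each client reports a scalar noise-variance estimate, and `layerwise_aggregate` assumes layer k has the same shape on every client that has it. All names and numbers are hypothetical.

```python
import numpy as np

def noise_aware_aggregate(updates, noise_variances):
    """Weight each client's update by its inverse noise variance."""
    updates = np.asarray(updates, dtype=float)         # (clients, params)
    inv_var = 1.0 / np.asarray(noise_variances, dtype=float)
    weights = inv_var / inv_var.sum()                  # weights sum to 1
    return weights @ updates

def layerwise_aggregate(client_layers):
    """Average layer k over only those clients whose circuit has a layer k."""
    depth = max(len(layers) for layers in client_layers)
    merged = []
    for k in range(depth):
        available = [layers[k] for layers in client_layers if len(layers) > k]
        merged.append(np.mean(available, axis=0))
    return merged

# A stable device (variance 0.01) and a noisier one (variance 0.04).
agg = noise_aware_aggregate([[0.2, 1.0], [0.4, 1.4]], [0.01, 0.04])
print(agg)

# A shallow two-layer client and a deeper three-layer client.
shallow = [np.array([0.1, 0.2]), np.array([0.3, 0.4])]
deep = [np.array([0.3, 0.4]), np.array([0.5, 0.6]), np.array([0.7, 0.8])]
merged_layers = layerwise_aggregate([shallow, deep])
print(merged_layers)
```

In the first example the stable device receives four times the weight of the noisy one. In the second, the third layer comes from the deep client alone, which hints at the fairness question raised earlier about better-equipped clients.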

Armed with these allies and tools, our protagonists can be instantiated in the real world as concrete, high-performing frameworks.

5. Featured Players: The Frameworks in Action

The abstract concepts and strategies come to life through specific, “featured” characters—fully realized frameworks that demonstrate how these components are assembled into functional, high-performing systems. SPQFL and the eQMARL architecture serve as two such concrete examples, each showcasing a successful implementation that directly addresses the core challenges of its respective domain.

5.1. SPQFL: The Resilient Hero

• Identity: The Sporadic Personalized Quantum Federated Learning (SPQFL) protocol is a case study in resilience, specifically designed to tackle the twin challenges of quantum noise and non-IID data distributions that plague real-world QFL deployments.

• Key Abilities: SPQFL’s success stems from two primary design choices that allow it to adapt and thrive in heterogeneous environments:

1. Regularization-Based Personalization: This mechanism penalizes overfitting on local client data, which helps to stabilize the training process for clients with diverse data distributions and prevents their local models from diverging too far from the global objective.

2. Sporadic Learning Mechanism: This adaptive filter selectively allows only those clients who meet a minimum validation accuracy threshold to participate in the global aggregation. This prevents noisy or sub-par updates from low-quality devices or difficult data splits from polluting and degrading the shared global model.

• Accomplishments: The combination of these abilities leads to significant performance gains. Compared to a regular QFL baseline, SPQFL demonstrates superior accuracy across multiple benchmark datasets, achieving improvements of 3.03% on MNIST, 2.51% on FashionMNIST, 3.71% on CIFAR-100, and 6.25% on Caltech-101.
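The two abilities can be sketched as follows. This is an illustration under assumptions, not the SPQFL implementation: the personalization penalty is modelled here as a proximal term pulling local parameters toward the global model, the filter is a plain accuracy threshold followed by an unweighted mean, and every name and value is hypothetical.

```python
import numpy as np

def regularized_step(theta, grad_local, theta_global, lr=0.1, lam=0.5):
    """One gradient step on local_loss + (lam / 2) * ||theta - theta_global||^2."""
    return theta - lr * (grad_local + lam * (theta - theta_global))

def sporadic_aggregate(client_thetas, val_accuracies, threshold, theta_global):
    """Average only the clients whose validation accuracy meets the threshold."""
    kept = [t for t, acc in zip(client_thetas, val_accuracies) if acc >= threshold]
    if not kept:
        return theta_global            # no client qualified: keep the old model
    return np.mean(kept, axis=0)

theta_global = np.array([0.0, 0.0])

# Local step: with a zero local gradient, the penalty alone pulls theta back.
theta_local = regularized_step(np.array([1.0, 1.0]), np.zeros(2), theta_global)
print(theta_local)

# Aggregation: the third client falls below the threshold and is skipped.
client_thetas = [np.array([0.2, 0.4]), np.array([0.4, 0.6]), np.array([5.0, -3.0])]
val_accuracies = [0.90, 0.85, 0.40]
new_global = sporadic_aggregate(client_thetas, val_accuracies, 0.6, theta_global)
print(new_global)
```

Without the filter, the outlying third update would dominate the average; with it, the global model moves only toward the clients that validated well.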

5.2. The eQMARL Architecture: The Cooperative Team

• Team Roster: The eQMARL framework operates as a tightly integrated team, with each component playing a specialized role to achieve novel, entanglement-driven cooperation.

◦ The Decentralized Agents: Stationed at the edge, these are the actors of the system. They interact with the environment, execute a local actor policy, and encode their local observations into their respective branch of the quantum critic.